A robust online presence hinges on a well-optimized technical foundation. In search engine optimization (SEO), the ability to evaluate a website’s technical health precisely is not merely advantageous; it is essential. Screaming Frog SEO Spider is a professional-grade desktop application built for comprehensive website auditing. It crawls websites systematically, emulating the behavior of search engine bots such as Googlebot and Bingbot.1 This simulation provides a critical advantage: many problems a search engine bot would encounter also surface in a Screaming Frog crawl. SEO professionals can therefore detect and fix them before search engines penalize a site or fail to index crucial pages.1

The tool’s primary purpose is to examine a site’s URLs in depth, evaluating elements such as meta tags, header tags, and internal links to pinpoint issues during a technical SEO audit. It is particularly good at uncovering technical problems that manual review tends to miss, including broken links, problematic redirects, and duplicate content.1 Screaming Frog is available for Windows, macOS, and Linux, so SEO professionals can use it on any major operating system to improve search rankings and user experience.1 Its hybrid storage engine saves crawl data both in RAM and on disk, which lets the tool crawl websites ranging from a handful of pages to millions of URLs.1 For dynamic websites built with JavaScript frameworks such as Angular, React, or Vue.js, Screaming Frog also offers JavaScript rendering. It uses a headless Chromium browser to execute JavaScript and construct the Document Object Model (DOM), uncovering content and links that are not visible in the raw HTML.1

Because Screaming Frog is designed to mirror how search engine bots crawl, it plays a central role in technical SEO audits. Fixing the issues it reports generally improves crawlability and indexability for real search engines, which supports search visibility and performance. Identifying issues the way search engines encounter them turns SEO from reactive firefighting into a preventative practice, aimed at sustained organic growth rather than recovery from penalties. This guide walks through setting up Screaming Frog, using its core features, performing common audit tasks, and applying its findings for strategic SEO gains.

Screaming Frog’s Core Capabilities: Your Essential Technical SEO Toolkit

Screaming Frog SEO Spider is equipped with a comprehensive suite of features that form an essential toolkit for any SEO professional. These capabilities extend beyond basic crawling to provide in-depth analysis and strategic understanding.

Comprehensive Site Crawling & Auditing Capabilities

The tool offers real-time data display, populating URLs as they are discovered during a crawl, which provides immediate insights into a website’s technical SEO health.1 It collects an extensive array of data on URLs, page titles, meta descriptions, header tags, broken links, redirects, and duplicate content.1 A key strength lies in its issue prioritization framework, categorizing findings as “Issues” (critical errors requiring immediate attention), “Warnings” (potential problems that should be investigated), and “Opportunities” (areas for optimization and improvement). These categories are often assigned priority levels (High, Medium, Low) based on their potential impact on SEO performance.1

The sheer volume of data Screaming Frog produces could be overwhelming without proper organization.1 Grouping findings into “Issues, Warnings, Opportunities” and assigning each a priority turns raw data into a clear diagnostic roadmap, which keeps even complex audits efficient. This built-in prioritization lets SEO professionals tackle high-impact fixes first, spending time and resources where they are most likely to affect performance and business objectives.

Customizable Crawl Settings & JavaScript Rendering

Screaming Frog provides granular control over the crawling process, allowing users to define precisely what is crawled. Options include restricting the crawl to specific subfolders with the “include” and “exclude” functions, crawling only HTML, and choosing whether to crawl resources such as images, CSS, and JavaScript.1 Users can set crawl limits such as the total number of URLs, crawl depth, and the number of query string parameters, which keeps crawls focused and manageable, especially on very large sites.1 The tool also supports user-agent switching, so it can crawl as different bots (e.g., Googlebot, Bingbot, or a custom user agent) to simulate different crawler behaviors and surface issues specific to certain search engines.1
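Screaming Frog’s include and exclude fields take regular expressions matched against URLs. The minimal sketch below imitates that filtering in Python so patterns can be sanity-checked before a crawl. It assumes that each pattern must match the whole URL (hence the leading and trailing .*) and that an exclude match always wins. The function name, patterns, and URLs are hypothetical.

```python
import re


def in_crawl_scope(url, include=(), exclude=()):
    """Return True if a URL survives include/exclude regex filtering.

    Assumption for this sketch: a pattern must match the entire URL
    (re.fullmatch), so folder patterns carry a leading and trailing ".*".
    """
    # When include patterns are given, a URL must match at least one of them.
    if include and not any(re.fullmatch(p, url) for p in include):
        return False
    # In this sketch, any exclude match removes the URL, even if it was included.
    return not any(re.fullmatch(p, url) for p in exclude)


urls = [
    "https://example.com/blog/seo-basics",
    "https://example.com/blog/tag/seo",
    "https://example.com/shop/shoes",
]
scoped = [
    u for u in urls
    if in_crawl_scope(u, include=[r".*/blog/.*"], exclude=[r".*/tag/.*"])
]
print(scoped)  # ['https://example.com/blog/seo-basics']
```

Testing patterns this way catches the most common mistake: a pattern without the surrounding .* silently matches nothing.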

For modern websites that rely heavily on client-side JavaScript, the JavaScript rendering feature is indispensable.1 It renders pages in a headless browser and executes JavaScript to construct the DOM before crawling. This reveals content and links that are not discoverable in the raw HTML and gives a more accurate picture of what search engines see.1 Without it, a significant share of the content and internal links on dynamic sites would be missed, producing incomplete and inaccurate audit results. For SEO professionals, the implication is that raw HTML alone is no longer sufficient for auditing JavaScript-dependent sites. Best practices for JavaScript rendering include setting the rendering mode to ‘JavaScript’, choosing an appropriate user-agent and window size, ensuring all necessary resources are enabled for crawling, and monitoring the ‘Pages with Blocked Resources’ filter in the JavaScript tab.1
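To see why raw HTML can undercount links, the sketch below parses a page with Python’s standard-library HTMLParser. Like a crawl with rendering switched off, this parser reads markup without executing scripts. The page and its script are hypothetical. A rendering crawler that ran the script would also discover /products.

```python
from html.parser import HTMLParser


class LinkCollector(HTMLParser):
    """Collect href values from <a> tags present in the static markup."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


RAW_HTML = """
<html><body>
  <a href="/about">About</a>
  <div id="nav"></div>
  <script>
    // Executed only by a browser: adds a link that is absent from the raw HTML.
    var a = document.createElement("a");
    a.href = "/products";
    a.textContent = "Products";
    document.getElementById("nav").appendChild(a);
  </script>
</body></html>
"""

collector = LinkCollector()
collector.feed(RAW_HTML)
print(collector.links)  # ['/about']; /products only exists after rendering
```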

Powerful API Integrations

Screaming Frog significantly enhances its analytical capabilities through seamless API integrations with external platforms such as Google Analytics (GA), Google Search Console (GSC), PageSpeed Insights (PSI), Ahrefs, Majestic, and Moz.1 This connectivity allows the tool to fetch user and performance data from GA and GSC, as well as link data from Ahrefs, Majestic, and Moz, directly into the crawl results.1 This provides a holistic view of a website’s health, enabling SEO professionals to prioritize fixes and optimization efforts based on real-world metrics like revenue, conversions, traffic, impressions, and clicks.1

Raw technical data, such as a 404 error, is valuable on its own, but its real impact becomes clear only when it is set against performance metrics. Integrating GA and GSC data shows whether a broken link sits on a high-traffic page, or whether a non-indexed URL still earns significant impressions.1 Connecting technical health to business impact in this way turns a technical audit from a checklist of errors into a business tool. Recommendations can be justified with measurable value, and teams can focus on the fixes most likely to improve organic traffic, user engagement, and conversion rates. After Google Analytics 4 is integrated, Screaming Frog adds columns such as Sessions, Engaged Sessions, Engagement Rate, Views, Conversions, Event Count, and Total Revenue, and up to 65 metrics from the GA4 API can be selected.1 Google Search Console integration adds Clicks, Impressions, CTR, and Position from the Search Analytics API. If the URL Inspection API is also enabled, further columns include Summary (e.g., ‘URL is on Google’, ‘URL is not on Google’), Coverage (the reason for the status), Last Crawl, Crawled As, Crawl Allowed, Indexing Allowed, User-Declared Canonical, Google-Selected Canonical, Mobile Usability, Mobile Usability Issues, AMP Results, AMP Issues, Rich Results, Rich Results Types, Rich Results Types Errors, and Rich Results Warnings.1
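Once data has been exported, the same prioritization can be reproduced outside the tool. The minimal sketch below assumes a crawl export and a Search Console export saved as CSV. The inline data is hypothetical, and the column names (Address, Status Code, URL, Clicks) are assumptions that should be adjusted to match the actual files.

```python
import csv
import io

# Hypothetical exports; column names are assumptions to match to real files.
CRAWL_CSV = """Address,Status Code
https://example.com/,200
https://example.com/old-guide,404
https://example.com/old-promo,404
"""
GSC_CSV = """URL,Clicks,Impressions
https://example.com/,900,20000
https://example.com/old-guide,120,4000
https://example.com/old-promo,3,150
"""


def prioritize_errors(crawl_csv, gsc_csv):
    """Return (url, status, clicks) for 4xx/5xx URLs, most clicks first."""
    clicks = {
        row["URL"]: int(row["Clicks"])
        for row in csv.DictReader(io.StringIO(gsc_csv))
    }
    errors = []
    for row in csv.DictReader(io.StringIO(crawl_csv)):
        status = int(row["Status Code"])
        if status >= 400:
            # URLs with no Search Console row are treated as zero clicks.
            errors.append((row["Address"], status, clicks.get(row["Address"], 0)))
    return sorted(errors, key=lambda e: e[2], reverse=True)


for url, status, n in prioritize_errors(CRAWL_CSV, GSC_CSV):
    print(status, n, url)
```

Sorting by clicks puts the broken page that still attracts search traffic at the top of the fix list.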

The following table summarizes key API integrations and their strategic value:

| API/Tool | Key Data Points Fetched | Direct SEO Benefit/Use Case |
| --- | --- | --- |
| Google Analytics 4 | Sessions, Engaged Sessions, Conversions, Total Revenue | Prioritize technical fixes based on actual traffic and revenue impact; identify high-value pages with technical issues. |
| Google Search Console | Clicks, Impressions, CTR, Position, Indexing Allowed, Canonical | Understand search performance context for technical errors; identify non-indexed high-impression pages; validate canonicalization. |
| Ahrefs, Majestic, Moz | Backlinks, Referring Domains, Anchor Text | Analyze link equity distribution for internal linking strategies; identify lost link value from broken external links. |

Advanced Custom Extraction

One of the most powerful features of Screaming Frog is its custom extraction capability, which allows users to scrape virtually any specific information from a website in bulk.1 This functionality supports various methods, including CSS Path (straightforward for those familiar with CSS selectors), XPath (a more precise method particularly useful for complex HTML structures), and Regex (regular expressions for flexible text pattern matching).1 The setup for custom extraction is accessed via “Configuration > Custom > Extraction”.1

With the release of version 21, Screaming Frog upgraded its XPath support to versions 2.0, 3.0, and 3.1, unlocking a suite of powerful advanced functions.1 These include string-join() to combine multiple pieces of content, distinct-values() to extract unique values, starts-with()/ends-with() to filter results, matches() to combine XPath with Regex, exists() to check whether an HTML snippet is present, format-dateTime() to format dates, if-then-else expressions for conditional extraction, and tokenize() to split values by a delimiter.1

This advanced custom extraction, especially with XPath’s expanded functionality, turns Screaming Frog from a standard crawler into a flexible data scraping and analysis tool.1 Users are no longer limited to predefined SEO metrics and can define and extract whatever data points their audit or research requires.1 This enables highly specialized audits, such as competitive analysis of pricing structures, identification of missing custom attributes, or validation of tracking codes. Such analysis surfaces business-specific information that off-the-shelf reports would overlook.

The table below illustrates various custom extraction methods and their advanced use cases:

| Extraction Method | Example Query/Function | Practical Use Case | SEO Advantage |
| --- | --- | --- | --- |
| CSS Path | h2.product-title | Scrape all product H2s on an e-commerce site. | On-page content audit; identify keyword targeting. |
| XPath (Advanced) | string-join(//div[@class='price']/text(), ', ') | Consolidate fragmented pricing data from complex HTML. | Competitive pricing analysis; identify pricing discrepancies. |
| Regex | utm_source=[^&"]+ | Find links in page HTML that carry UTM parameters. | Campaign tracking validation. |
| XPath (Advanced) | exists(//meta[@name='robots' and contains(@content, 'noindex')]) | Check for the presence of a noindex tag on specific pages, including combined values such as "noindex, follow". | Ensure correct indexation directives; prevent accidental de-indexing. |
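Before pasting a regex into Configuration > Custom > Extraction, it can help to prototype it against a page’s HTML. The minimal sketch below uses a hypothetical HTML fragment and a pattern that captures the utm_source value from link URLs. Screaming Frog’s regex engine may differ from Python’s re module in edge cases, so the final pattern should still be confirmed in the tool.

```python
import re

# Hypothetical page HTML; in practice, paste the source of the page being tested.
HTML = """
<a href="/pricing?utm_source=newsletter&utm_medium=email">Pricing</a>
<a href="/about">About</a>
<a href="/blog?utm_source=footer">Blog</a>
"""

# Capture the utm_source value, stopping at the next '&' or the closing quote.
UTM_SOURCE = re.compile(r'href="[^"]*[?&]utm_source=([^&"]+)')

print(UTM_SOURCE.findall(HTML))  # ['newsletter', 'footer']
```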

Visualizations, Reports & Data Export Options

Screaming Frog offers interactive crawl visualizations, including ‘force-directed crawl diagrams’, ‘3D force-directed crawl diagrams’, and ‘crawl tree graphs’, which provide a visual representation of site architecture and the shortest paths to pages.1 Directory tree visualizations further break down URLs into their components, helping to expose structural flaws within the website.1

All collected data can be exported into various spreadsheet formats for further analysis.1 The tool provides “Bulk Export” options for different reports and a “Multi-Export” feature, allowing users to select and export data from multiple tabs or reports in a single click.1 Additionally, Screaming Frog can crawl and parse PDF documents, extracting their properties, reviewing content (such as word count, readability, and spelling/grammar), and auditing internal and external links within them.1

Screaming Frog provides immense data depth, and its interactive visualizations and flexible export options help with the task of interpreting that data. Raw data tables can be overwhelming. Visualizations make complex site structures comprehensible, and diverse export formats allow further analysis in specialized tools.1 These features also make it easier to communicate complex technical issues to non-technical stakeholders, which fosters collaboration and speeds up the implementation of SEO recommendations.1 By making technical findings accessible, Screaming Frog helps bridge the gap between SEO teams and management or development teams, so that identified issues are acted on rather than merely found. Raw data exports also make Screaming Frog a useful data source for custom dashboards and advanced analytics workflows.

Step-by-Step: Performing a Complete Technical SEO Audit with Screaming Frog

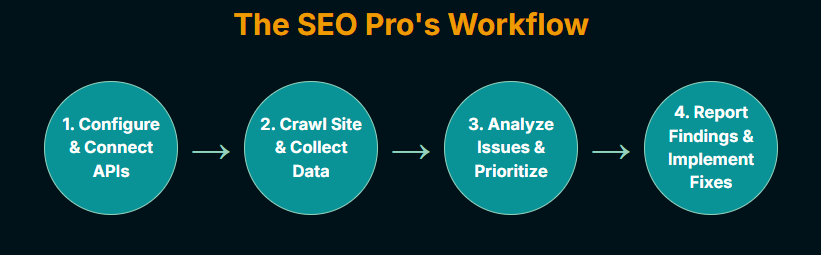

Conducting a complete technical SEO audit with Screaming Frog involves a systematic approach, from initial setup to detailed analysis of critical issues.

Getting Started: Setup & Configuration

Before initiating a crawl, proper setup and configuration are essential for optimal performance and accurate data collection.

Installation & Memory Allocation:

The first step is to download and install the Screaming Frog SEO Spider. For crawling millions of URLs, the recommended hardware includes a 64-bit operating system, a 500GB or 1TB Solid State Drive (SSD), and 16GB of RAM.1 Database storage mode is essential for good performance on large crawls.1 Memory allocation can be adjusted under File > Settings > Memory Allocation. For crawls of up to 2 million URLs, allocating 4GB of RAM is recommended, while leaving at least 2-4GB of RAM free for the system.1 The application must be restarted for changes to take effect.1

Basic Crawl Settings:

To begin a crawl, simply enter the URL of the website to be audited in the “Enter URL to spider” box and click “Start.” Users can configure basic options, such as whether to crawl only HTML content or include all resources like images, CSS, and JavaScript.

Integrating Analytics & Search Console for Prioritization:

A crucial setup step is integrating Screaming Frog with Google Analytics and Google Search Console via Configuration > API Access. This lets the tool fetch user and performance data from those platforms and attach real-world metrics to the crawl data.1 Users can select metrics such as Sessions, Engaged Sessions, and Conversions from GA4, or Clicks, Impressions, and Position from GSC.1 Connecting these sources before or during the audit puts technical findings in the context of business metrics from the start. An SEO professional therefore sees not just what is broken, but which broken elements affect the business most. The audit becomes a targeted, ROI-focused exercise in which the most impactful fixes are identified and prioritized first.

Identifying Critical Technical Issues (The Core Audit)

Screaming Frog’s strength lies in its ability to pinpoint a wide array of technical issues that can impede search engine performance and user experience.

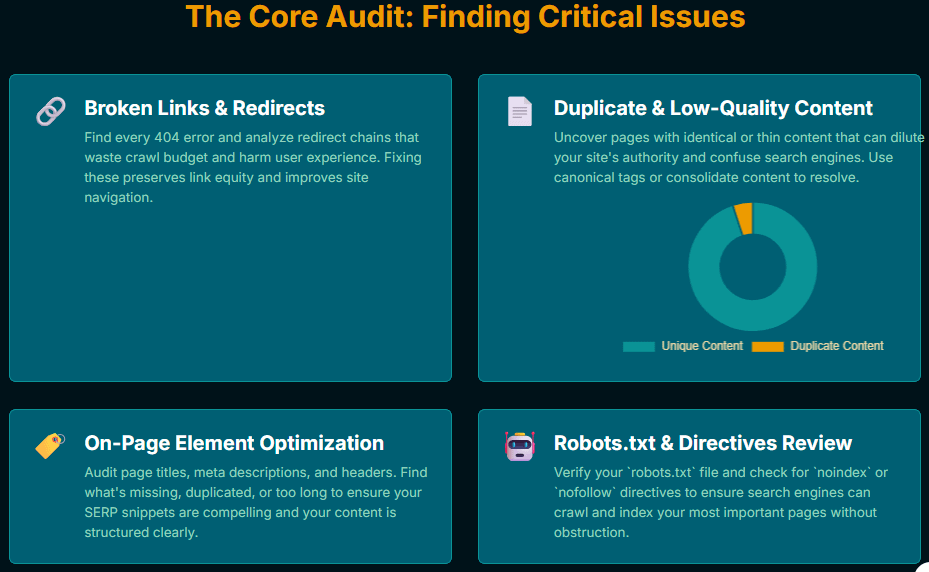

Broken Links & Redirect Chains

Screaming Frog quickly pinpoints broken links (404 errors) and server errors across a site.1 It also audits redirects, distinguishing temporary (302) from permanent (301) redirects and detecting redirect chains and loops.1 To find broken links, open the ‘Response Codes’ tab and apply the ‘Client Error (4XX)’ filter.1 For redirects, use the ‘Redirection (3XX)’, ‘Redirect Chain’, and ‘Redirect Loop’ filters.1 The errors and their source URLs can be bulk exported so developers can fix them efficiently.1 Fixing these issues prevents users from hitting dead ends, preserves link equity, and improves crawl efficiency by removing unnecessary hops. Left unaddressed, broken links and inefficient redirects drain a site’s SEO performance, harming both crawlability and user satisfaction.
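The logic behind chain and loop detection is straightforward: follow each redirect hop and stop when a URL repeats. The sketch below is a simplified illustration over a hypothetical, already-collected redirect map, not Screaming Frog’s implementation. A live check would issue HTTP requests and read each Location header instead.

```python
def trace_redirect(start, redirects):
    """Follow a redirect map from `start` and classify the result.

    `redirects` maps a URL to the target of its redirect (hypothetical,
    already-collected data). Returns (hops, verdict), where verdict is
    'no redirect', 'single hop', 'chain', or 'loop'.
    """
    hops = [start]
    seen = {start}
    while hops[-1] in redirects:
        target = redirects[hops[-1]]
        hops.append(target)
        if target in seen:  # revisiting a URL means the redirects never resolve
            return hops, "loop"
        seen.add(target)
    count = len(hops) - 1
    if count == 0:
        return hops, "no redirect"
    return hops, ("single hop" if count == 1 else "chain")


redirects = {
    "/old": "/interim",
    "/interim": "/new",
    "/a": "/b",
    "/b": "/a",
    "/moved": "/final",
}
for url in ["/old", "/a", "/moved", "/new"]:
    hops, verdict = trace_redirect(url, redirects)
    print(verdict, " -> ".join(hops))
```

A "chain" verdict flags a case where the first URL should point straight at the last one.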

Auditing Site Architecture & Crawl Depth

Screaming Frog helps analyze internal linking structures to understand how PageRank (or link equity) flows through a site and to find pages buried deep in the site architecture.1 To assess crawl depth, open the ‘Internal’ or ‘Links’ tab and examine the ‘Crawl Depth’ column.1 Sorting it from high to low reveals pages that are many clicks away from the homepage and need more prominent internal links.1 The deeper a page sits in the site structure, the less likely it is to be crawled, indexed, and ranked effectively.1 Important pages buried deep are therefore under-optimized for organic visibility. Adding internal links to deeply buried, high-value pages improves PageRank flow and helps search engines discover and prioritize that content, which boosts crawlability, indexability, and rankings. Visualizing the site architecture with the ‘crawl tree graph’ or ‘force-directed crawl diagram’ can further illuminate structural issues.1
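Crawl depth is essentially the shortest click path from the start URL, which is what a breadth-first search computes. The minimal sketch below runs over a hypothetical internal-link graph and assumes the crawl starts at the homepage. It shows how a product reachable only through paginated category pages ends up four clicks deep.

```python
from collections import deque


def crawl_depths(links, start):
    """Breadth-first search: minimum clicks from `start` to each reachable page.

    `links` maps each page to the pages it links to (hypothetical data,
    e.g. assembled from an exported inlinks report).
    """
    depth = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depth:  # BFS reaches each page first by a shortest path
                depth[target] = depth[page] + 1
                queue.append(target)
    return depth


site = {
    "/": ["/category", "/about"],
    "/category": ["/category?page=2"],
    "/category?page=2": ["/category?page=3"],
    "/category?page=3": ["/product-x"],
}
depths = crawl_depths(site, "/")
print(max(depths.items(), key=lambda kv: kv[1]))  # ('/product-x', 4)
```

A single link from the homepage or a hub page to /product-x would cut its depth to 1.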

Detecting Duplicate & Near-Duplicate Content

The software can detect both exact duplicate pages (identified via MD5 hash values) and near-duplicate content, meaning pages with a high degree of similarity.1 To use this feature, enable ‘Near Duplicates’ under Config > Content > Duplicates and refine the ‘content area’ settings to specify which parts of the page are considered in the similarity analysis.1 After the crawl, the ‘Content’ tab displays similarity matches for pages that are identical or highly similar.1 A perfect content match shows a 100% similarity score with one near duplicate.1 Duplicate or near-duplicate content can confuse search engines about which version of a page to index and rank, potentially diluting PageRank and lowering visibility.1 Resolving duplication is therefore about consolidating authority as well as avoiding penalties. Every duplicate is a missed opportunity to strengthen a single, authoritative page, and fixing it prevents the dilution of PageRank and keyword targeting. Actionable steps include consolidating content, using canonical tags, or rewriting content so each page offers unique value.1
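The two kinds of duplicate detection differ in approach. An exact duplicate produces an identical hash, while near duplicates need a similarity score. Screaming Frog’s near-duplicate detection is based on minhash. This sketch substitutes exact Jaccard similarity over three-word shingles, which minhash approximates. The page texts are hypothetical.

```python
import hashlib


def md5_of(text):
    """Exact-duplicate fingerprint: identical text yields an identical hash."""
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def shingles(text, k=3):
    """Set of overlapping k-word sequences (lower-cased)."""
    words = text.lower().split()
    return {tuple(words[i:i + k]) for i in range(len(words) - k + 1)}


def similarity(a, b, k=3):
    """Jaccard similarity of two texts' shingle sets, from 0.0 to 1.0."""
    sa, sb = shingles(a, k), shingles(b, k)
    if not sa and not sb:
        return 1.0  # two empty (or very short) texts are treated as identical
    return len(sa & sb) / len(sa | sb)


page_a = "Blue running shoes with a breathable mesh upper and a cushioned sole for daily training"
page_b = "Red running shoes with a breathable mesh upper and a cushioned sole for daily training"
page_c = page_a

print(md5_of(page_a) == md5_of(page_c))  # True: exact duplicate
print(md5_of(page_a) == md5_of(page_b))  # False: one word differs
print(round(similarity(page_a, page_b), 2))  # 0.86: near duplicate
```

A single changed word makes the hashes completely different, which is why exact hashing alone misses templated pages that vary only slightly.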

Optimizing On-Page Elements (Titles, Meta Descriptions, H1s, H2s)

Screaming Frog provides dedicated tabs for reviewing page titles, meta descriptions, H1s, and H2s.1 Filters such as ‘Missing’, ‘Duplicate’, and ‘Over X characters’ quickly pinpoint elements that are missing, duplicated, or too long.1 These elements matter for search engine understanding, click-through rate (CTR), and user experience, and together these tabs provide a 360-degree view of a website’s structure.1 Well-crafted titles and meta descriptions improve CTR in search engine results pages (SERPs), while clear H1s and H2s make content easier to read. Clear, concise headings and descriptions can also support Answer Engine Optimization (AEO), because they make information easier for search engines to extract. Actionable steps include writing unique, descriptive page titles and meta descriptions and ensuring each page has a single, relevant H1 tag.1
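The three title checks reduce to simple rules, sketched below on hypothetical data. The 60-character limit is an assumption made for illustration and should be set to the threshold configured in the audit. Screaming Frog also reports pixel width, which this sketch ignores.

```python
from collections import Counter


def audit_titles(titles, max_chars=60):
    """Flag missing, duplicate and over-length titles.

    `titles` maps URL -> title text (None or '' when absent). The
    60-character default is an assumed threshold for this sketch.
    """
    counts = Counter(t.strip() for t in titles.values() if t and t.strip())
    issues = {}
    for url, title in titles.items():
        text = (title or "").strip()
        flags = []
        if not text:
            flags.append("missing")
        else:
            if counts[text] > 1:
                flags.append("duplicate")
            if len(text) > max_chars:
                flags.append(f"over {max_chars} characters")
        if flags:
            issues[url] = flags
    return issues


titles = {
    "/": "Acme Shoes | Running and Trail Footwear",
    "/sale": "Sale",
    "/clearance": "Sale",
    "/contact": None,
    "/guide": "The Complete, Exhaustive, Step-by-Step Guide to Choosing Running Shoes for Road and Trail",
}
for url, flags in audit_titles(titles).items():
    print(url, flags)
```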

Reviewing Robots.txt & Directives

The tool lets SEO professionals assess how search engines interpret a website’s robots.txt file.1 It identifies URLs that are blocked from crawling by robots.txt, as well as those carrying noindex or nofollow directives in meta tags or HTTP headers.1 To verify robots.txt compliance, open the ‘Response Codes’ tab and apply the ‘Blocked by Robots.txt’ filter.1 The ‘Directives’ tab can be used to check for noindex, nofollow, or none directives.1 It is important to remember that robots.txt prevents crawling, not indexing: pages blocked from crawling may still appear in search results if they are linked from elsewhere.1 A related consequence is that a noindex tag on a page blocked by robots.txt is never seen, because the crawler never fetches the page. Misunderstanding this distinction can lead to critical indexing errors. Sensitive pages may be indexed despite being blocked from crawling, or important pages may drop out of the index because of an incorrect noindex tag. This audit gives precise control over how search engines interact with a site. Beyond preventing errors, it helps direct crawl budget and ensures that only intended, valuable pages are indexed. Incorrect directives can waste crawl budget on unimportant pages or, worse, keep high-value content out of search results entirely, directly affecting organic visibility and business goals.
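A quick way to test a robots.txt draft against a list of URLs is Python’s urllib.robotparser module, as in the minimal sketch below. The rules and URLs are hypothetical. This parser applies the first matching rule and does not support mid-path wildcards. Googlebot instead uses the most specific (longest) matching rule and supports * and $, so results can differ for more complex files.

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Disallow: /search
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that `user_agent` may not crawl under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


urls = [
    "https://example.com/",
    "https://example.com/private/report.html",
    "https://example.com/search?q=shoes",
    "https://example.com/blog/post",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

A blocked URL here can still be indexed from external links, and any noindex tag on it will go unseen.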

Validating Structured Data (Schema Markup)

Correct structured data implementation is vital for earning rich results in SERPs.1 Screaming Frog helps verify Schema markup by identifying validation errors and warnings related to Schema.org and Google’s rich result features.1 It checks whether types and properties exist in the Schema.org vocabulary and flags issues that prevent rich snippets from appearing.1 To enable this, select the JSON-LD, Microdata, and RDFa formats for extraction and enable Schema.org validation under Config > Spider > Extraction.1 Errors in structured data mean a website misses out on rich results, which can substantially increase visibility and click-through rates directly from the SERP. Systematic validation keeps content eligible for these enhancements. The ‘Structured Data’ tab, with its ‘Validation Errors’ and ‘Validation Warnings’ filters, details each issue, including the validation type, severity, and a message explaining how to fix it.1 Users can export a summary of unique validation errors and warnings for further testing in external tools such as Google’s Rich Results Test or the Schema Markup Validator.1
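The first step of any structured data check is extracting JSON-LD blocks and confirming they parse. The minimal sketch below does only that. It reports syntax errors and the top-level @type, and it does not validate types or properties against the Schema.org vocabulary the way Screaming Frog does. The page markup is hypothetical, and the second block contains a deliberate trailing comma.

```python
import json
from html.parser import HTMLParser


class JsonLdExtractor(HTMLParser):
    """Collect the raw text of <script type="application/ld+json"> blocks."""

    def __init__(self):
        super().__init__()
        self.blocks = []
        self._in_jsonld = False

    def handle_starttag(self, tag, attrs):
        if tag == "script" and dict(attrs).get("type") == "application/ld+json":
            self._in_jsonld = True
            self.blocks.append("")

    def handle_endtag(self, tag):
        if tag == "script":
            self._in_jsonld = False

    def handle_data(self, data):
        if self._in_jsonld:
            self.blocks[-1] += data


def check_jsonld(html):
    """Return one (status, detail) tuple per JSON-LD block on the page."""
    parser = JsonLdExtractor()
    parser.feed(html)
    results = []
    for raw in parser.blocks:
        try:
            data = json.loads(raw)
        except json.JSONDecodeError as exc:
            results.append(("error", f"invalid JSON: {exc.msg}"))
            continue
        if isinstance(data, dict) and "@type" in data:
            results.append(("ok", data["@type"]))
        else:
            results.append(("warning", "no top-level @type"))
    return results


PAGE = """
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Product", "name": "Trail Shoe"}</script>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Offer", "price": 89.00,}</script>
"""
print(check_jsonld(PAGE))
```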

The following table serves as a quick-reference guide for common technical issues and their corresponding Screaming Frog filters:

| Technical Issue | Screaming Frog Tab/Filter | SEO Impact | Remediation Goal |
| --- | --- | --- | --- |
| Broken Links (404s) | Response Codes > Client Error (4XX) | Lost link equity, poor user experience | Fix 404s; implement 301 redirects. |
| Redirect Chains/Loops | Response Codes > Redirect Chain / Redirect Loop | Slow page loading, diluted link equity | Streamline redirects to single 301s. |
| Duplicate Content | Content > Exact Duplicates / Near Duplicates | Confuses search engines, diluted authority | Consolidate content; use canonical tags. |
| Missing H1s | H1 > Missing | Reduced search engine understanding, poor UX | Add unique, relevant H1s to each page. |
| Blocked by Robots.txt | Response Codes > Blocked by Robots.txt | Prevents crawling, potential indexing issues | Adjust robots.txt to allow crawling of important pages. |
| Schema Validation Errors | Structured Data > Validation Errors | Missed rich snippets, reduced CTR | Correct Schema markup to ensure eligibility for rich results. |

Beyond the Audit: Strategic Applications for Enhanced SEO

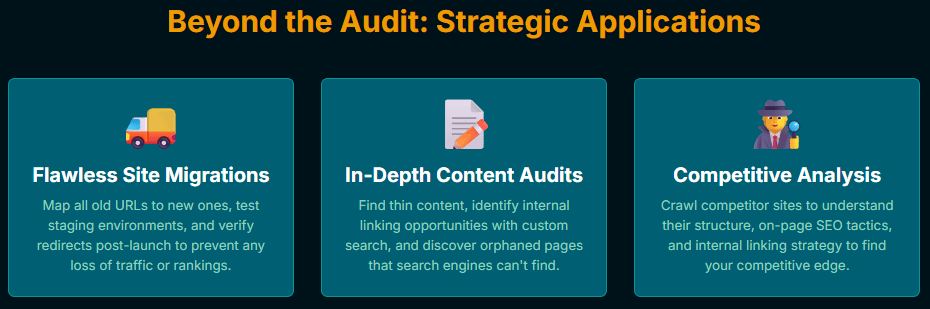

Screaming Frog’s utility extends beyond basic technical audits, offering powerful capabilities for strategic SEO initiatives that impact various aspects of a website’s performance.

Streamlining Site Migrations

Screaming Frog is an invaluable asset throughout the entire site migration process, from pre-migration planning to post-launch verification.1 Site migrations are inherently high-risk for SEO, often leading to significant drops in organic visibility if not managed perfectly.

Pre-Migration Audits & Data Collection: Before a migration, a complete crawl of the existing live website should be performed and stored as a benchmark.1 This crawl is crucial for verifying redirects post-launch and serves as a data backup for diagnosing any issues that may arise during the migration.1 It is essential to adjust the crawl configuration to collect additional data, such as hreflang, structured data, and AMP, which may be needed later.1 Integrating XML Sitemaps and connecting to Google Analytics and Search Console ensures that important orphan pages are not overlooked.1 User and conversion data from GA, search query performance data from GSC, and link data from tools like Ahrefs, Majestic, or Moz can be gathered to prioritize high-value pages.1

Testing Staging Environments & URL Mapping: Crawling the staging website allows for a thorough review of data and identification of differences, problems, and opportunities before launch.1 Screaming Frog can bypass common staging site restrictions (e.g., robots.txt, authentication) with appropriate configuration.1 The ‘Compare’ mode, coupled with ‘URL Mapping’, enables a direct comparison between the staging and live environments, even if URL structures have changed significantly.1 This feature allows for the comparison of overview data, issues, opportunities, site structure, and change detection between the two crawls.1

Identifying Content & Element Changes with Change Detection: The ‘Change Detection’ feature within ‘Compare’ mode is vital for monitoring alterations between crawls.1 It alerts users to changes in elements like page titles, meta descriptions, H1s, word count, crawl depth, and internal/external links.1 This is particularly useful for ensuring that critical elements remain consistent or change as intended during a migration.1 For example, current and previous page titles can be viewed side-by-side to analyze modifications.1

Post-Migration Verification & Redirect Audits: After launch, crawl the new website to confirm crawlability and indexability.1 It is critical to ensure that the site and key pages are not blocked by robots.txt or noindex directives.1 Every URL intended to rank should show ‘Indexable’ in the ‘Indexability’ column.1 A crucial step is auditing the 301 permanent redirects from old to new URLs, so that indexing and link signals pass correctly and organic visibility is not lost.1 To do this, switch to ‘List’ mode, enable ‘Always Follow Redirects’, and upload the list of old URLs to crawl.1 The ‘All Redirects’ report provides a comprehensive map of redirects, including the final destination URL and any redirect chains.1
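Checking a migration’s redirects comes down to comparing what each old URL actually returns against the planned redirect map. The minimal sketch below uses hypothetical data for both. It inspects only the first hop, so a chain that eventually reaches the right page is reported as a mismatch, which is usually worth fixing anyway.

```python
def verify_migration(mapping, responses):
    """Check that each old URL 301-redirects straight to its mapped new URL.

    `mapping`   old URL -> expected new URL (the planned redirect map).
    `responses` old URL -> (status code, Location header or None), as observed
                when crawling the old URLs (hypothetical data here).
    """
    problems = {}
    for old, expected in mapping.items():
        status, location = responses.get(old, (None, None))
        if status != 301:
            problems[old] = f"expected 301, got {status}"
        elif location != expected:
            problems[old] = f"redirects to {location}, expected {expected}"
    return problems


mapping = {
    "https://old.example.com/shoes": "https://www.example.com/footwear",
    "https://old.example.com/about": "https://www.example.com/about-us",
    "https://old.example.com/blog": "https://www.example.com/journal",
}
responses = {
    "https://old.example.com/shoes": (301, "https://www.example.com/footwear"),
    "https://old.example.com/about": (302, "https://www.example.com/about-us"),
    "https://old.example.com/blog": (301, "https://www.example.com/"),
}
for url, problem in verify_migration(mapping, responses).items():
    print(url, "->", problem)
```

The /blog case, a blanket redirect to the homepage, is a common migration shortcut that discards the old page’s relevance.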

Used this way, Screaming Frog turns a site migration from a high-stakes gamble into a controlled, data-driven process. It acts as a safety net through planning, execution, and verification, which reduces risk and preserves SEO continuity. This proactive management helps prevent severe organic traffic losses and protects accumulated domain authority, which is paramount for business stability during such a critical transition.

Conducting Comprehensive Content Audits

Screaming Frog offers robust capabilities for analyzing website content, extending beyond just technical elements.

Analyzing Content Quality & Readability: The tool can assess content quality by providing word counts, average words per sentence, and Flesch Reading Ease scores, helping to identify low-content pages or those with difficult readability.1 Spelling and grammar checks can also be enabled to flag errors within HTML pages and even PDFs.1 To perform this, ensure ‘Store HTML’ and ‘Store PDF’ (for PDFs) are enabled under Config > Spider > Extraction, then enable ‘Spell Check’ and ‘Grammar Check’ under Config > Content > Spelling & Grammar.1
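For reference, the standard Flesch Reading Ease formula is shown below, with a worked example for a hypothetical page of 300 words, 15 sentences, and 450 syllables (20 words per sentence, 1.5 syllables per word). Higher scores indicate easier text. Because syllables are counted heuristically, scores can vary slightly between tools.

```latex
\mathrm{FRE} = 206.835 - 1.015\,\frac{\text{words}}{\text{sentences}} - 84.6\,\frac{\text{syllables}}{\text{words}}

% Hypothetical page: 300 words, 15 sentences, 450 syllables
\mathrm{FRE} = 206.835 - 1.015(20) - 84.6(1.5) = 206.835 - 20.3 - 126.9 = 59.635
```

The second term shows that shortening sentences raises the score, while the third shows that long, polysyllabic words pull it down much more sharply.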

Identifying Internal Linking Opportunities: Screaming Frog is instrumental in identifying internal linking opportunities to strengthen content and improve PageRank flow.1 The ‘Custom Search’ feature can be used to find instances of target keywords (without existing anchor tags) on a website.1 This helps identify relevant pages that can link to “deeply buried” or “weakly linked” important pages, improving their accessibility and organic visibility.1 The ‘Link Score’ metric also helps determine a page’s relevance based on internal links, aiding in prioritizing pages for link building.1
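The idea behind searching for unlinked keyword mentions can be sketched in a few lines: remove existing anchor elements from each page, then look for the keyword in what remains. The pages and keyword below are hypothetical. Stripping anchors with a regex is adequate for a sketch but not robust against arbitrary HTML.

```python
import re


def unlinked_mentions(pages, keyword):
    """Return URLs whose HTML mentions `keyword` outside of any <a> element.

    `pages` maps URL -> HTML (hypothetical). Anchors are removed with a
    simple regex before searching for the keyword as whole words.
    """
    anchor = re.compile(r"<a\b[^>]*>.*?</a>", re.IGNORECASE | re.DOTALL)
    word = re.compile(r"\b" + re.escape(keyword) + r"\b", re.IGNORECASE)
    hits = []
    for url, html in pages.items():
        text_outside_links = anchor.sub(" ", html)
        if word.search(text_outside_links):
            hits.append(url)
    return hits


pages = {
    "/blog/marathon-training": "<p>Good trail running shoes reduce injury risk.</p>",
    "/blog/race-day": '<p>See our <a href="/trail-running-shoes">trail running shoes</a>.</p>',
    "/blog/nutrition": "<p>Carbohydrate loading before a race.</p>",
}
print(unlinked_mentions(pages, "trail running shoes"))  # ['/blog/marathon-training']
```

Each hit is a candidate page for a new contextual link to the target page.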

Discovering Orphaned Pages: Orphaned pages are those that are not linked to internally from any other page on the website, making them difficult for search engines to discover and crawl.1 Screaming Frog can identify these pages by integrating data from external sources like Google Analytics, Search Console, or XML Sitemaps.1 The ‘Orphan URLs’ filter in the ‘Search Console’ or ‘Analytics’ tabs will highlight these pages.1 Once identified, these pages can either be linked internally, redirected, or removed if no longer relevant.1
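Conceptually, orphan detection is a set difference: it finds URLs known from sitemaps, GA, or GSC that the link-following crawl never reached. The minimal sketch below uses hypothetical URL sets. Real data needs consistent normalization (protocol, trailing slashes, parameters) before comparison; otherwise mismatched formats will look like orphans.

```python
def find_orphans(crawled, *external_sources):
    """URLs listed by external sources (sitemaps, GA, GSC) but never reached by the crawl."""
    known = set().union(*external_sources)
    return sorted(known - set(crawled))


crawled = {"/", "/shoes", "/about"}
sitemap = {"/", "/shoes", "/about", "/spring-sale"}
analytics = {"/", "/shoes", "/old-landing-page"}
search_console = {"/shoes", "/spring-sale"}

print(find_orphans(crawled, sitemap, analytics, search_console))
# ['/old-landing-page', '/spring-sale']
```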

Auditing PDF Documents: Screaming Frog can crawl and parse PDF files, extracting document properties, content, and links.1 This allows for auditing broken links within PDFs, reviewing their content for word count, readability, spelling, and grammar, and extracting properties like subject, author, and creation date.1 The tool can also bulk save PDF documents and export their raw text content.1

This aspect of Screaming Frog moves beyond pure technical health into content strategy and optimization. By identifying weak content (thin, hard-to-read, or orphaned pages) and internal linking opportunities, SEO professionals can directly improve the discoverability and authority of their content assets. It ensures that valuable content is not only technically sound but also strategically linked and accessible, maximizing its organic performance and user engagement, ultimately contributing to a more robust content ecosystem.

Competitive Analysis

Screaming Frog extends its utility beyond one’s own website, allowing for effective competitive analysis. By crawling a competitor’s website, SEO professionals can gain valuable insights into their site structure, internal linking strategies, and on-page optimization tactics.1 The tool can reveal what meta information, H1s, and H2s competitors are using, potentially uncovering new keywords or content angles.1 It can also indicate which of their pages are considered most important based on the volume of internal links they receive.1 While Screaming Frog provides raw data for competitive analysis, it requires manual interpretation to derive actionable strategies.1

This is not just about knowing what competitors are doing; it is about reverse-engineering their successful strategies to inform one’s own. By analyzing their site architecture and on-page elements, SEO professionals can identify gaps in their own strategy, discover new content opportunities, or refine their internal linking to compete more effectively. It provides a data-driven foundation for competitive intelligence, allowing businesses to gain a strategic edge in the SERPs by learning from and adapting to their top rivals.
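One way to turn a competitor crawl into the "most internally linked pages" signal is to count distinct linking pages per destination. The sketch assumes a CSV export of link rows with `Type`, `Source`, and `Destination` columns, similar to Screaming Frog's inlinks bulk export; check the headers in your own export before relying on it. Repeated links from the same source page are counted once so that navigation repeats do not dominate.

```python
import csv
import io
from collections import Counter

def top_linked_pages(inlinks_csv: str, n: int = 5) -> list[tuple[str, int]]:
    """Rank destinations by the number of distinct pages that hyperlink to them."""
    reader = csv.DictReader(io.StringIO(inlinks_csv))
    # Assumed column names; rows without a Type column are treated as hyperlinks.
    pairs = {
        (row["Source"], row["Destination"])
        for row in reader
        if row.get("Type", "Hyperlink") == "Hyperlink"
    }
    counts = Counter(destination for _, destination in pairs)
    # Sort by count (descending), then URL, so ties come out in a stable order.
    return sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))[:n]
```

The top of this list is a rough proxy for the pages a competitor treats as most important; interpreting why remains the manual step described above.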

Real-World Impact: Case Studies in Action

The practical application of Screaming Frog SEO Spider has yielded significant improvements in various real-world scenarios, demonstrating its profound impact on website performance and SEO outcomes.

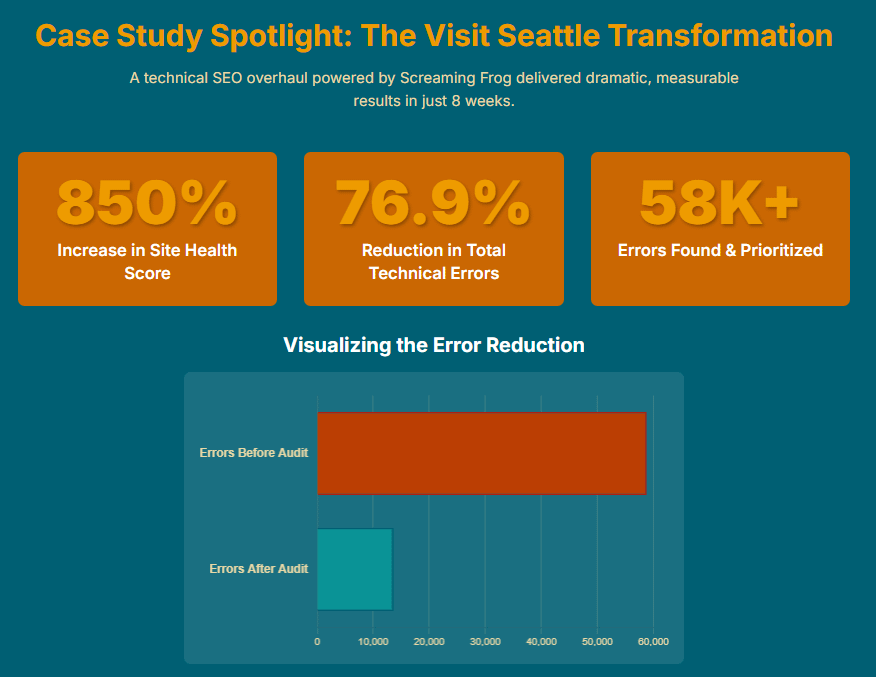

Visit Seattle: A Technical SEO Overhaul

Gravitate Design’s technical SEO case study for Visit Seattle highlights Screaming Frog’s instrumental role in a major site health improvement project.1 The website faced challenges including reduced visibility, missed opportunities due to outdated content, and low engagement rates.1

Initial Audit & Error Identification: Gravitate’s team initiated a comprehensive website analysis using Screaming Frog, alongside Ahrefs. This initial crawl uncovered a staggering 58,785 technical errors that were significantly hindering the site’s performance.1 High-priority issues identified included 404 errors, broken links, redirect chains, missing canonical tags, sitemap errors, orphaned pages, non-secure HTTP issues, and meta tag problems.1 These errors were systematically organized by their SEO impact, with critical issues prioritized for remediation.1

Sitemap Optimization: Screaming Frog was again crucial in auditing Visit Seattle’s sitemap structure. The audit revealed a bloated structure with 29 sitemaps containing outdated and duplicate content, which negatively impacted crawl efficiency.1 Leveraging the data from Screaming Frog, the team streamlined the sitemaps from 29 to 6 core sitemaps, removed 13 outdated and broken sitemaps, and noindexed 8 unnecessary ones.1 Additionally, canonical tags were added to 1,611 member listings to resolve duplicate content conflicts.1 This strategic cleanup, guided by Screaming Frog’s findings, aimed to focus search engines on indexing high-value pages, thereby improving crawl efficiency and rankings.1

Results: The 8-week SEO campaign delivered remarkable improvements. Visit Seattle’s site health score jumped from 8 to 76, an 850% improvement.1 The total number of technical issues dropped by 76.9%, from 58,785 to 13,609, and error pages fell from 7,817 to 616.1 This transformation turned a bloated website into a lean, ranking-optimized machine.1

This case study powerfully demonstrates that a systematic and thorough technical SEO audit, enabled by Screaming Frog, can be truly transformative. It highlights that even large, complex websites can harbor a massive number of hidden technical issues that severely impede performance. The dramatic improvements in health score and error reduction illustrate the direct correlation between technical hygiene and organic visibility, underscoring the immense return on investment achievable through dedicated technical SEO efforts.
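The headline percentages in the Visit Seattle results follow from a simple percentage-change calculation, which is also a handy way to report before-and-after audit numbers of your own. The sketch reuses the figures quoted above. Computed exactly, the drop in technical issues is about 76.85%, which the case study reports rounded up to 76.9%.

```python
def pct_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`; negative values are reductions."""
    return (after - before) / before * 100

# Before/after figures as quoted in the Visit Seattle case study.
metrics = {
    "site health score": (8, 76),
    "technical issues": (58_785, 13_609),
    "error pages": (7_817, 616),
}

for name, (before, after) in metrics.items():
    print(f"{name}: {pct_change(before, after):+.2f}%")
```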

guitarguitar: Enhancing Internal Linking & User Experience

The case of guitarguitar, a large e-commerce website, illustrates Screaming Frog’s utility in improving internal linking and user experience.1

Challenge: guitarguitar faced issues with key pages being several clicks deep from the homepage, leading to poor user experience and reduced PageRank flow to these important pages.1 While Screaming Frog’s crawl depth reporting identified these deeply buried pages, a quick solution was needed to identify linking opportunities across the vast website.1

Strategy with Custom Search: The client leveraged Screaming Frog’s custom search feature. They compiled a list of primary, secondary, and tertiary keywords for each deep page and used the custom search to find instances of these keywords on other pages that did not yet contain a hyperlink to the target page.1 This significantly accelerated the process of identifying internal linking opportunities.1

Results: The implementation of these internal linking improvements led to substantial enhancements in crawl depth and internal link distribution. The volume of pages located 3+ clicks away from the homepage continually declined.1 These changes positively impacted organic performance for key pages and across the entire site, leading to improved UX metrics such as reduced bounce rate and increased pages per session in Google Analytics.1 Screaming Frog proved to be a pivotal platform for efficient broken link fixing and internal linking opportunity identification on a large scale.1

This case study showcases that Screaming Frog is not just for fixing technical errors; it is a powerful tool for proactive optimization and strategic internal linking. It demonstrates how identifying and leveraging internal linking opportunities can directly improve PageRank flow, enhance content discoverability, and significantly boost user experience metrics, leading to a healthier and more performant website even without major “errors” being present. It highlights the tool’s versatility in driving continuous improvement.
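The "3+ clicks from the homepage" measure used in the guitarguitar results is a shortest-path depth over the internal link graph, which a breadth-first search computes directly. The sketch below is conceptually similar to crawl depth reporting but is not Screaming Frog's code; the link graph and URLs are hypothetical.

```python
from collections import deque

def click_depths(links: dict[str, list[str]], home: str) -> dict[str, int]:
    """Breadth-first search from the homepage; depth = minimum number of clicks."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

def deep_pages(depths: dict[str, int], threshold: int = 3) -> list[str]:
    """Pages at or beyond `threshold` clicks from the homepage."""
    return sorted(url for url, depth in depths.items() if depth >= threshold)

# Hypothetical site structure: one product page sits three clicks deep.
site = {
    "/": ["/guitars", "/amps"],
    "/guitars": ["/guitars/electric"],
    "/guitars/electric": ["/guitars/electric/fender-strat"],
}
print(deep_pages(click_depths(site, "/")))
```

Adding a single contextual link from a shallow page (for example, linking the homepage to the product page) reduces that page's depth to 1, which is exactly the kind of change the custom search strategy surfaces at scale.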

Frequently Asked Questions (FAQs)

This section addresses common questions about Screaming Frog SEO Spider, from licensing to troubleshooting. Answering these practical and technical hurdles up front makes the tool easier to adopt and helps users get more value from it in their SEO work.

Licensing & Usage

Is there a free version?

Yes, Screaming Frog offers a free version that allows crawling up to 500 URLs. This version provides basic features suitable for small-scale sites. To audit larger sites or access advanced features and configurations, a paid license is required.1

How much does a paid license cost?

A paid license typically starts around $279 per year. This removes the 500 URL crawl limit and unlocks all advanced capabilities, including saving crawls, custom extraction, and API integrations.1

How many users per license?

Each Screaming Frog license is for a single authorized individual user. While a single user can install the software on multiple devices, simultaneous use by different individuals will result in the license being blocked. Discounts are available for purchases of 5 or more licenses.1

What happens when a license expires?

Upon expiry, the SEO Spider reverts to the restricted free version, limiting crawls to 500 URLs, disabling configuration options, and preventing access to previously saved crawls. A new license purchase is necessary to restore full functionality.1

Why is my license key invalid?

The most common reason is incorrect entry. Users should ensure they copy and paste the exact username (which is typically lowercase and may differ from an email address) and license key provided in their account or license email. It is also crucial to ensure the correct license type (SEO Spider vs. Log File Analyser) is used for the respective tool.1

Installation & Performance

What are the recommended hardware specifications for large crawls?

For crawling millions of URLs, a 64-bit operating system, a Solid State Drive (SSD) of 500GB or 1TB, and 16GB of RAM are highly recommended. Utilizing database storage mode is essential for optimal performance on large crawls.1

Why does the installer take a while to start?

This is often due to security software like Windows Defender performing a scan. Running the installer directly from the downloads folder can sometimes expedite the process.1

How do I increase memory allocation?

Memory allocation can be adjusted within the application settings (File > Settings > Memory Allocation). For crawls up to 2 million URLs, allocating 4GB of RAM is recommended. It is advisable to leave at least 2-4GB of free RAM for the system. A restart of the application is required for changes to take effect.1

Can it crawl JavaScript websites?

Yes, Screaming Frog can render JavaScript-heavy pages using its integrated Chromium browser, allowing it to discover content and links that are built dynamically. This feature is available with a paid license.1

Troubleshooting Common Issues

How do I fix internal “No Response” issues?

These occur when internal URLs fail to return an HTTP response, often due to connection timeouts, errors, or refused connections. Solutions include checking for malformed URLs, adjusting user-agents, lowering crawl speed, temporarily disabling firewalls/proxies, or allowlisting the IP address on security platforms.1
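When a URL shows "No Response" in a crawl, it can help to request it outside the tool to tell a malformed URL, a timeout, and a refused connection apart. The sketch below uses only Python's standard library; the function names and the default User-Agent string are hypothetical, and a HEAD request may be treated differently by some servers than the GET requests a crawler sends.

```python
import socket
import urllib.error
import urllib.request
from urllib.parse import urlsplit

def is_malformed(url: str) -> bool:
    """Flag URLs without an http(s) scheme or host, or containing raw spaces."""
    parts = urlsplit(url)
    return parts.scheme not in ("http", "https") or not parts.netloc or " " in url

def diagnose(url: str, user_agent: str = "Mozilla/5.0 (compatible; audit-sketch)",
             timeout: float = 10.0) -> str:
    if is_malformed(url):
        return "malformed URL"
    req = urllib.request.Request(url, method="HEAD", headers={"User-Agent": user_agent})
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return f"HTTP {resp.status}"
    except urllib.error.HTTPError as err:  # the server responded, just not with 2xx
        return f"HTTP {err.code}"
    except urllib.error.URLError as err:  # no HTTP response at all
        if isinstance(err.reason, socket.timeout):
            return "no response: timeout"
        if isinstance(err.reason, ConnectionRefusedError):
            return "no response: connection refused"
        return f"no response: {err.reason}"
    except socket.timeout:
        return "no response: timeout"
    except OSError as err:  # e.g. connection reset mid-request
        return f"no response: {err}"
```

If a URL responds with a browser-like User-Agent but not with the crawler's, or only at lower request rates, that points toward the user-agent, crawl speed, or allowlisting fixes listed above.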

How do I address “Mixed Content” issues?

Mixed content refers to HTTPS pages loading insecure HTTP resources. Screaming Frog identifies these in the ‘Security’ tab under the ‘Mixed Content’ filter. Remediation involves updating all insecure HTTP resources to HTTPS.1
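The check behind a mixed content filter can be approximated by resolving each subresource URL on an HTTPS page and flagging any that use `http://`. This is a non-exhaustive sketch with hypothetical names: it only inspects a handful of tag attributes in static HTML, so resources referenced from CSS `url()` or injected by JavaScript will not be seen.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin

# Tag -> attribute that makes the browser fetch a subresource (non-exhaustive).
RESOURCE_ATTRS = {
    "img": "src", "script": "src", "iframe": "src", "audio": "src",
    "video": "src", "source": "src", "link": "href",
}

class MixedContentFinder(HTMLParser):
    def __init__(self, page_url: str):
        super().__init__()
        self.page_url = page_url
        self.insecure: list[str] = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "link" and "stylesheet" not in (attributes.get("rel") or "").lower():
            return  # e.g. rel="canonical" links are not fetched as subresources
        attr = RESOURCE_ATTRS.get(tag)
        value = attributes.get(attr) if attr else None
        if value:
            resolved = urljoin(self.page_url, value)  # handles relative and //host URLs
            if resolved.startswith("http://"):
                self.insecure.append(resolved)

def find_mixed_content(page_url: str, html: str) -> list[str]:
    if not page_url.startswith("https://"):
        return []  # mixed content only applies to pages served over HTTPS
    finder = MixedContentFinder(page_url)
    finder.feed(html)
    return finder.insecure
```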

How do I resolve “Duplicate Page Title” or “Missing H1” issues?

Navigate to the ‘Page Titles’ or ‘H1’ tabs, respectively. Filters like ‘Duplicate’ or ‘Missing’ will highlight the problematic URLs. The solution involves crafting unique, descriptive page titles and ensuring each page has a single, relevant H1 tag.1
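The logic behind the ‘Duplicate’ and ‘Missing’ filters can be sketched by grouping pages on their title text and counting `<h1>` elements per page. This is an illustration with hypothetical names, assuming page HTML is available in a dictionary keyed by URL; it treats titles as duplicates when they match exactly after collapsing whitespace, which may not match the tool's own rules in every edge case.

```python
from collections import defaultdict
from html.parser import HTMLParser

class TitleH1Parser(HTMLParser):
    """Records the <title> text and the number of <h1> elements on a page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.h1_count = 0
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
        elif tag == "h1":
            self.h1_count += 1

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data

def audit_titles_and_h1s(pages: dict[str, str]) -> dict[str, list]:
    by_title = defaultdict(list)
    missing_h1, multiple_h1 = [], []
    for url, html in pages.items():
        parser = TitleH1Parser()
        parser.feed(html)
        title = " ".join(parser.title.split())  # collapse runs of whitespace
        if title:
            by_title[title].append(url)
        if parser.h1_count == 0:
            missing_h1.append(url)
        elif parser.h1_count > 1:
            multiple_h1.append(url)
    duplicates = [urls for urls in by_title.values() if len(urls) > 1]
    return {"duplicate_titles": duplicates, "missing_h1": missing_h1, "multiple_h1": multiple_h1}
```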

Conclusion: Empowering Your SEO Journey with Screaming Frog

Screaming Frog SEO Spider stands as an unparalleled tool in the arsenal of any SEO professional. Its core strength lies in its ability to mimic search engine behavior, providing a precise, comprehensive, and actionable view of a website’s technical health.1 This direct emulation allows for the proactive identification and remediation of issues, ensuring that a site is optimally configured for crawlability and indexability.1

The tool’s extensive feature set, from its granular crawl controls and robust JavaScript rendering to its powerful API integrations and advanced custom extraction capabilities, transforms raw data into strategic intelligence.1 The categorization of issues by priority, coupled with visual representations and flexible export options, ensures that SEO efforts are focused on high-impact areas, optimizing resource allocation and driving tangible improvements in organic performance.1

Real-world case studies, such as the technical overhaul of Visit Seattle and the internal linking enhancements for guitarguitar, illustrate the profound impact Screaming Frog can have on website visibility, user experience, and overall business objectives.1 Mastering Screaming Frog SEO Spider is not merely an advantage but a necessity for any organization committed to maximizing its online presence.1 It empowers teams to conduct thorough audits, streamline complex processes like site migrations, and gain a competitive edge through deep data analysis.1 As the digital landscape continues to evolve, the ability to understand and optimize a website from a search engine’s perspective, as facilitated by Screaming Frog, remains paramount for sustained organic growth and success.1 This guide serves as a foundational pillar, laying the groundwork for more specialized explorations into leveraging this powerful tool for complete technical SEO audits, demystifying crawl settings, integrating with analytics platforms, extracting structured data, and comparing it with other industry-leading solutions.1