The Strategic Imperative: Understanding Crawl Budget in the Modern Web

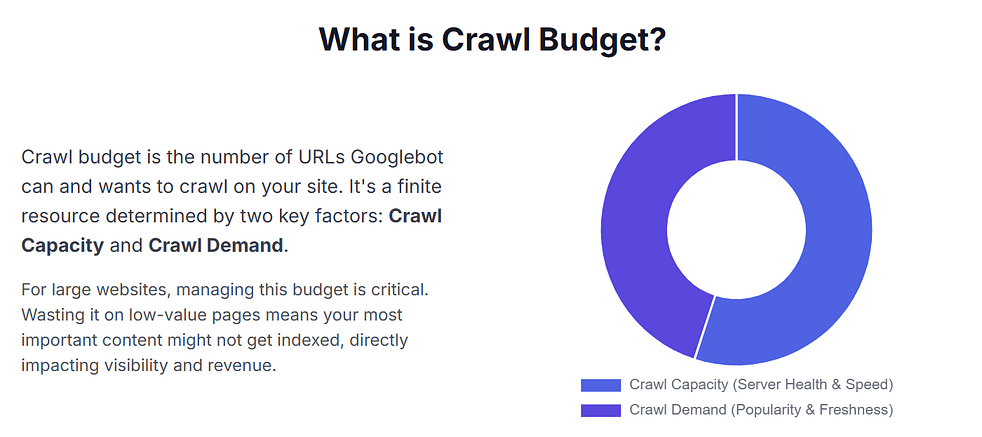

The digital landscape is a vast and ever-expanding space, far exceeding the capacity of search engines to explore and index every available URL.1 For this reason, search engines like Google must be discerning with the time and resources they allocate to each website. This allocation is a site’s “crawl budget,” a fundamental concept in technical SEO.2 However, to truly understand its significance, it must be viewed not merely as a technical metric but as a strategic resource for large, complex websites. The crawl budget represents the set of URLs that Googlebot both can crawl and wants to crawl, which is why maximizing your crawl budget matters so much.1 It is a resource that, when squandered, can have direct and measurable business consequences.

The allocation of a site’s crawl budget is governed by two primary, interlocking elements: crawl capacity limit and crawl demand.1 The crawl capacity limit is a function of a site’s technical health. Googlebot is designed to crawl a site without overwhelming its servers and will dynamically adjust its crawl rate to prevent performance degradation.1 If a site responds quickly and consistently, the limit can increase, allowing Googlebot to use more parallel connections to fetch pages. Conversely, if the site slows down or begins to return server errors, the limit decreases, and Googlebot will crawl less to avoid placing additional stress on the server.1 This dynamic behaviour means that a site’s technical performance has a direct and immediate impact on the crawl resources Google is willing to devote to it.1

On the other hand, crawl demand is Google’s assessment of how much it wants to crawl a site based on its perceived value and freshness.1 This demand is heavily influenced by factors such as a site’s popularity, indicated by inbound links and traffic, which signals that its content is valuable and should be kept fresh in the index.1 Similarly, the frequency of content updates and the staleness of existing content also play a significant role in determining how often Google’s systems desire to re-crawl a site.1 When Google’s crawl capacity and crawl demand are considered together, they define the precise crawl budget for a site, and a low crawl demand can lead to less crawling, even if the site’s servers could handle more.1

While the principles of crawl budget apply to all websites, the necessity for maximizing your crawl budget is not universal. For most small-to-medium sites—defined by Google as having fewer than a few thousand URLs with new content published weekly—a dedicated focus on crawl budget is often unnecessary.4 Google’s systems are typically able to crawl and index new content quickly without issue. However, for very large and dynamic sites, such as e-commerce platforms with millions of products, news publishers with constantly changing content, or sprawling enterprise websites, crawl budget management is a crucial strategic discipline.4

A mismanaged crawl budget on such a site creates a significant lost opportunity cost. When Googlebot’s limited crawl resources are expended on low-value, irrelevant, or duplicate URLs, it means those resources are not being spent on high-value, revenue-driving pages.4 This can lead to critical business problems, such as delays in the indexing of new products or articles, or high-value content becoming stale in search results. A common symptom of this is a significant number of “Discovered – currently not indexed” URLs in Google Search Console, which can indicate that the site has a backlog of pages that Google has found but has not yet had the resources to crawl and index.6 The resources “wasted” on unimportant pages represent a direct loss of potential visibility and organic traffic, underscoring the importance of directing Google’s attention to the content that matters most.7

The Enemies of Efficiency: Diagnosing Crawl Budget Waste and Maximizing Your Crawl Budget

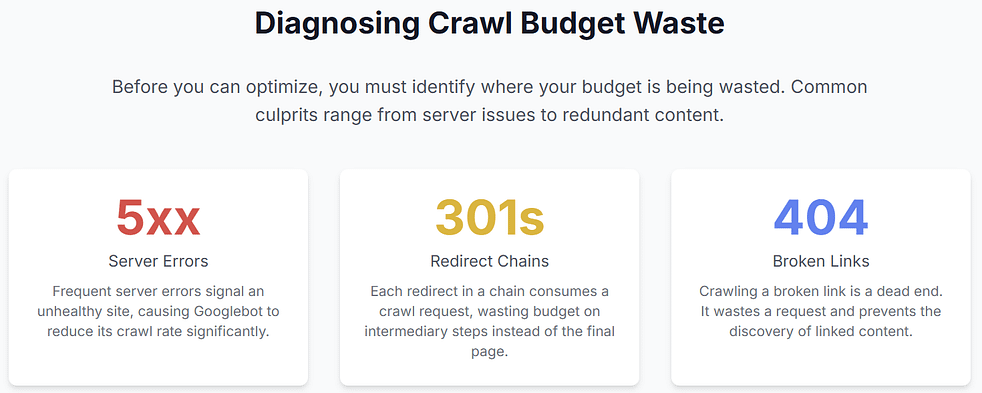

Before a site’s crawl budget can be optimized, the underlying issues causing its waste must be identified. These issues can be broadly categorized into technical and server-side obstacles, and content-related or structural inefficiencies. A single issue can be problematic, but it is often the cumulative, interlocking nature of multiple minor problems that creates a critical crawl budget deficit.

Identifying Technical & Server-Side Obstacles

A high-performance technical foundation is a prerequisite for a healthy crawl budget. One of the most common issues is a slow server response time, commonly measured as Time to First Byte (TTFB). A server that is slow to respond to requests is a direct signal for Googlebot to slow its crawl rate, as the system wants to avoid causing server overload.1 If the site’s host consistently encounters errors, such as DNS resolution or server connectivity issues, Googlebot may scale back its crawling significantly.9 Similarly, the size and speed of a page’s resources are critical. Every URL that Googlebot crawls, including HTML, CSS, JavaScript, and image files, counts against the crawl budget.1 Unoptimized, large files increase download time and place an unnecessary burden on the crawl capacity.5
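To see response times roughly the way a crawler does, you can sample them yourself. The following is a minimal Python sketch using only the standard library: `measure_ttfb` times one GET request until the status line and headers arrive (including connection setup), and `summarize_latency` reduces a set of samples to a median, a nearest-rank 95th percentile, and the share of slow responses. The function names, the user-agent string, and the 200 ms default threshold are illustrative assumptions, not values Google publishes.

```python
import http.client
import math
import time
from statistics import median
from urllib.parse import urlsplit


def measure_ttfb(url: str, timeout: float = 10.0) -> tuple[int, float]:
    """Issue one GET and return (status, milliseconds until headers arrive).

    The timer includes connection setup (DNS, TCP, TLS), which is roughly
    what a fresh crawler connection experiences.
    """
    parts = urlsplit(url)
    connection_class = (
        http.client.HTTPSConnection if parts.scheme == "https" else http.client.HTTPConnection
    )
    conn = connection_class(parts.netloc, timeout=timeout)
    target = parts.path or "/"
    if parts.query:
        target += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.request("GET", target, headers={"User-Agent": "ttfb-audit-sketch"})
        response = conn.getresponse()  # returns once the status line and headers are read
        elapsed_ms = (time.perf_counter() - start) * 1000
        response.read()  # drain the body before closing
        return response.status, elapsed_ms
    finally:
        conn.close()


def summarize_latency(samples_ms: list[float], slow_threshold_ms: float = 200.0) -> dict:
    """Median, nearest-rank 95th percentile, and share of samples above the threshold."""
    if not samples_ms:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ms)
    p95_index = max(0, math.ceil(0.95 * len(ordered)) - 1)
    return {
        "median_ms": median(ordered),
        "p95_ms": ordered[p95_index],
        "slow_share": sum(s > slow_threshold_ms for s in ordered) / len(ordered),
    }
```

For example, `summarize_latency([measure_ttfb("https://www.example.com/")[1] for _ in range(10)])` would sample a homepage ten times, with `www.example.com` standing in for your own host. A handful of samples from one location is only a rough proxy for what Googlebot sees, so compare it with the average response time in Search Console’s Crawl Stats report.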

Long redirect chains are another significant technical impediment to crawl efficiency.1 A chain of redirects, where URL A redirects to URL B, which then redirects to URL C, forces Googlebot to make multiple requests to access a single piece of content. This process wastes valuable crawl budget and can slow down the overall crawling process.1 Eliminating these chains by redirecting all legacy URLs directly to their final destination can significantly improve crawl efficiency.
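Flattening chains is mostly bookkeeping over a redirect map. A minimal Python sketch, assuming your redirects are available as a hypothetical `{source_path: target_path}` dictionary (for example, exported from server configuration or a CMS): `flatten_redirects` resolves every source to its final destination so each rule can point there in one hop, and `chained_sources` lists the sources that currently need more than one hop.

```python
def flatten_redirects(redirects: dict[str, str], max_hops: int = 10) -> dict[str, str]:
    """Map every redirecting URL straight to its final destination.

    Raises ValueError on a loop or on a chain longer than max_hops.
    """
    flattened: dict[str, str] = {}
    for source, target in redirects.items():
        seen = {source}
        hops = 1
        # Keep following while the current target is itself redirected.
        while target in redirects:
            if target in seen or hops >= max_hops:
                raise ValueError(f"redirect loop or overly long chain starting at {source}")
            seen.add(target)
            target = redirects[target]
            hops += 1
        flattened[source] = target
    return flattened


def chained_sources(redirects: dict[str, str]) -> list[str]:
    """Sources whose first hop lands on another redirect, i.e. chains of 2+ hops."""
    return sorted(source for source, target in redirects.items() if target in redirects)
```

Given `{"/old-a": "/old-b", "/old-b": "/new"}`, the flattened map sends both `/old-a` and `/old-b` straight to `/new`. The hop limit also guards against loops such as `/x` → `/y` → `/x`, which the function reports as an error instead of following forever.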

Auditing Content-Related and Structural Inefficiencies

The most common source of crawl budget waste is redundant or low-value content. Duplicate content—whether from different URLs for the same product, tracking parameters, or mixed-protocol URLs—can confuse Googlebot and force it to spend valuable time crawling redundant pages.1 Google explicitly warns that a large inventory of duplicate or unimportant URLs “wastes a lot of Google crawling time”.1
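Before fixing duplicates, it helps to measure how many crawled URLs collapse onto the same page. The Python sketch below normalizes URLs by forcing HTTPS, lowercasing the host, dropping tracking parameters and fragments, and sorting the remaining query parameters, then groups the variants that share a normalized form. The tracking-parameter list and the HTTPS-only assumption are hypothetical, so adjust both to your site; paths are left untouched because URL paths are case-sensitive.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical list: adjust to the parameters your own site and tools append.
TRACKING_PARAMS = {"gclid", "fbclid", "sessionid"}
TRACKING_PREFIXES = ("utm_",)


def normalize_url(url: str) -> str:
    """Collapse common duplicate-URL variants onto one form (assumes an HTTPS-only site)."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in TRACKING_PARAMS and not key.lower().startswith(TRACKING_PREFIXES)
    ]
    kept.sort()  # parameter order alone should not create a "new" URL
    return urlunsplit(("https", parts.netloc.lower(), parts.path or "/", urlencode(kept), ""))


def group_duplicates(urls: list[str]) -> dict[str, list[str]]:
    """Return only the normalized URLs that more than one crawled variant maps to."""
    groups: dict[str, list[str]] = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return {normalized: variants for normalized, variants in groups.items() if len(variants) > 1}
```

Feeding in the URLs Googlebot actually requested (from server logs) shows which duplicate clusters are consuming the most crawl requests and therefore deserve canonical tags or parameter cleanup first.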

Beyond simple duplicates, many large websites suffer from “infinite space” URLs. These are dynamically generated URLs created by faceted navigation, on-site search filters, or user tracking parameters that can combine to create a nearly endless number of unique URLs.6 This problem can overwhelm a site’s crawl budget, as Googlebot attempts to crawl every URL it discovers, regardless of its value.3 The result is a crawl budget consumed by a myriad of low-value, non-indexable pages.
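The arithmetic behind “infinite space” is easy to underestimate. If each facet on a category page allows one selection or none, a facet with k values contributes (k + 1) options and the total number of filter URLs is the product of those options across facets; if each facet allows any combination of its values, each contributes 2^k instead. A small Python sketch of that count follows; the facet names and sizes in the usage note are made up for illustration, and real sites multiply the result further through sort orders, pagination, and parameter reordering.

```python
from math import prod


def facet_url_count(values_per_facet: dict[str, int], multi_select: bool = False) -> int:
    """Count the distinct filter states a faceted navigation can expose as URLs.

    Single-select: each facet is unset or set to one of its k values -> k + 1 options.
    Multi-select: any subset of a facet's k values can be active -> 2**k options.
    Sort orders, pagination and parameter reordering would multiply this further.
    """
    if multi_select:
        return prod(2 ** k for k in values_per_facet.values())
    return prod(k + 1 for k in values_per_facet.values())
```

For a hypothetical category with 10 colors, 8 sizes, and 25 brands, `facet_url_count({"color": 10, "size": 8, "brand": 25})` gives 11 × 9 × 26 = 2,574 single-select states, while `multi_select=True` gives 2^43, roughly 8.8 trillion.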

Finally, a poor site structure can create “orphaned pages,” which are URLs that are not linked to from any other page on the site. Without an internal link, Googlebot can only find these pages if they are included in an XML sitemap.7 Similarly, broken links (404 errors) waste crawl budget on dead ends, hindering Google’s ability to discover new content and navigate the site efficiently.11 A site with a slow server, a few hundred orphaned pages, and thousands of duplicate URLs from faceted navigation creates a perfect storm of inefficiency. The server is throttled, and the crawl demand is diluted by redundant content, leading to a critical failure in the site’s ability to get its most important content indexed promptly.
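Both problems can be found by comparing your sitemap with an internal link graph from a site crawl. In the Python sketch below, `link_graph` is a hypothetical `{page: set_of_linked_pages}` mapping and `status_by_url` a hypothetical map of last-seen HTTP status codes; `find_orphan_pages` returns sitemap URLs that no other page links to, and `find_broken_links` returns the links that point at 404 or 410 responses.

```python
def find_orphan_pages(
    sitemap_urls: set[str], link_graph: dict[str, set[str]], homepage: str
) -> set[str]:
    """Sitemap URLs that no other page links to (self-links do not count)."""
    linked: set[str] = set()
    for source, targets in link_graph.items():
        linked.update(target for target in targets if target != source)
    # The homepage is the crawl entry point, so it is never reported as orphaned.
    return set(sitemap_urls) - linked - {homepage}


def find_broken_links(
    link_graph: dict[str, set[str]], status_by_url: dict[str, int]
) -> set[tuple[str, str]]:
    """(source, target) pairs where the linked URL last returned 404 or 410."""
    return {
        (source, target)
        for source, targets in link_graph.items()
        for target in targets
        if status_by_url.get(target) in (404, 410)
    }
```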

The Expert’s Toolkit: How to Uncover and Measure Crawl Budget Issues

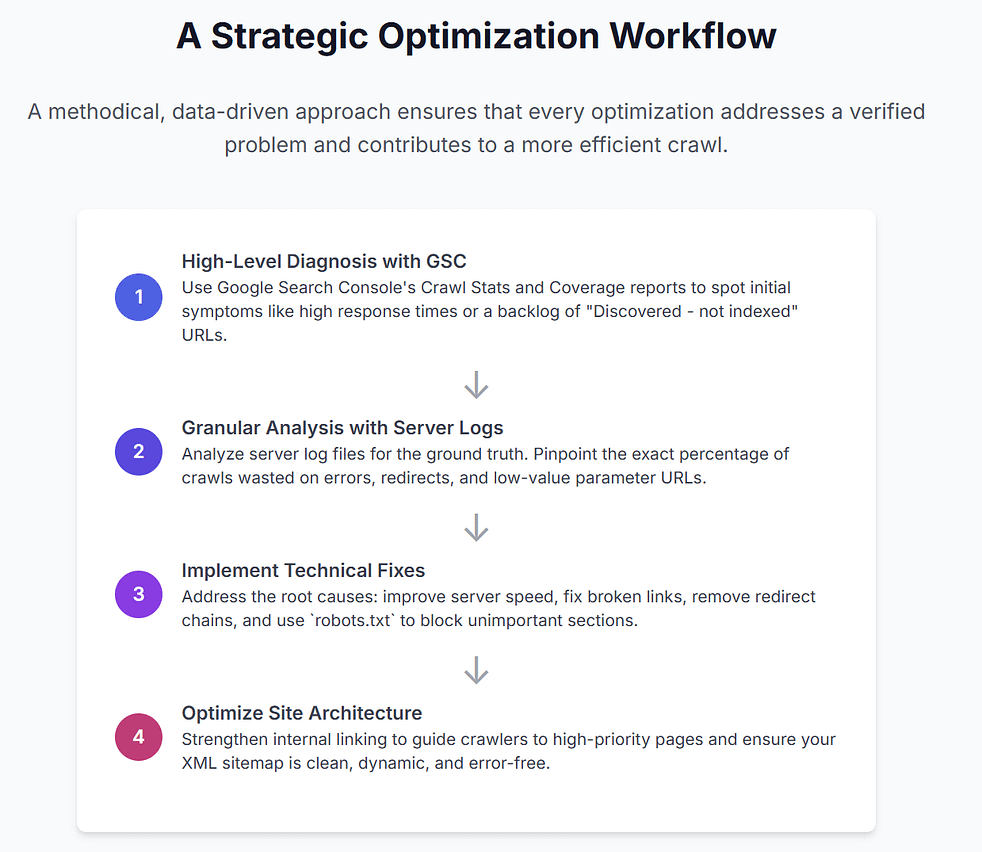

To move from identifying potential symptoms to diagnosing root causes, an expert SEO relies on a strategic hierarchy of diagnostic tools. The journey begins with high-level, aggregate data and progresses to granular, first-party data.

Leveraging Google Search Console for Core Insights on Maximizing Your Crawl Budget

Google Search Console (GSC) provides a suite of native tools for initial crawl budget diagnosis. The Crawl Stats report offers a high-level overview of how Googlebot is interacting with a site over the last 90 days. It provides metrics on total crawl requests, total download size, and average response time.9 Monitoring this report can reveal sudden dips in crawl requests or consistently high average response times, which are key indicators of a crawl capacity problem and barriers to maximizing your crawl budget.9
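Spotting a “sudden dip” is easier with a simple rule than by eye. The Python sketch below flags days whose crawl-request total falls below half of the median of the previous seven days. It assumes you already have daily totals as a list of integers (for example, counted from your server logs); the seven-day window and the 50% threshold are arbitrary starting points, not Google guidance.

```python
from statistics import median


def flag_crawl_dips(daily_requests: list[int], window: int = 7, threshold: float = 0.5) -> list[int]:
    """Indexes of days whose crawl requests fall below threshold x the trailing-window median."""
    flagged = []
    for day in range(window, len(daily_requests)):
        # The median of the preceding days is less distorted by one-off spikes than the mean.
        baseline = median(daily_requests[day - window:day])
        if baseline > 0 and daily_requests[day] < threshold * baseline:
            flagged.append(day)
    return flagged
```

A flagged day is a prompt to check the server’s error rate and response times for that date, since those are the signals that cause Googlebot to reduce its crawl rate.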

The Index Coverage report (now called the Page indexing report in Search Console) is another critical diagnostic tool. A significant number of URLs listed as “Discovered – currently not indexed” is often the first and most prominent signal of a crawl budget issue.6 This report can also identify server errors, soft 404s, and pages that are intentionally excluded but are still being crawled.1 For a more granular view, the URL Inspection Tool can be used to deep-dive into the crawl and index status of a specific URL, providing detailed information on how Google last crawled it, including whether crawling was allowed and which URL Google selected as canonical.13

The following table provides a quick reference for using GSC reports to diagnose crawl issues and maximize your crawl budget.

| GSC Report | What It Reveals About Crawl Budget | Potential Issues | Recommended Action |
| --- | --- | --- | --- |
| Crawl Stats | Crawl rate, response time, server health. | Server overload, slow page speed. | Check host status, optimize images & code. |
| Index Coverage | Number of indexed/non-indexed pages. | Backlog of un-indexed pages, server errors. | Fix server issues, address soft 404s. |
| URL Inspection | Per-URL crawl status and errors. | Crawl blocked by robots.txt, noindex tag. | Check the robots.txt report, remove unintended blocking rules or tags. |

The Gold Standard: Advanced Server Log File Analysis

For large, complex sites, the most definitive source of truth is a server log file analysis.8 While GSC provides aggregate data, server logs record every single request made to a website, providing unfiltered, first-party data on Googlebot’s behavior.16 This includes the exact URLs being crawled, the user agent of the bot, the timestamp of the request, and the HTTP status code of the server’s response.15
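As a starting point for log analysis, the Python sketch below parses lines in the widely used Apache/Nginx “combined” log format and keeps the requests whose user agent mentions Googlebot. The regular expression assumes that exact layout, so adapt it if your server logs extra fields. Because anyone can send a Googlebot user-agent string, Google recommends verifying crawler IPs with a reverse DNS lookup before trusting the results.

```python
import re
from dataclasses import dataclass
from typing import Iterable, Iterator

# Apache/Nginx "combined" log format; adjust if your server logs a different layout.
COMBINED_LOG = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<timestamp>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) (?P<size>\S+) '
    r'"(?P<referrer>[^"]*)" "(?P<user_agent>[^"]*)"'
)


@dataclass
class Hit:
    ip: str
    timestamp: str
    path: str
    status: int
    user_agent: str


def googlebot_hits(lines: Iterable[str]) -> Iterator[Hit]:
    """Yield parsed requests whose user agent claims to be Googlebot.

    The user-agent string can be spoofed; confirm the IPs with a reverse
    DNS lookup before relying on the numbers.
    """
    for line in lines:
        match = COMBINED_LOG.match(line)
        if match and "googlebot" in match["user_agent"].lower():
            yield Hit(
                ip=match["ip"],
                timestamp=match["timestamp"],
                path=match["path"],
                status=int(match["status"]),
                user_agent=match["user_agent"],
            )
```

Because `googlebot_hits` is a generator, it can stream a multi-gigabyte log file line by line, for example `list(googlebot_hits(open("access.log")))`, where `access.log` is a placeholder for your own log path.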

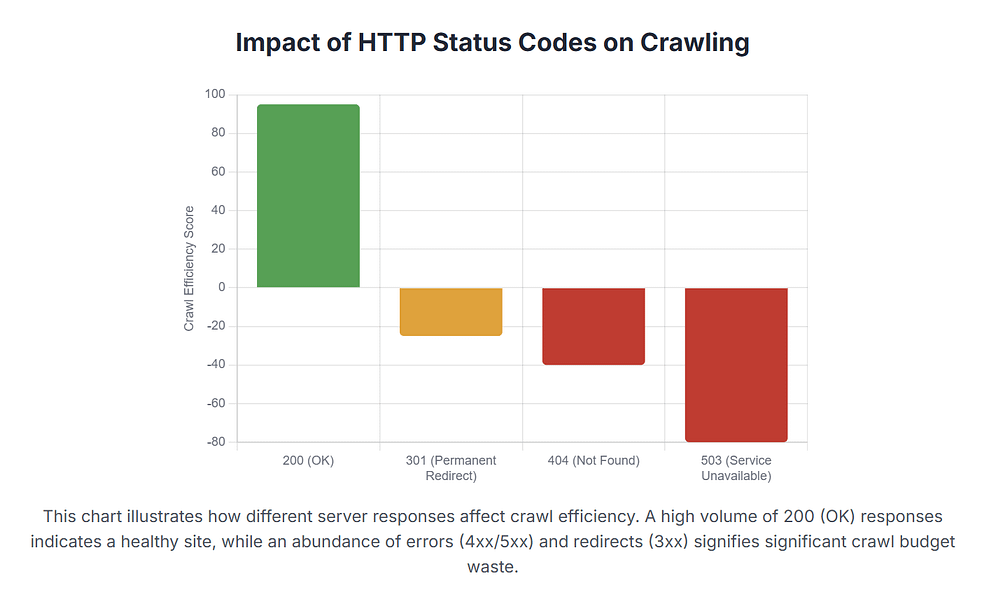

A thorough log analysis can precisely quantify crawl budget waste and show where to act. By analyzing the distribution of HTTP status codes, an SEO can determine exactly what percentage of crawl requests are being “wasted” on 404 errors, 5xx server errors, or long redirect chains.15 Log files can also reveal if a disproportionate number of crawls are being spent on low-value pages from faceted navigation or internal search results.16 For large-scale analysis, specialized tools like Botify and Lumar are valuable because they can process massive log files to provide actionable insights into bot activity patterns and crawl efficiency.8
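Once Googlebot’s requests are isolated, quantifying waste is a matter of bucketing status codes. A minimal Python sketch, assuming you have the status codes of verified Googlebot requests as a list of integers: it reports each bucket as a percentage of all crawl requests. Counting 304 (Not Modified) separately is a design choice; a 304 lets the server answer a revalidation without resending the page, so it is not treated as waste here.

```python
from collections import Counter


def status_breakdown(status_codes: list[int]) -> dict[str, float]:
    """Percentage of crawl requests per response class, rounded to 0.1."""
    if not status_codes:
        return {}
    buckets = Counter()
    for code in status_codes:
        if code == 200:
            buckets["ok"] += 1
        elif code == 304:
            buckets["not_modified"] += 1  # cheap revalidation, not waste
        elif 300 <= code < 400:
            buckets["redirect"] += 1
        elif code in (404, 410):
            buckets["not_found_or_gone"] += 1
        elif 500 <= code < 600:
            buckets["server_error"] += 1
        else:
            buckets["other"] += 1  # e.g. 403 or 429
    total = len(status_codes)
    return {name: round(100 * count / total, 1) for name, count in buckets.items()}
```

For a sample of ten requests with seven 200s, one 301, one 404, and one 503, the function reports 70% `ok` and 10% in each of the other three buckets, so 30% of that sample went to redirects, dead ends, or errors.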

The following table details the impact of common HTTP status codes on a site’s crawl budget.

| Status Code | Name | Effect on Crawl Budget | Recommended Action |
| --- | --- | --- | --- |
| 200 | OK | Efficient, signals a healthy page. | Continue to update and optimize. |
| 301, 302 | Redirects | Wastes crawl requests in a chain. | Use 301 permanent redirects, eliminate chains. |
| 404 | Not Found | Wastes a crawl request on a dead end. | Fix broken links, implement 301 redirects to a relevant page. |
| 410 | Gone | A strong signal to Google not to crawl again. | Use for permanently removed pages. |
| 5xx | Server Error | Causes Google to scale back crawling. | Check server health, address server-side issues. |

Diagnosis is a methodical process, and its aim is always to maximize your crawl budget. A high-level signal in GSC, such as a large number of “Discovered – currently not indexed” pages, should be treated as a hypothesis.6 This hypothesis must then be validated with the definitive data from server log files.16 This methodical, evidence-based approach is what separates an expert from a novice; it ensures that every optimization is data-backed and addresses a verified problem.

The Strategic Blueprint: Executing a Crawl Budget Optimization Plan

With a clear diagnosis, a site can implement a multi-faceted optimization plan that addresses server health, crawl directives, and site architecture.

The Technical Foundation: A High-Performance Environment

The most impactful optimization is to create a fast and stable technical environment. A faster website allows Googlebot to fetch and render more pages in the same amount of time, which can organically increase the crawl capacity limit.1 The decisive factor here is server response time, the same foundation that also supports user-facing performance metrics such as Core Web Vitals. Upgrading hosting, using a Content Delivery Network (CDN) to reduce latency, and compressing images and other files are foundational steps in this process.11 A stable server that returns a high percentage of 200 (OK) status codes is a direct signal to Google that the site is healthy and can handle more crawl requests.9

Guiding Google with Directives: The Art of Crawl Control

Crawl budget can be managed directly by using specific directives to tell Googlebot what to prioritize. The robots.txt file is the primary tool for this. It should be used to strategically disallow crawling of pages or resources that have no value for search, such as private admin pages, dynamically generated internal search results, or endless faceted navigation URLs.1 Google explicitly advises using robots.txt to block pages for the long term, rather than using it as a temporary measure to reallocate crawl budget.1
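Before deploying new rules, it is worth checking which URLs they would actually block. The sketch below uses Python’s standard-library `urllib.robotparser`; the rules and URLs are hypothetical. That parser applies simple prefix matching and takes the first matching rule in file order, whereas Google supports `*` and `$` wildcards and applies the most specific matching rule, so treat this as a quick sanity check rather than a replica of Googlebot’s behavior.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for illustration; substitute your own robots.txt content.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly, without fetching it over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def blocked_urls(parser: RobotFileParser, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """URLs the given crawler would be told not to fetch under these rules."""
    return [url for url in urls if not parser.can_fetch(user_agent, url)]
```

With these rules, `/search?q=shoes` and `/admin/login` are reported as blocked while `/shoes/red` stays crawlable. Note that `Disallow: /search` is a prefix rule, so it would also block a page such as `/search-tips`.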

A crucial distinction must be made between a disallow directive and a noindex tag. A disallow in robots.txt prevents Googlebot from even crawling a page, thereby saving crawl budget. A noindex tag, however, is a meta directive that the bot can only discover by first crawling the page, so the crawl request is still spent.1 It follows that a noindex tag on a page blocked by robots.txt is never seen. For pages that have duplicate content but must remain accessible to users, a canonical tag is the correct solution. Canonicalization consolidates the ranking signals from multiple versions of a page onto a single preferred URL, directing Googlebot to the most important version of the content.3 Google treats the canonical tag as a strong hint rather than a binding directive, so duplicate URLs may still be crawled from time to time.
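To audit these signals at scale, you need to read them from each page’s HTML. The Python sketch below uses the standard-library `html.parser` to extract the `rel="canonical"` link and any `noindex` value in a `robots` or `googlebot` meta tag, and reports whether the canonical points to a different URL. It ignores signals sent in HTTP headers (such as `X-Robots-Tag` or a `Link` header), which a fuller audit would also need to check.

```python
from html.parser import HTMLParser


class IndexingSignals(HTMLParser):
    """Collects the rel=canonical href and any robots/googlebot noindex from a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonical = None  # href of <link rel="canonical">, if present
        self.noindex = False

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values may be None.
        attributes = {name: (value or "") for name, value in attrs}
        if tag == "link" and "canonical" in attributes.get("rel", "").lower().split():
            self.canonical = attributes.get("href") or None
        elif tag == "meta" and attributes.get("name", "").lower() in ("robots", "googlebot"):
            directives = {part.strip().lower() for part in attributes.get("content", "").split(",")}
            if "noindex" in directives:
                self.noindex = True


def indexing_signals(page_url: str, html: str) -> dict:
    """Summarize the canonical and noindex signals found in one page's HTML."""
    parser = IndexingSignals()
    parser.feed(html)
    parser.close()
    return {
        "canonical": parser.canonical,
        "points_elsewhere": parser.canonical is not None and parser.canonical != page_url,
        "noindex": parser.noindex,
    }
```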

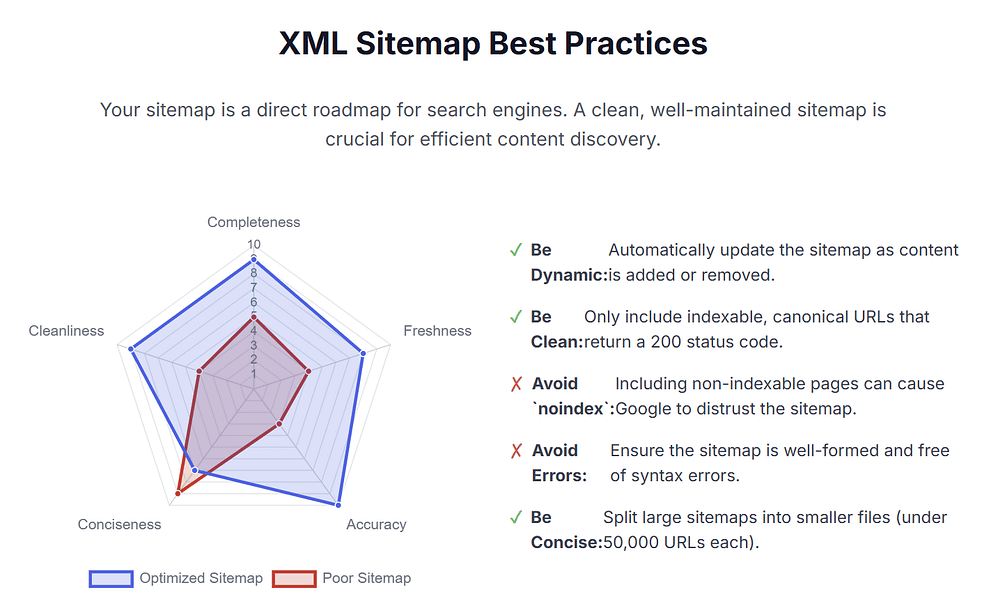

The Sitemap as a Strategic Roadmap

The XML sitemap serves as a critical roadmap, guiding search engines to all the important pages on a site.3 A well-maintained sitemap ensures that Googlebot can discover new and updated content efficiently, especially pages that may be deep within the site structure or have no internal links pointing to them.22

The following table provides a checklist for ensuring a sitemap is optimized for crawl budget.

| Action | Rationale |
| --- | --- |
| Use Dynamic Sitemaps 24 | Ensures the sitemap always accurately reflects the current site. |
| Avoid noindex URLs 24 | Including them sends conflicting signals and can make search engines rely on the sitemap less. |
| Limit File Size 24 | Each sitemap is limited to 50,000 URLs and 50 MB (uncompressed); splitting large sitemaps with a sitemap index prevents URLs from being missed. |
| Ensure it’s Error-Free 24 | An error-free sitemap improves crawl efficiency and prevents indexing problems. |
| Submit to GSC 22 | Ensures efficient crawling and provides monitoring for indexing issues. |
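Several items in this checklist can be enforced at the moment the sitemap is generated. The Python sketch below filters a hypothetical list of page records down to URLs that return 200, carry no noindex, and are their own canonical, then splits them into files of at most 50,000 URLs and builds a sitemap index that points to those files. The `Page` record and its fields are assumptions about what your CMS or crawler can export; a real generator would also need to check file size and write the files to disk.

```python
from dataclasses import dataclass
from typing import Optional
from xml.etree import ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"
MAX_URLS_PER_SITEMAP = 50_000  # protocol limit; files must also stay under 50 MB uncompressed


@dataclass
class Page:  # hypothetical record exported from a CMS or crawler
    url: str
    status: int
    noindex: bool = False
    canonical: Optional[str] = None


def sitemap_eligible(pages: list[Page]) -> list[str]:
    """Keep only URLs that return 200, are indexable, and are their own canonical."""
    return [
        p.url for p in pages
        if p.status == 200 and not p.noindex and p.canonical in (None, p.url)
    ]


def chunk(urls: list, size: int = MAX_URLS_PER_SITEMAP) -> list[list]:
    """Split a URL list into consecutive slices of at most `size` entries."""
    return [urls[i:i + size] for i in range(0, len(urls), size)]


def build_urlset(urls: list[str]) -> str:
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        # ElementTree escapes characters such as & in text content.
        ET.SubElement(ET.SubElement(urlset, "url"), "loc").text = url
    return ET.tostring(urlset, encoding="unicode")


def build_sitemap_index(sitemap_locations: list[str]) -> str:
    index = ET.Element("sitemapindex", xmlns=SITEMAP_NS)
    for location in sitemap_locations:
        ET.SubElement(ET.SubElement(index, "sitemap"), "loc").text = location
    return ET.tostring(index, encoding="unicode")
```

Running the filter on every build, rather than editing the XML by hand, is what keeps the sitemap “dynamic”: redirected, removed, noindexed, and canonicalized URLs drop out automatically.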

Architecting for Discovery: Internal Linking & Site Structure

The most impactful way to influence crawl demand is through a logical site architecture and a robust internal linking structure. The “pyramid” site structure, where the homepage links to key category pages, which then link to individual subpages, makes it easy for Googlebot to navigate and understand the hierarchy of the site.10

Internal links act as a direct map for crawlers, passing link equity and guiding them to high-priority pages.11 Ensuring that important pages are only a few clicks away from the homepage helps direct Google’s attention to the most valuable content.20 A proactive strategy involves identifying and linking to “orphaned pages”—those without any internal links—to ensure they are discoverable and can receive crawl budget.7
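Click depth can be measured directly with a breadth-first search over the internal link graph, since BFS reaches every page first along a path with the fewest clicks from the start page. In the Python sketch below, `link_graph` is a hypothetical `{page: [linked_pages]}` mapping from a site crawl, and the three-click cutoff is a common rule of thumb rather than a Google requirement. Pages missing from the result are unreachable through internal links, which makes them orphan candidates.

```python
from collections import deque


def click_depths(link_graph: dict[str, list[str]], homepage: str) -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from the homepage to each reachable URL."""
    depths = {homepage: 0}
    queue = deque([homepage])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:  # first visit in BFS order is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def deeper_than(depths: dict[str, int], max_depth: int = 3) -> list[str]:
    """URLs that sit more than max_depth clicks from the homepage."""
    return sorted(url for url, depth in depths.items() if depth > max_depth)
```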

Crawl budget optimization is not an isolated technical task but is, in fact, a symbiotic relationship with fundamental SEO practices. The very actions required to optimize it—improving site speed, cleaning up duplicate content, and strengthening internal links—simultaneously improve user experience and build authority. This creates a powerful positive feedback loop: a technically clean and well-structured site is easier for Google to crawl, which leads to a greater crawl budget, which in turn results in faster indexing and better organic visibility.

Advanced Crawl Management for Enterprise and High-Volume Sites

For enterprise-level websites with millions of pages and complex technical requirements, crawl budget optimization requires a more sophisticated approach. The focus shifts to advanced crawl management to ensure that every crawl request is spent on the highest-value content. This involves a constant prioritization of pages and the systematic removal or management of low-value content.20 For example, in an e-commerce context, this may involve updating or removing outdated product pages to ensure that crawl budget is focused on in-stock, revenue-generating items.

Specialized tools are often necessary to address unique technical challenges. For sites that rely heavily on JavaScript for dynamic content rendering, services like Prerender.io can ensure that crawlers can efficiently access and index all content without wasting time on rendering processes.19 Tools like Botify excel in providing a granular analysis of log files for enterprise-scale sites, offering predictive models and real-time monitoring that can quantify crawl budget waste and identify areas for improvement with precision.17

Ultimately, the goal of strategic crawl management is to transform a technical metric into a driver of business growth. By ensuring that Google’s resources are dedicated to the most valuable pages, a business can achieve faster indexing of new products or content, ensure content freshness, and increase the likelihood of those pages ranking. This reduced time-to-market for content and improved search visibility directly translates to a tangible increase in organic traffic and revenue, demonstrating the powerful impact of this advanced SEO discipline.19

Conclusion: A Final Word on Strategic Crawl Management

Crawl budget is a strategic, not just a technical, consideration. It is the core mechanism by which Google allocates its attention to a website, and a mismanaged budget on a large scale can have a direct and negative impact on a business’s organic performance. The key to maximizing this resource lies in a methodical, data-driven approach that begins with a clear diagnosis using tools like Google Search Console and, for larger sites, server log file analysis.

The most effective strategies for increasing and optimizing crawl budget are not simple hacks but are, in fact, foundational SEO practices. By improving a site’s technical health, streamlining its content architecture, and clearly signaling to Google which pages are the most important, a site can ensure that crawlers are both able and willing to explore its most valuable content.1 In the end, a site with an excellent user experience, robust technical performance, and a clear content hierarchy is a site that Google will naturally want to crawl more often, leading to faster indexing, higher rankings, and sustained organic growth.

Frequently Asked Questions

1. What is crawl budget and why is it important for my website?

Crawl budget refers to the number of pages search engine bots (like Googlebot) can and will crawl on your website. It’s determined by a combination of the bot’s “crawl capacity” (how many requests your server can handle) and “crawl demand” (how popular and fresh your content is). Optimizing your crawl budget ensures that search engine bots efficiently find and index your most important and newly updated content, leading to better search visibility.

2. How can I check my website’s crawl budget?

The primary tool for monitoring your crawl budget is the “Crawl Stats” report within Google Search Console. This report provides data on the total number of crawl requests per day, the average response time of your server, and the types of content being crawled. For a more detailed analysis, you can also examine your server log files to see which pages are being crawled, by which bots, and how frequently.

3. What are the main factors that waste crawl budget?

Common factors that can waste your crawl budget include:

- Technical Issues: Slow server response times (over 200ms), redirect chains, and broken links (404 errors).

- Low-Quality Content: URLs with little to no unique value, duplicate content, and outdated pages.

- Poor Site Structure: Orphaned pages that are not linked internally, and faceted navigation that creates an overwhelming number of parameter-based URLs.

4. How do XML sitemaps help with crawl budget optimization?

An XML sitemap acts as a roadmap for search engines. By submitting a clean, well-structured sitemap to Google Search Console, you can guide crawlers to your most important pages, ensuring they are discovered and indexed efficiently. Best practices include only listing canonical URLs, using dynamic sitemaps, and keeping them free of errors or broken links.

5. How often should I check my crawl budget and make optimizations?

For large or frequently updated websites (e.g., news sites, e-commerce stores), it’s advisable to monitor crawl stats weekly to spot any sudden changes in crawl activity. For smaller websites, a monthly or quarterly review is generally sufficient. The key is to address technical issues and content quality proactively, as consistent site health is the most effective long-term strategy for maintaining a healthy crawl budget.

Works cited

- Crawl Budget Management For Large Sites | Google Search Central …, accessed on August 18, 2025, https://developers.google.com/search/docs/crawling-indexing/large-site-managing-crawl-budget

- developers.google.com, accessed on August 18, 2025, https://developers.google.com/search/docs/crawling-indexing/large-site-managing-crawl-budget#:~:text=The%20amount%20of%20time%20and,after%20it%20has%20been%20crawled.

- Managing Crawl Budget: A Guide – WP-CRM System, accessed on August 18, 2025, https://www.wp-crm.com/crawl-budget/

- Crawl Budget Demystified: Optimizing Large Sites – SeoTuners, accessed on August 18, 2025, https://seotuners.com/blog/seo/crawl-budget-demystified-optimizing-large-sites/

- Crawl budget for SEO: the ultimate reference guide – Conductor, accessed on August 18, 2025, https://www.conductor.com/academy/crawl-budget/

- Crawl Budget Optimization: How to Improve Crawl Efficiency | Uproer, accessed on August 18, 2025, https://uproer.com/articles/crawl-budget-optimization/

- How to Optimize Your Crawl Budget: Insights From Top Technical SEO Experts – Sitebulb, accessed on August 18, 2025, https://sitebulb.com/resources/guides/how-to-optimize-your-crawl-budget-insights-from-top-technical-seo-experts/

- How do you find out if your website has a crawl budget issue? : r/bigseo – Reddit, accessed on August 18, 2025, https://www.reddit.com/r/bigseo/comments/1cb1375/how_do_you_find_out_if_your_website_has_a_crawl/

- How to use Google’s Crawl Stats Report for Technical SEO – Huckabuy, accessed on August 18, 2025, https://huckabuy.com/how-to-use-googles-crawl-stats-report-for-technical-seo/

- Technical SEO Crawlability Checklist – HubSpot Blog, accessed on August 18, 2025, https://blog.hubspot.com/marketing/technical-seo-guide/crawlability-checklist

- 13 Steps To Boost Your Site’s Crawlability And Indexability, accessed on August 18, 2025, https://www.searchenginejournal.com/crawling-indexability-improve-presence-google-5-steps/167266/

- Your Guide to Understanding Technical SEO – Abstrakt Marketing Group, accessed on August 18, 2025, https://www.abstraktmg.com/what-is-technical-seo/

- How to Fix Crawl Errors Using GSC | Quattr Blog, accessed on August 18, 2025, https://www.quattr.com/search-console/fix-crawl-errors-using-gsc

- URL Inspection Tool – Search Console Help, accessed on August 18, 2025, https://support.google.com/webmasters/answer/9012289?hl=en

- Understanding Log File Analysis and How to Do It for SEO? – Search Atlas, accessed on August 18, 2025, https://searchatlas.com/blog/log-file-analysis/

- Log File Analysis for SEO: An Introduction – Conductor, accessed on August 18, 2025, https://www.conductor.com/academy/log-file-analysis/

- Tracking AI Bots on Your Site with Log File Analysis | Botify, accessed on August 18, 2025, https://www.botify.com/blog/tracking-ai-bots-with-log-file-analysis

- Use log analysis to improve your SEO – Semji, accessed on August 18, 2025, https://semji.com/blog/use-log-analysis-to-improve-your-seo/

- 7 Advanced Crawl Budget Optimization Companies in 2025: Expert Rankings & Analysis, accessed on August 18, 2025, https://www.singlegrain.com/seo/7-advanced-crawl-budget-optimization-companies-in-2025-expert-rankings-analysis/

- Understanding Crawl Budget Optimization — Here’s Everything You …, accessed on August 18, 2025, https://blog.hubspot.com/website/crawl-budget-optimization

- How to get Googlebot to crawl my website faster to pick up my new meta description, accessed on August 18, 2025, https://webmasters.stackexchange.com/questions/115111/how-to-get-googlebot-to-crawl-my-website-faster-to-pick-up-my-new-meta-descripti

- Crawlability & Indexability: What They Are & How They Affect SEO, accessed on August 18, 2025, https://www.semrush.com/blog/what-are-crawlability-and-indexability-of-a-website/

- Beyond SEO: Googlebot Optimization, accessed on August 18, 2025, https://neilpatel.com/blog/googlebot-optimization/

- Sitemap Best Practices & SEO Benefit | Symphonic Digital, accessed on August 18, 2025, https://www.symphonicdigital.com/blog/html-sitemap-seo-benefits-best-practices