Introduction: Unlocking Deeper SEO Insights with Custom Extraction

The digital landscape is in constant flux, and for any business striving for page-one rankings, understanding and leveraging structured data has moved from an optional enhancement to a fundamental necessity. Structured data is a critical component of visibility in Search Engine Results Pages (SERPs). It helps search engines understand content in context, which makes pages eligible for rich snippets and knowledge panel entries and supports voice search. Because its implementation directly influences how a website’s content is interpreted and presented to users, accurate deployment and regular auditing are essential.

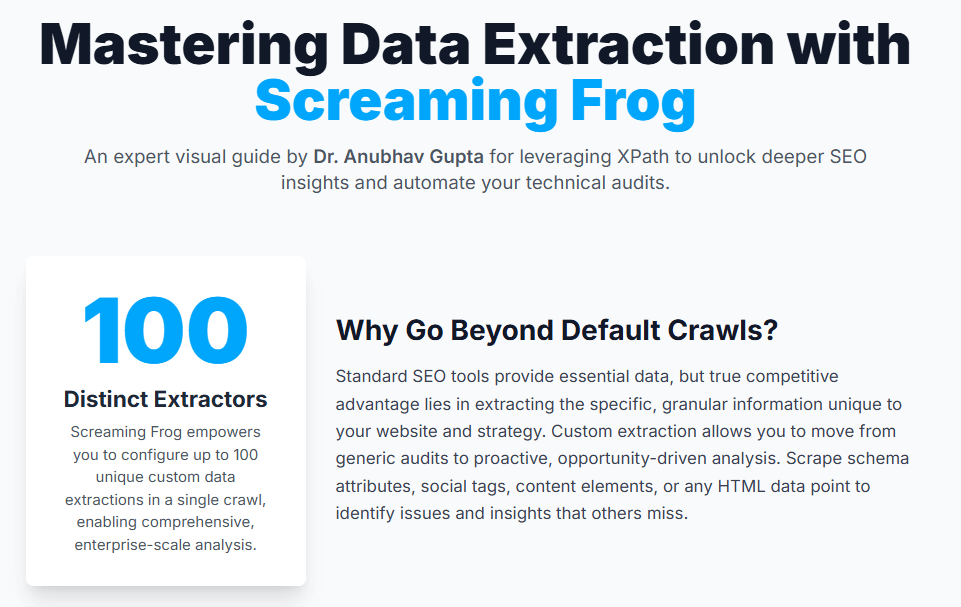

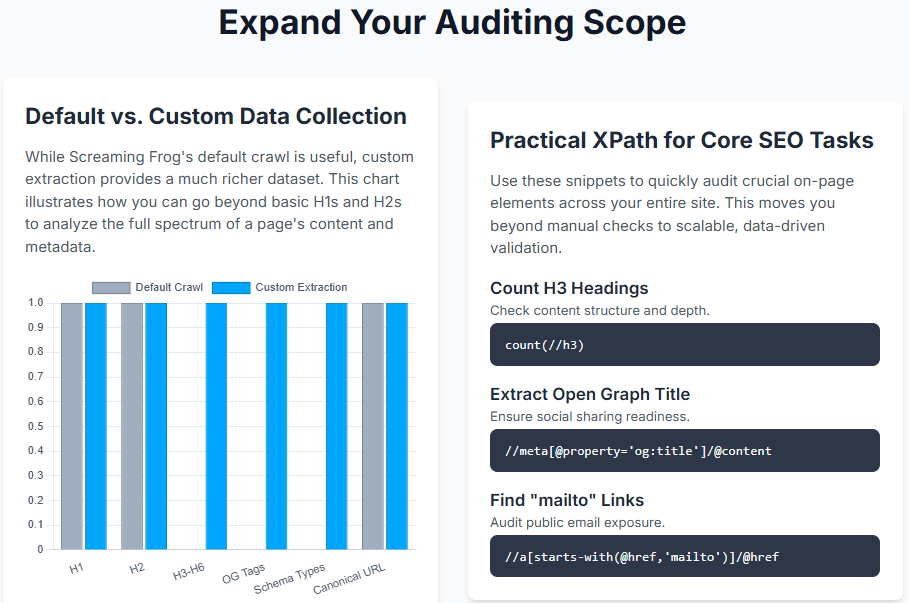

For technical SEO professionals, the Screaming Frog SEO Spider is widely recognized as an enterprise-scale technical crawl tool. Its custom extraction feature goes beyond standard crawl data by letting SEOs scrape virtually any data from the HTML of a web page using XPath, CSSPath, or regex.[2] This enables customized, advanced analysis of any data in the HTML, even on pages rendered with JavaScript.[3] That matters for identifying specific, granular issues or opportunities that predefined metrics or basic crawls might overlook. Collecting highly specific data points, such as every H3 on a page or specific schema attributes for authors or products,[2] turns an SEO audit from a reactive problem-finding exercise into a proactive, opportunity-driven analysis. SEOs can anticipate potential issues, spot granular optimization opportunities, and build more robust, future-proof strategies.

At the heart of this advanced data retrieval lies XPath. XPath, or XML Path Language, is a query language designed for selecting nodes from an XML-like document, such as HTML.[2] It provides precise control over which data elements are extracted, including specific attributes,[2] and it is generally recommended for most common scenarios because it can extract any HTML element of a webpage.[2] While CSSPath is a quicker alternative in some cases, XPath’s advanced querying capabilities make it indispensable for complex extractions. Because custom extraction runs across an entire crawl, SEOs are no longer constrained by manual checks or limited predefined reports. The resulting structured data becomes the foundation for advanced analyses, automated reporting,[7] and even AI integrations.[7] This supports scaling SEO impact across large websites or multiple client projects, combining data-driven insights with creative content strategies to deliver consistent, measurable results.

I. Demystifying Screaming Frog’s Custom Extraction Feature

Custom Extraction is a versatile feature of the Screaming Frog SEO Spider that allows users to scrape any data from the HTML of a web page,[2] extending the tool far beyond its default crawl metrics. It can collect data from the raw HTML of any page (text-only mode) or from content rendered in JavaScript rendering mode,[3] so it can handle the complexities of modern, dynamically loaded websites. Its strategic value lies in uncovering granular information that standard reports do not capture, such as missing schema implementations, inconsistent social meta tags, specific content elements, or unique attributes.[2]

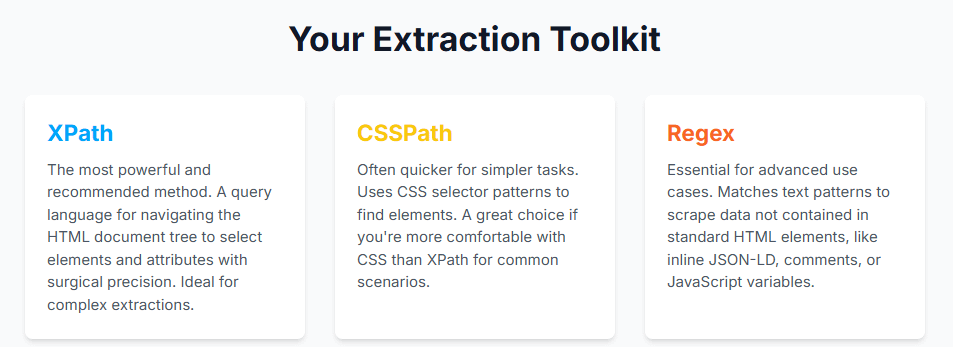

Screaming Frog offers three primary methods for custom data extraction, each suited for different scenarios:

- XPath: A query language for selecting nodes from an XML-like document, such as HTML.[2] It is recommended for most common scenarios and can extract any HTML element.[2] XPath offers precise control, including the selection of specific attributes.

- CSSPath: CSS selectors are patterns used to select elements, and they are often the quickest of the three methods. Like XPath, CSSPath suits most common scenarios, so users can choose whichever method they are most comfortable with.

- Regex (Regular Expressions): A special string of text used to match patterns in data. Regex is essential for anything that is not part of an HTML element, such as JSON embedded in the page code. It is best for advanced uses, such as scraping HTML comments or inline JavaScript, and is particularly useful for extracting structured data formatted as JSON-LD.[3]
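Because JSON-LD sits inside a script block rather than in element attributes, a pattern match is the natural way to reach it. The Python sketch below illustrates the idea with the standard library’s re and json modules; the pattern is an illustrative assumption (it expects the type attribute in double quotes) and is not an expression taken from Screaming Frog’s documentation.

```python
import json
import re

# Illustrative pattern (an assumption, not a Screaming Frog default): capture the
# body of every <script type="application/ld+json"> block, non-greedily, across lines.
JSON_LD_PATTERN = re.compile(
    r'<script[^>]*type="application/ld\+json"[^>]*>(.*?)</script>',
    re.IGNORECASE | re.DOTALL,
)


def extract_json_ld_types(html):
    """Return the @type of every object found in the page's JSON-LD blocks."""
    types = []
    for raw_block in JSON_LD_PATTERN.findall(html):
        data = json.loads(raw_block)
        # A block may hold a single object or a list of objects.
        items = data if isinstance(data, list) else [data]
        types.extend(item.get("@type", "") for item in items)
    return types


page = """
<html><head>
<script type="application/ld+json">{"@context": "https://schema.org", "@type": "Article"}</script>
<script type="application/ld+json">[{"@type": "BreadcrumbList"}, {"@type": "Organization"}]</script>
</head><body></body></html>
"""
print(extract_json_ld_types(page))  # ['Article', 'BreadcrumbList', 'Organization']
```

Parsing the captured text with json.loads, rather than regexing individual properties, keeps nested objects intact and fails loudly on malformed JSON-LD, which is itself a useful audit signal.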

Beyond selecting the extraction method, understanding the “Extraction Filter” options is equally critical, as these determine which part of the selected HTML element is extracted:[2]

- Extract HTML Element: Extracts the selected element and all of its inner HTML content.[2] This is useful for capturing the full HTML structure, including tags and attributes.

- Extract Inner HTML: Extracts only the inner HTML content of the selected element.[2] This excludes the element’s own tag but includes any nested HTML elements.

- Extract Text: Collects the text content of the selected element and of any sub-elements.[2] This is ideal for extracting plain text, with all HTML tags stripped for cleaner data.

- Function Value: Returns the result of the supplied function, for example, count(//h1) to find the number of H1 tags on a page.[2] This is indispensable for XPath functions that return a number, string, or boolean rather than an element.
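To make the four filters concrete, here is a minimal Python sketch that reproduces what each one returns for the same H3. It assumes Python’s standard-library xml.etree.ElementTree, which needs well-formed (XHTML-style) markup and supports only a small XPath subset; it illustrates the filter semantics and is not how the SEO Spider evaluates expressions.

```python
import xml.etree.ElementTree as ET

# Well-formed, XHTML-style sample page: ElementTree cannot parse tag-soup HTML.
PAGE = """<html><body>
<div class="intro"><h3>Shipping <em>options</em></h3><h3>Returns</h3></div>
</body></html>"""

root = ET.fromstring(PAGE)
h3 = root.find(".//h3")  # first <h3> in document order

# "Extract HTML Element": the element's own tag plus everything inside it.
# (ET.tostring also appends the element's tail text; this sample has none.)
outer_html = ET.tostring(h3, encoding="unicode")

# "Extract Inner HTML": leading text plus each serialized child (children's tails included).
inner_html = (h3.text or "") + "".join(ET.tostring(child, encoding="unicode") for child in h3)

# "Extract Text": text of the element and all its descendants, tags stripped.
text_only = "".join(h3.itertext())

# "Function Value": the result of a function, here the equivalent of count(//h3).
h3_count = len(root.findall(".//h3"))

print(outer_html)  # <h3>Shipping <em>options</em></h3>
print(inner_html)  # Shipping <em>options</em>
print(text_only)   # Shipping options
print(h3_count)    # 2
```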

The real power of custom extraction comes from combining the right extraction method with the right filter. For instance, using XPath to target a specific meta tag’s content attribute[2] is far more efficient and precise than extracting the entire HTML element and parsing it manually. Similarly, using Function Value with count(//h3)[2] returns the metric directly, without extracting every H3 and counting them externally. Combining methods and filters this way is what turns raw collection into purpose-driven data acquisition.

A crucial consideration for modern web scraping is JavaScript rendering. By default, Screaming Frog extracts data from the static HTML.[4] However, many modern websites rely heavily on client-side rendering for their content, so the tool also allows data to be collected from content rendered in JavaScript rendering mode.[3] This detail is highly significant for technical SEO: without JavaScript rendering, custom extractions on dynamic websites can miss critical content, structured data, or links that only appear after JavaScript executes, leaving blind spots and inaccurate audit data. An expert approach to data extraction accounts for the rendering environment, so that the crawler sees the page as a user (and a search engine) would.

II. Step-by-Step: Setting Up Your Custom Extractions

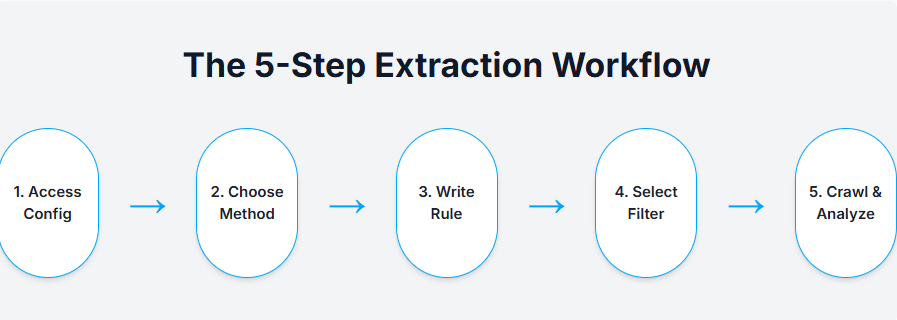

The journey to custom data extraction begins by navigating to Configuration > Custom > Extraction in the Screaming Frog SEO Spider interface.[2] This opens the Custom Extraction window, which can accommodate up to 100 distinct custom data extraction requests.[4]

For those less familiar with writing XPath or CSSPath expressions, the “Visual Custom Extraction” feature is an invaluable starting point. To use it, click the ‘browser’ icon next to the extractor field, enter the target URL, and select the element on the page you want to scrape. The SEO Spider highlights that area of the page, suggests a variety of expressions, and previews what will be extracted, which greatly simplifies finding the correct XPath or CSSPath. You can also navigate to other pages within this internal browser by holding Ctrl and clicking a link.

For advanced users, or when visual selection is impractical, manual input offers greater precision. Select “XPath” as the extraction method and enter the XPath expression directly in the Rule field.[2] A common first step is to use a browser’s developer tools (e.g., Chrome DevTools): right-click the element, choose Inspect, and copy the XPath or selector provided.[2] While this provides a starting point, the copied XPath often needs refinement for robustness and efficiency.[6] The extractor ‘name’ field can also be updated; it becomes the column name in the exported data.[2] A green checkmark next to the input indicates valid syntax.[4] After configuring the extractor(s), click ‘OK’ or ‘Add Extractor’ to save, then start the crawl by clicking ‘Crawl’.[2] The scraped data populates in real time in the ‘Custom Extraction’ tab and also appears as dedicated columns in the ‘Internal’ tab, giving immediate visibility into the extracted information.[2]

Browser-generated XPaths are a convenient starting point, but refining them is what separates a novice from an expert. They are frequently absolute paths (e.g., /html/body/div/main/article/h1), which are brittle and break with even minor structural changes to a page. An experienced SEO refines them into relative or attribute-based XPaths (e.g., //h1[@class='article-title'] or //div[@id='content']//p). This makes extraction rules more resilient to website updates and easier to maintain over time.
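The brittleness argument can be demonstrated with a small Python sketch. It assumes the standard-library xml.etree.ElementTree (well-formed markup, limited XPath subset) and two hypothetical versions of the same page, the second with an extra wrapper element added by a redesign.

```python
import xml.etree.ElementTree as ET

BEFORE = """<html><body><div><main><article>
<h1 class="article-title">Custom Extraction Guide</h1>
</article></main></div></body></html>"""

# The same hypothetical page after a redesign wraps <article> in a new <section>.
AFTER = """<html><body><div><main><section><article>
<h1 class="article-title">Custom Extraction Guide</h1>
</article></section></main></div></body></html>"""

# ElementTree evaluates paths from the <html> root element, so this child-by-child
# path plays the role of the absolute /html/body/div/main/article/h1.
ABSOLUTE = "./body/div/main/article/h1"
# Attribute-based relative path, like //h1[@class='article-title'].
RELATIVE = ".//h1[@class='article-title']"


def extract_title(markup, path):
    """Return the matched heading text, or None when the path finds nothing."""
    node = ET.fromstring(markup).find(path)
    return node.text if node is not None else None


for label, markup in (("before", BEFORE), ("after", AFTER)):
    print(label, extract_title(markup, ABSOLUTE), extract_title(markup, RELATIVE))
# before Custom Extraction Guide Custom Extraction Guide
# after None Custom Extraction Guide
```

The absolute path silently returns nothing after the layout change, which in a crawl shows up as empty extraction cells rather than an error, while the attribute-based path keeps working.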

Here is a quick-reference guide to the essential steps for configuring custom extractions:

Table 1: Essential Steps for Configuring Custom Extractions

| Step | Action | Description | Key Setting/Location | Source |
|---|---|---|---|---|
| 1 | Access Feature | Open the custom extraction configuration window. | Configuration > Custom > Extraction | [2] |
| 2 | Add Extractor | Initiate a new custom extraction rule. | Click Add | [2] |
| 3 | Choose Method | Select the preferred extraction method: XPath, CSSPath, or Regex. | Extraction Method dropdown | [2] |
| 4 | Input Expression | Enter the specific XPath, CSSPath, or Regex query. | Rule field | [2] |
| 5 | Select Filter | Define what part of the element to extract (HTML, Text, Function Value). | Extraction Filter dropdown | [2] |
| 6 | Name Extractor | Assign a descriptive column name for the extracted data in the final report. | Name field | [2] |
| 7 | Confirm & Crawl | Save the extractor(s) and initiate the website crawl. | Click OK or Add Extractor, then Crawl | [2] |
| 8 | View Data | Access the extracted data in real time during or after the crawl. | Custom Extraction tab or Internal tab | [2] |

III. XPath for SEO: Practical Examples & Advanced Applications

XPath’s power lies in its ability to precisely target and extract specific elements from a webpage’s HTML structure. This section will explore foundational and advanced applications of XPath for various SEO auditing needs.

Foundational XPath for Common SEO Elements

By default, the Screaming Frog SEO Spider collects only H1s and H2s.[2] Custom extraction allows analysis of all heading levels (H3s, H4s, etc.), which is vital for auditing content structure and hierarchy. For instance, to collect all H3 tags on a page, the XPath expression is //h3. To collect only the first H3 tag, use /descendant::h3[1]. To count the H3 tags on a page, use count(//h3) with the ‘Function Value’ filter.[2]
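The three heading queries can be mirrored in a short Python sketch, assuming the standard-library xml.etree.ElementTree and a hypothetical well-formed page. The last line also shows a common pitfall: //h3[1] selects every H3 that is the first H3 child of its parent, which is not the same as the first H3 in the document.

```python
import xml.etree.ElementTree as ET

# Hypothetical page with H3s spread across two containers.
PAGE = """<html><body>
<div><h3>Delivery</h3><p>Ships in 2 days.</p><h3>Returns</h3></div>
<div><h3>Warranty</h3></div>
</body></html>"""

root = ET.fromstring(PAGE)

all_h3 = [h.text for h in root.findall(".//h3")]              # like //h3
first_h3 = root.find(".//h3").text                             # like /descendant::h3[1]
h3_count = len(all_h3)                                         # like count(//h3)
first_per_parent = [h.text for h in root.findall(".//h3[1]")]  # like //h3[1]

print(all_h3)            # ['Delivery', 'Returns', 'Warranty']
print(first_h3)          # Delivery
print(h3_count)          # 3
print(first_per_parent)  # ['Delivery', 'Warranty']
```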

Social meta tags control how content appears when shared on social media platforms, which directly affects click-through rates from social channels. For example, to extract the Open Graph title, the XPath is //meta[starts-with(@property, 'og:title')]/@content.[2] Similarly, for the Open Graph description, use //meta[starts-with(@property, 'og:description')]/@content. To capture the Twitter Card type, the XPath is //meta[@name='twitter:card']/@content.[6]
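A quick way to sanity-check social meta extraction is the Python sketch below. It assumes the standard-library xml.etree.ElementTree, which has no starts-with(), so an exact-match attribute predicate stands in for it; the helper name meta_content is hypothetical.

```python
import xml.etree.ElementTree as ET

PAGE = """<html><head>
<meta property="og:title" content="Custom Extraction with XPath"/>
<meta property="og:description" content="Scrape any element at scale."/>
<meta name="twitter:card" content="summary_large_image"/>
</head><body/></html>"""

root = ET.fromstring(PAGE)


def meta_content(attribute, value):
    """Like //meta[@attribute='value']/@content; None flags a missing tag."""
    node = root.find(f".//meta[@{attribute}='{value}']")
    return node.get("content") if node is not None else None


print(meta_content("property", "og:title"))        # Custom Extraction with XPath
print(meta_content("property", "og:description"))  # Scrape any element at scale.
print(meta_content("name", "twitter:card"))        # summary_large_image
print(meta_content("property", "og:image"))        # None (tag missing from this page)
```

Returning None for a missing tag, rather than an empty string, makes pages without a given social tag easy to filter after export.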

Identifying specific links or attributes can reveal critical insights into site architecture, internal linking strategies, and asset optimization. For instance, to extract all image source URLs, use //img/@src.[6] To extract mailto links, useful for auditing email address exposure, the XPath is //a[starts-with(@href, 'mailto')]/@href.[6] To find links with specific anchor text, such as “click here”, use //a[contains(., 'click here')].[6]
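The same three link and asset checks can be sketched in Python with the standard-library xml.etree.ElementTree. Because ElementTree lacks starts-with() and contains(), plain string methods do that filtering here; the sample page and its URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

PAGE = """<html><body>
<img src="/images/hero.webp" alt="Hero"/>
<img src="/images/logo.png" alt="Logo"/>
<p>Questions? <a href="mailto:info@example.com">Email us</a> or
<a href="/contact">click here</a> for the form.</p>
</body></html>"""

root = ET.fromstring(PAGE)

# //img/@src
image_srcs = [img.get("src") for img in root.iter("img")]

# //a[starts-with(@href, 'mailto')]/@href
mailto_links = [a.get("href") for a in root.iter("a")
                if a.get("href", "").startswith("mailto")]

# //a[contains(., 'click here')] (the link's full text, including nested tags)
click_here_hrefs = [a.get("href") for a in root.iter("a")
                    if "click here" in "".join(a.itertext())]

print(image_srcs)        # ['/images/hero.webp', '/images/logo.png']
print(mailto_links)      # ['mailto:info@example.com']
print(click_here_hrefs)  # ['/contact']
```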

The following table provides a quick reference for core XPath expressions commonly used in everyday SEO audits:

Table 2: Core XPath Expressions for Everyday SEO Audits

| SEO Element | XPath Expression | Extraction Filter | Intended Output | Source |
|---|---|---|---|---|
| All H3 Tags | //h3 | Extract HTML Element / Extract Text | All H3 headings on a page. | |
| First H3 Tag | /descendant::h3[1] | Extract HTML Element / Extract Text | The first H3 heading on a page. | |
| Count of H3s | count(//h3) | Function Value | Number of H3 tags on a page. | [2] |
| Open Graph Title | //meta[starts-with(@property, 'og:title')]/@content | Extract Text | Content of the Open Graph title meta tag. | [2] |
| Twitter Card Type | //meta[@name='twitter:card']/@content | Extract Text | Content of the Twitter Card type meta tag. | [6] |
| All Image SRCs | //img/@src | Extract Text | URLs of all images on a page. | [6] |
| Mailto Links | //a[starts-with(@href, 'mailto')]/@href | Extract Text | All email links found on a page. | [6] |

Unlocking Structured Data with XPath

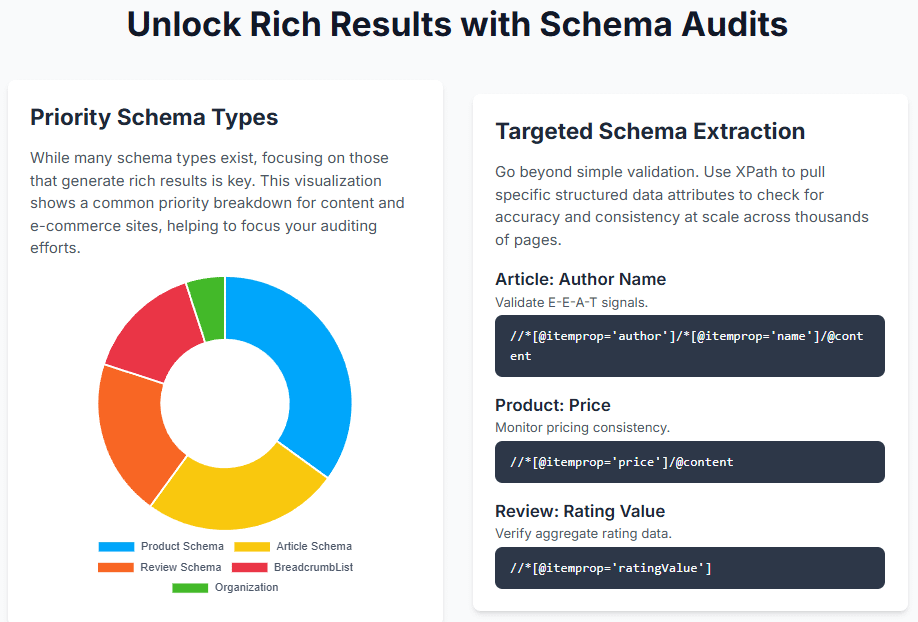

While Screaming Frog offers a built-in structured data tab, custom extraction provides the flexibility to pull specific attributes or validate implementation details not covered by the default reports. To extract all schema types (itemtype) present in a page’s Microdata, the XPath is //*[@itemtype]/@itemtype.[2] Similarly, to extract all itemprop values, use //*[@itemprop]/@itemprop.
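Listing every itemtype and itemprop on a page can be sketched in Python as follows, assuming the standard-library xml.etree.ElementTree and a hypothetical well-formed Microdata snippet (itemscope is given an empty value so the markup parses as XML).

```python
import xml.etree.ElementTree as ET

PAGE = """<html><body>
<div itemscope="" itemtype="https://schema.org/Product">
  <span itemprop="name">Trail Shoe</span>
  <div itemprop="offers" itemscope="" itemtype="https://schema.org/Offer">
    <meta itemprop="price" content="89.99"/>
    <meta itemprop="priceCurrency" content="USD"/>
  </div>
</div>
</body></html>"""

root = ET.fromstring(PAGE)

# //*[@itemtype]/@itemtype, in document order
item_types = [el.get("itemtype") for el in root.findall(".//*[@itemtype]")]
# //*[@itemprop]/@itemprop, in document order
item_props = [el.get("itemprop") for el in root.findall(".//*[@itemprop]")]

print(item_types)  # ['https://schema.org/Product', 'https://schema.org/Offer']
print(item_props)  # ['name', 'offers', 'price', 'priceCurrency']
```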

For content-heavy sites, Article schema is vital because it gives search engines critical information about each piece of content. To extract the author name, the XPath is //*[@itemprop='author']/*[@itemprop='name']/@content.[6] For the publish date, it is //*[@itemprop='datePublished']/@content,[6] and for the modified date, //*[@itemprop='dateModified']/@content.[6]
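The Article queries translate almost one-to-one into ElementTree paths. This is a minimal sketch assuming Python’s standard-library xml.etree.ElementTree and a hypothetical Microdata article; the author name and dates are placeholders.

```python
import xml.etree.ElementTree as ET

ARTICLE = """<html><body><article itemscope="" itemtype="https://schema.org/Article">
<div itemprop="author" itemscope="" itemtype="https://schema.org/Person">
<meta itemprop="name" content="Jane Doe"/>
</div>
<meta itemprop="datePublished" content="2025-07-01"/>
<meta itemprop="dateModified" content="2025-07-10"/>
</article></body></html>"""

root = ET.fromstring(ARTICLE)


def content_of(path):
    """Return the matched element's content attribute, or None if it is missing."""
    node = root.find(path)
    return node.get("content") if node is not None else None


# //*[@itemprop='author']/*[@itemprop='name']/@content
author = content_of(".//*[@itemprop='author']/*[@itemprop='name']")
# //*[@itemprop='datePublished']/@content and //*[@itemprop='dateModified']/@content
published = content_of(".//*[@itemprop='datePublished']")
modified = content_of(".//*[@itemprop='dateModified']")

print(author, published, modified)  # Jane Doe 2025-07-01 2025-07-10
```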

Practical use cases extend to various schema types:

- Product Schema: Essential for e-commerce sites to display rich results. Examples include product price: //*[@itemprop='price']/@content,[6] product currency: //*[@itemprop='priceCurrency']/@content,[6] and product availability: //*[@itemprop='availability']/@href.[6]

- Review Schema: Crucial for displaying star ratings and review counts in SERPs. To extract the review count, use //*[@itemprop='reviewCount'],[6] and for the rating value, //*[@itemprop='ratingValue'].[6]

- Local Business/Organization Schema: Important for local SEO and knowledge panel information. Examples include organization name: //*[contains(@itemtype, 'Organization')]/*[@itemprop='name'],[6] and street address: //*[@itemprop='address']/*[@itemprop='streetAddress'].[6]
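For a product audit, the useful unit is one record per page with a blank wherever a property is missing. The sketch below assumes Python’s standard-library xml.etree.ElementTree and a hypothetical Microdata product page; product_fields is an illustrative helper, not part of any tool.

```python
import xml.etree.ElementTree as ET

PRODUCT_PAGE = """<html><body><div itemscope="" itemtype="https://schema.org/Product">
<span itemprop="name">Trail Shoe</span>
<div itemprop="offers" itemscope="" itemtype="https://schema.org/Offer">
<meta itemprop="price" content="89.99"/>
<meta itemprop="priceCurrency" content="USD"/>
<link itemprop="availability" href="https://schema.org/OutOfStock"/>
</div>
<div itemprop="aggregateRating" itemscope="" itemtype="https://schema.org/AggregateRating">
<span itemprop="ratingValue">4.6</span> from <span itemprop="reviewCount">128</span> reviews
</div>
</div></body></html>"""


def product_fields(markup):
    """Collect one audit row of Product, Offer and AggregateRating properties."""
    root = ET.fromstring(markup)

    def first(path, attribute=None):
        node = root.find(path)
        if node is None:
            return None  # property missing: flag it in the audit
        # Read an attribute (like /@content) or the element's text (like Extract Text).
        return node.get(attribute) if attribute else "".join(node.itertext()).strip()

    return {
        "price": first(".//*[@itemprop='price']", "content"),
        "currency": first(".//*[@itemprop='priceCurrency']", "content"),
        "availability": first(".//*[@itemprop='availability']", "href"),
        "rating": first(".//*[@itemprop='ratingValue']"),
        "reviews": first(".//*[@itemprop='reviewCount']"),
    }


print(product_fields(PRODUCT_PAGE))
# {'price': '89.99', 'currency': 'USD', 'availability': 'https://schema.org/OutOfStock',
#  'rating': '4.6', 'reviews': '128'}
```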

The following table demonstrates the precision of XPath for various structured data types, highlighting their SEO value:

Table 3: XPath for Structured Data Extraction & Analysis

| Schema Type | Data Point | XPath Expression | Extraction Filter | SEO Value | Source |
|---|---|---|---|---|---|
| Article | Author Name | //*[@itemprop='author']/*[@itemprop='name']/@content | Extract Text | Validate author attribution for E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness). | [6] |
| Article | Publish Date | //*[@itemprop='datePublished']/@content | Extract Text | Audit content freshness and identify stale or outdated content. | [6] |
| Product | Price | //*[@itemprop='price']/@content | Extract Text | Monitor pricing consistency and identify pricing errors. | [6] |
| Product | Availability | //*[@itemprop='availability']/@href | Extract Text | Identify out-of-stock products that affect user experience and crawl budget. | [6] |
| Review | Rating Value | //*[@itemprop='ratingValue'] | Extract Text | Verify correct display of aggregate ratings in SERPs. | [6] |
| Organization | Name | //*[contains(@itemtype, 'Organization')]/*[@itemprop='name'] | Extract Text | Ensure consistent business information for local SEO and knowledge panels. | [6] |
| BreadcrumbList | All Links | //*/*[@itemprop]/a/@href | Extract Text | Map site hierarchy and identify broken or inconsistent breadcrumbs. | [6] |

Advanced XPath Functions for Complex SEO Scenarios

Beyond basic element extraction, XPath offers powerful functions for deeper analysis and in-crawl data manipulation.

The count() function is invaluable for quantifying occurrences of any element, enabling bulk analysis of content patterns.[2] The starts-with(x, y) function checks whether string x begins with string y, which is useful for identifying specific link types such as mailto links (//a[starts-with(@href, 'mailto')]).[6] Similarly, contains(x, y) checks whether string x includes string y, which can be used to find links with specific anchor text (//a[contains(., 'click here')]).[6]

The string-join() function consolidates fragmented text. Its syntax is string-join([XPath selector], '[delimiter]'). For example, to combine the text of all <p> tags into a single string separated by spaces, use string-join(//p, ' ').[2] This is especially useful for gathering all paragraph content into a single cell for later content analysis (e.g., word count, keyword density, readability).
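What string-join(//p, ' ') produces, and the word count an analyst would typically compute from it afterwards, can be sketched in Python with the standard-library xml.etree.ElementTree on a hypothetical page. Splitting on whitespace is a simplifying assumption about what counts as a word.

```python
import xml.etree.ElementTree as ET

PAGE = """<html><body>
<p>Custom extraction pulls any element.</p>
<p>XPath gives <strong>precise</strong> control.</p>
</body></html>"""

root = ET.fromstring(PAGE)

# Each <p>'s full text (nested tags included), joined with a single space:
# the same shape of output as string-join(//p, ' ').
joined = " ".join("".join(p.itertext()) for p in root.iter("p"))

# Naive word count: whitespace-separated tokens.
word_count = len(joined.split())

print(joined)      # Custom extraction pulls any element. XPath gives precise control.
print(word_count)  # 9
```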

Applying distinct-values() is powerful for identifying unique values. Its syntax is distinct-values([XPath selector]). For example, distinct-values(//@class) returns every unique class attribute value used on a page.[2] Comparing this list against the site’s stylesheets can help surface unused CSS rules, which can then be removed to improve site speed. It can also be combined with count() to flag pages with an excessive number of unique class values, which may indicate bloated HTML: count(distinct-values(//@class)).[2]
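The sketch below mirrors distinct-values(//@class) and its count in Python, assuming the standard-library xml.etree.ElementTree and a hypothetical page. It also shows a subtlety: //@class yields whole attribute strings, so an element with class="card featured" contributes one value, not two class names.

```python
import xml.etree.ElementTree as ET

PAGE = """<html><body class="page">
<div class="card featured"><p class="card">One</p></div>
<div class="card"><p class="muted">Two</p></div>
</body></html>"""

root = ET.fromstring(PAGE)

# //@class: whole attribute strings, in document order.
class_values = [el.get("class") for el in root.iter() if el.get("class") is not None]

# Order-preserving de-duplication; XPath's distinct-values() makes no ordering promise.
distinct_classes = list(dict.fromkeys(class_values))
distinct_count = len(distinct_classes)  # like count(distinct-values(//@class))

# Splitting each value on whitespace gives individual class names instead.
class_tokens = sorted({token for value in class_values for token in value.split()})

print(distinct_classes)  # ['page', 'card featured', 'card', 'muted']
print(distinct_count)    # 4
print(class_tokens)      # ['card', 'featured', 'muted', 'page']
```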

Conditional logic with if-then-else allows advanced checks directly within the crawl. The syntax is if ([condition]) then [XPath selector] else ''. For instance, to return the canonical URL of pages that are also noindexed (a common conflict), the XPath would be if(contains(//meta[@name='robots']/@content, 'noindex')) then //link[@rel='canonical']/@href else 'Not noindexed'.[2] This quickly surfaces conflicting directives. Another example flags potentially “thin content” by counting paragraphs and extracting the text when the count falls below a threshold: if(count(//p) < 4) then string-join(//p, ' ') else ''.[2]
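Both conditional checks can be reproduced in Python to see exactly what they flag. This minimal sketch assumes the standard-library xml.etree.ElementTree and hypothetical pages; the helper names are illustrative, and the four-paragraph threshold is the one used in the example above.

```python
import xml.etree.ElementTree as ET


def noindex_canonical_check(markup):
    """Mirror of: if(contains(//meta[@name='robots']/@content, 'noindex'))
    then //link[@rel='canonical']/@href else 'Not noindexed'."""
    root = ET.fromstring(markup)
    robots = root.find(".//meta[@name='robots']")
    if robots is not None and "noindex" in robots.get("content", ""):
        canonical = root.find(".//link[@rel='canonical']")
        # The XPath returns an empty result when there is no canonical tag.
        return canonical.get("href") if canonical is not None else ""
    return "Not noindexed"


def thin_content(markup, min_paragraphs=4):
    """Mirror of: if(count(//p) < 4) then string-join(//p, ' ') else ''."""
    paragraphs = ET.fromstring(markup).findall(".//p")
    if len(paragraphs) < min_paragraphs:
        return " ".join("".join(p.itertext()) for p in paragraphs)
    return ""


CONFLICT = """<html><head>
<meta name="robots" content="noindex, follow"/>
<link rel="canonical" href="https://example.com/guide/"/>
</head><body><p>Short page.</p></body></html>"""

CLEAN = """<html><head/><body>
<p>One.</p><p>Two.</p><p>Three.</p><p>Four.</p>
</body></html>"""

print(noindex_canonical_check(CONFLICT), "|", thin_content(CONFLICT))
# https://example.com/guide/ | Short page.
print(noindex_canonical_check(CLEAN), "|", repr(thin_content(CLEAN)))
# Not noindexed | ''
```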

Finally, format-dateTime() is invaluable for standardizing date formats. Its syntax is format-dateTime([date XPath], '[Y0001]-[M01]-[D01]'), and the source value must be a valid date-time (e.g., 2025-07-12T09:30:00Z), not a bare date. For example, to standardize dates from various sources (e.g., <time> tags or Open Graph data), one could use format-dateTime(//time/@datetime, '[Y0001]-[M01]-[D01]') or format-dateTime(//meta[@property='article:published_time']/@content, '[Y0001]-[M01]-[D01]').[2] Consistent formatting makes later analysis and reporting easier.
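When dates have already been exported in mixed forms, the same normalization can be done after the crawl. This Python sketch uses the standard-library datetime module as a stand-in for format-dateTime(); the sample timestamps are hypothetical, and each value keeps its own calendar date rather than being converted to UTC.

```python
from datetime import datetime

# Hypothetical raw values, e.g. from <time datetime="..."> or article:published_time.
raw_dates = [
    "2025-07-12T09:30:00+05:30",
    "2025-07-01T18:00:00Z",
    "2025-06-30T23:15:00-04:00",
]


def to_iso_date(value):
    """Reduce an ISO 8601 date-time to YYYY-MM-DD, like the picture '[Y0001]-[M01]-[D01]'."""
    # datetime.fromisoformat() only accepts a trailing "Z" from Python 3.11,
    # so rewrite it as an explicit UTC offset first.
    parsed = datetime.fromisoformat(value.replace("Z", "+00:00"))
    return parsed.strftime("%Y-%m-%d")


print([to_iso_date(value) for value in raw_dates])
# ['2025-07-12', '2025-07-01', '2025-06-30']
```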

These advanced XPath functions mark a significant shift in how SEOs can use Screaming Frog. Instead of pulling raw data and doing all complex analysis afterwards in spreadsheets or scripts, they enable in-crawl data pre-processing and conditional flagging. The exported data is cleaner, more structured, and often pre-analyzed, so complex issues (like conflicting directives or thin content) can be identified immediately without extensive post-crawl manipulation. This shortens the time to insight and turns Screaming Frog into a data pipeline rather than just a data collector.

While basic XPaths may suffice for one-off tasks, robust XPaths are essential for scalable, maintainable SEO audits. Fragile positional paths (e.g., div/p) break easily with minor website design changes, which wastes time and produces unreliable data. The functions and examples above favor more resilient queries, built on attributes like @class or functions like starts-with(), that are less prone to breaking. This focus on long-term effectiveness and future-proof strategies[10] is a key part of an expert-level approach to data extraction.

Table 4: Advanced XPath Functions for Complex SEO Scenarios

| Function | Syntax | Example XPath | SEO Use Case | Source |
|---|---|---|---|---|
| string-join() | string-join([XPath selector], '[delimiter]') | string-join(//p, ' ') | Consolidate all paragraph text on a page into a single string for content analysis (e.g., word count, keyword density). | [2] |
| distinct-values() | distinct-values([XPath selector]) | distinct-values(//@class) | Identify all unique class attribute values used on a page, to help audit unused styles and improve site speed. | [2] |
| if-then-else | if ([condition]) then [XPath selector] else '' | if(contains(//meta[@name='robots']/@content, 'noindex')) then //link[@rel='canonical']/@href else 'Not noindexed' | Automatically flag pages that combine a noindex directive with a canonical URL, for immediate resolution. | [2] |
| count() with condition | if(count([XPath selector]) < [threshold]) then [XPath selector] else '' | if(count(//p) < 4) then string-join(//p, ' ') else '' | Identify and extract content from potentially “thin” pages (e.g., fewer than 4 paragraphs) for content review. | [2] |
| format-dateTime() | format-dateTime([date XPath], '[Y0001]-[M01]-[D01]') | format-dateTime(//meta[@property='article:published_time']/@content, '[Y0001]-[M01]-[D01]') | Standardize date formats extracted from various sources (e.g., Open Graph, Schema) for consistent analysis. | [2] |

IV. From Data to Decisions: Analyzing Your Extractions

Scraped data appears in real time during the crawl under the ‘Custom Extraction’ tab, as well as in the ‘Internal’ tab,[2] which allows quick verification of the extracted information. Once the crawl is complete, export the data by clicking the export button.[5] The data can be exported in various spreadsheet formats, most commonly CSV or Excel, ready for further analysis.[7]

After exporting, spreadsheet tools like Microsoft Excel or Google Sheets become indispensable for initial data manipulation and analysis.[7] While Google Sheets offers a basic IMPORTHTML function for simple table scraping,[11] Screaming Frog’s custom extraction provides a far more flexible and comprehensive data collection mechanism.

Pivot tables are an exceptionally powerful spreadsheet feature for summarizing, reorganizing, and analyzing the large datasets extracted by Screaming Frog.[12] They let SEOs dynamically split and filter data and define the rows, columns, and values to calculate, turning raw data into digestible information.[12]

For example, using H3 counts extracted with the count(//h3) XPath, a pivot table can group URLs by their H3 count. This quickly highlights pages with zero or very few H3s, which can indicate content structure issues, readability problems, or even thin content.[2] Extracted schema types or product availability data work the same way: a pivot table can reveal the distribution of specific schema types across a site or list every page where a product is marked as “out of stock.” This helps audit schema consistency, catch business-critical issues, and protect rich snippet eligibility.[6] Turning raw extracted data into aggregated summaries is what bridges the gap between data collection and concrete SEO recommendations: a simple per-page metric like the H3 count can reveal a site-wide content structuring problem once it is aggregated.

Consider the following hypothetical extracted data for H3 counts across a website:

Table 5: Example Data Analysis with Extracted Headings

| URL | H3 Count (Raw Data) |
|---|---|
| URL 1 | 5 |
| URL 2 | 0 |
| URL 3 | 3 |
| URL 4 | 0 |
| URL 5 | 7 |
| URL 6 | 2 |
| URL 7 | 0 |
| URL 8 | 4 |

Applying a pivot table to this raw data, with ‘H3 Count’ as the row and ‘Count of URLs’ as the value, grouped by ranges (e.g., 0, 1-3, 4+), could yield:

| H3 Count Range | Number of URLs |
|---|---|
| 0 | 3 |
| 1-3 | 2 |
| 4+ | 3 |

This summarized data immediately highlights that 3 out of 8 sampled URLs have no H3 headings, indicating a significant content structuring deficiency that requires attention for readability and SEO.
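The same grouping can also be scripted outside a spreadsheet. In this Python sketch the export is hypothetical: the ‘Address’ and ‘H3 Count’ column names and the example.com URLs are placeholders, and only the H3 values come from Table 5. Real column names depend on how the extractors were named in Screaming Frog.

```python
import csv
import io
from collections import Counter

# Hypothetical export; column names and URLs are placeholders.
EXPORT = """Address,H3 Count
https://example.com/a,5
https://example.com/b,0
https://example.com/c,3
https://example.com/d,0
https://example.com/e,7
https://example.com/f,2
https://example.com/g,0
https://example.com/h,4
"""


def bucket(h3_count):
    """Same ranges as the pivot table: 0, 1-3, 4+."""
    if h3_count == 0:
        return "0"
    return "1-3" if h3_count <= 3 else "4+"


rows = csv.DictReader(io.StringIO(EXPORT))
summary = Counter(bucket(int(row["H3 Count"])) for row in rows)

for label in ("0", "1-3", "4+"):
    print(label, summary[label])
# 0 3
# 1-3 2
# 4+ 3
```

For a real export, io.StringIO(EXPORT) would be replaced by an opened CSV file; the bucketing logic stays the same.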

Conclusion

Screaming Frog’s custom extraction feature, particularly when used with the precision of XPath, turns the SEO Spider from a crawler into a capable data analysis tool. The ability to define and extract virtually any data point from a webpage’s HTML, including intricate structured data elements and social meta tags, lets SEO professionals move beyond generic audits. It shifts the work from reactive problem-solving to proactive, strategic analysis: granular optimization opportunities surface early, and emerging issues can be caught before they escalate.

The strategic combination of extraction methods and filters, coupled with the critical consideration of JavaScript rendering, ensures that the collected data is not only comprehensive but also highly accurate and relevant for modern web environments. Furthermore, the application of advanced XPath functions for in-crawl data pre-processing, such as string-join() for content consolidation or if-then-else for conditional flagging, significantly enhances efficiency and accelerates the journey from raw data to actionable intelligence. This level of analytical depth and the focus on building robust, future-proof extraction rules are hallmarks of an expert approach to technical SEO.

By mastering custom extraction and XPath, SEO professionals can unlock deeper insights and make data-driven decisions that lead to consistent, measurable results and sustained digital growth. This mastery is not just about collecting more data; it is about collecting the right data, in the right format, to inform the right strategies.

Frequently asked questions

1. What exactly is “Custom Extraction” in Screaming Frog, and why is it crucial for SEO?

Custom Extraction is a powerful feature in Screaming Frog that lets you define and pull specific data points from the HTML of web pages during a crawl, beyond what the tool collects by default. It’s crucial for SEO because it enables you to gather granular, unique data – like schema properties, specific content within divs, social media meta tags, or even phone numbers – at scale. This allows for deep, data-driven audits that uncover hidden issues or opportunities, moving you beyond generic reports to highly targeted optimizations. Dr. Anubhav Gupta emphasizes this as key for gaining a competitive edge.

2. When should I use XPath versus CSSPath or Regex for extraction?

XPath is generally recommended for its precision and versatility, especially for complex data points or for navigating deep within the HTML structure. Use it when you need to select elements based on their attributes, text content, or position relative to other elements. CSSPath is often quicker for simpler selections if you’re comfortable with CSS selectors, such as targeting elements by class or ID. Regex (Regular Expressions) is essential for extracting data that isn’t neatly contained within HTML elements, such as inline JSON-LD scripts, HTML comments, or inline JavaScript. Dr. Gupta’s approach highlights XPath as the go-to for surgical data retrieval.

3. Can I use custom extraction to audit my schema markup, and how?

Absolutely! Custom extraction, particularly with XPath, is an effective way to audit your schema markup at scale. You can write XPath expressions that target specific properties in Microdata markup (e.g., //*[@itemprop='price']/@content for product price, or //*[@itemprop='author']/*[@itemprop='name']/@content for article author); JSON-LD, which sits inside a script block, is better handled with Regex. By extracting these values across thousands of pages, you can identify missing, incorrect, or inconsistent schema implementations, which is vital for securing rich results and improving your site’s visibility in SERPs.

4. What are some common challenges with XPath, and how can I troubleshoot them?

Common challenges include incorrect XPath syntax, multiple matching elements when you only want one, or dynamic HTML structures that change with each page load. To troubleshoot:

- Use your browser’s developer tools (e.g., Chrome’s Inspect Element): Right-click an element, select ‘Inspect’, then ‘Copy’ > ‘Copy XPath’ to get a starting point.

- Refine your XPath: If it’s too broad, add more specific attributes (@class, @id) or positional arguments ([1], [last()]).

- Test in Screaming Frog’s “Custom Extraction” preview: This allows you to see the results of your XPath on a single URL before a full crawl.

- Leverage XPath functions: Functions like contains() and starts-with(), together with the text() node test, can help refine your selections. Dr. Gupta’s experience suggests that precise XPath is key to avoiding erroneous data.

5. How can the data extracted using Screaming Frog and XPath be used for actionable SEO insights?

The extracted data is incredibly valuable for identifying patterns, issues, and opportunities at scale. For instance:

- Content Audits: Extract H3s or word counts to find “thin content” or content needing expansion.

- Schema Validation: Bulk check schema properties for accuracy and consistency across your site.

- Internal Linking: Extract anchor text and destination URLs from specific content areas to find internal link opportunities or issues.

- Technical Health: Identify pages missing critical meta tags (like Open Graph for social sharing) or specific tracking codes.

This data can then be exported to spreadsheets and analyzed further (e.g., with pivot tables, as suggested by Dr. Gupta) to prioritize fixes and inform your SEO strategy.

Works cited

1. Dr. Anubhav Gupta – Top SEO & ORM Expert India – SARK Promotions, accessed on July 12, 2025, https://www.marketingseo.in/about/copy-of-cto

2. Web Scraping & Custom Extraction – Screaming Frog, accessed on July 12, 2025, https://www.screamingfrog.co.uk/seo-spider/tutorials/web-scraping/

3. How to take advantage of Custom Extraction | Screaming Frog, accessed on July 12, 2025, https://screamingfrog.club/en/custom-extraction/

4. Web Scraping and Custom Extraction – Screaming Frog, accessed on July 12, 2025, https://screamingfrog.club/en/web-scraping/

5. Visual Custom Extraction & Web Scraping – Screaming Frog SEO Spider – YouTube, accessed on July 12, 2025, https://www.youtube.com/watch?v=nFFEZTULm48&pp=0gcJCfwAo7VqN5tD

6. The Complete Guide to Screaming Frog Custom Extraction with XPath & Regex | Uproer, accessed on July 12, 2025, https://uproer.com/articles/screaming-frog-custom-extraction-xpath-regex/

7. What are some advanced uses of screaming frog you are utilizing? : r/TechSEO – Reddit, accessed on July 12, 2025, https://www.reddit.com/r/TechSEO/comments/pephbe/what_are_some_advanced_uses_of_screaming_frog_you/

8. Generate JSON-LD Schema at Scale With JavaScript Snippets – Screaming Frog, accessed on July 12, 2025, https://www.screamingfrog.co.uk/blog/generate-json-ld-schema-at-scale/

9. Advanced Xpath Functions for the Screaming Frog SEO Spider, accessed on July 12, 2025, https://www.screamingfrog.co.uk/blog/advanced-xpath-screaming-frog/

10. Dr. Anubhav Gupta – Top SEO & ORM Expert India | SARK Promotions, accessed on July 12, 2025, https://www.marketingseo.in/about/cto-dr-anubhav-gupta-seo-expert

11. 4 Easy Ways to Scrape Data from a Table – Octoparse, accessed on July 12, 2025, https://www.octoparse.com/blog/scrape-data-from-a-table

12. Using Excel Pivot Tables for SEO analysis | Edit – Salocin Group, accessed on July 12, 2025, https://salocin-group.com/edit/insights/using-excel-pivot-tables-for-seo-analysis/