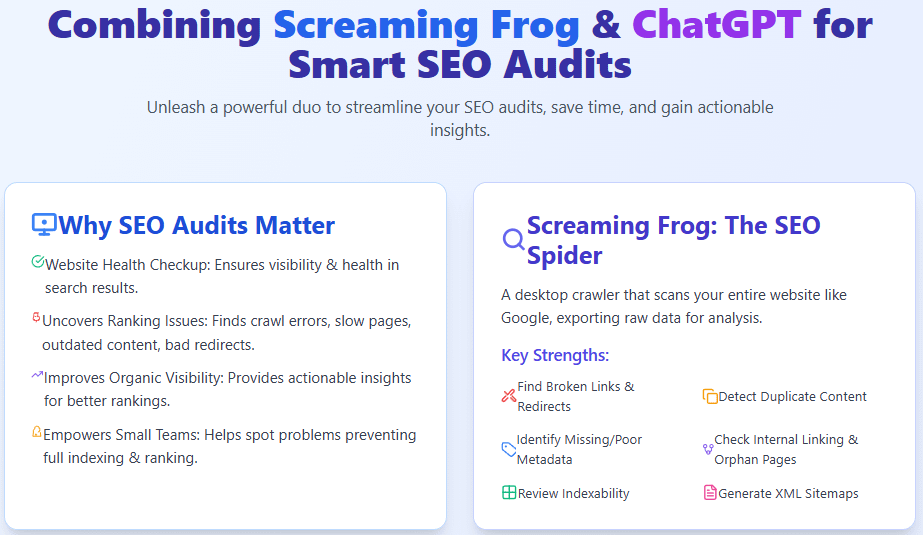

SEO audits are essential for making sure your website is visible and healthy in search results. An audit checks your site’s crawlability, content, and technical setup to uncover issues that could be hurting your rankings. In fact, well-executed SEO audits “provide actionable insights into improving your organic visibility”. For small businesses and content creators, regular audits help spot broken links, missing tags, duplicate pages, or poor content – problems that can prevent Google from fully indexing and ranking your pages. By combining a proven crawler tool like Screaming Frog with ChatGPT (AI assistance), you can perform deeper, faster audits. Screaming Frog gathers all the raw data from your site (links, status codes, page titles, etc.), and then ChatGPT can read that data, answer natural-language questions, and suggest SEO fixes in plain English. This pairing makes SEO audits more intelligent, scalable, and accessible even if you’re not a technical specialist.

Why SEO Audits Matter for Your Website

Think of an SEO audit as a health checkup for your website. It uncovers what’s stopping your site from ranking well – from crawl errors and slow pages to outdated content or bad redirects. For example, Google’s Search Console has an Indexing report that shows which pages are crawled or blocked; similarly, Screaming Frog crawls every page and finds issues. By fixing these problems, you make your site more search-engine-friendly. As one SEO guide puts it: “What stops your site from ranking where you want in search results? That’s the question SEO audits try to answer”. In other words, audits uncover missing or faulty SEO elements (like meta tags or sitemaps) so you can update them, helping organic traffic and visibility. For a small team or solo creator, regularly auditing your site ensures that growth in content or pages doesn’t accidentally create hidden SEO problems.

Screaming Frog: The Swiss Army Knife for Technical SEO

Screenshot of Screaming Frog’s SEO Spider interface (dashboard of a site crawl, as shown on Zapier).

Screaming Frog’s SEO Spider is a desktop tool (Windows/Mac/Linux) that crawls your entire website the way Google does. It’s often called an “SEO spider” because it starts at your homepage and follows all links, mapping out every page. Even the free version crawls up to 500 URLs, which is plenty for many small sites. The interface looks like a spreadsheet: every row is a page URL, with columns for things like HTTP status, page titles, meta descriptions, H1s, word count, and more. This means you get a sortable overview of your site’s SEO elements. Screaming Frog easily exports to CSV or Excel, so you can take the crawl data anywhere.

Some key strengths of Screaming Frog for technical SEO include:

- Finding Broken Links and Redirects: It lists all 404 (Not Found) errors and redirect chains, so you can spot broken links. As MarketingSEO notes, Screaming Frog “helps audit your entire site, spotting broken links [and] faulty redirects”.

- Detecting Duplicate Content: The tool flags duplicate page titles, meta descriptions, and content (exact duplicates by comparing a hash of each page, and near-duplicates by measuring how similar the text is). Duplicate content can dilute your SEO, so finding it is crucial.

- Identifying Missing or Poor Metadata: You can filter for pages with missing or too-long/short <title> or <meta description> tags, missing <h1> headings, or images without alt text. These basic on-page elements are important ranking signals.

- Checking Internal Linking and Orphan Pages: It shows the internal link count for each page and highlights orphan pages (pages not linked from anywhere). Internal links help SEO, so Screaming Frog helps you plan and fix linking.

- Reviewing Indexability: By integrating with Google Search Console or examining robots.txt and meta robots tags, you can confirm which pages are indexable by Google. For example, a page blocked by robots.txt or tagged noindex is flagged.

- Creating Sitemaps: Screaming Frog can generate XML sitemaps of your pages automatically. Having a sitemap helps search engines find and index your pages.

In short, Screaming Frog is like a giant “look under the hood” for your site. It organizes everything in a filterable table, so you can sort and find issues quickly. As one review notes, even the free plan lets you “analyze elements like broken links, metadata, hreflang attributes, and duplicate pages, plus sitemap generation”. And because it’s on your desktop, it’s quite powerful and configurable (you can adjust crawl speed, authentication, etc.).

Exporting and Reviewing Crawl Data

After running a crawl, Screaming Frog lets you export all the data for further analysis. For example, you can use the Bulk Export menu to save all images, all internal or external links, or all redirect chains as separate CSV files. The Internal and Overview tabs have “Export” buttons to dump the table of data. Typically you’ll export the “Internal All” or “Crawl Overview” CSV.

Once you have the data in a sheet or CSV, you can sort or filter it by any column (page path, title length, response code, etc.). For example, sort by “Title 1 Length” (meta title length) to find titles that are blank or too long. Sort by “Word Count” to find pages with very little text. Filter the “Status Code” column to see all 404s or 301 redirects. These exported tables are the raw material for analysis.
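If you prefer a script to a spreadsheet, the same sorting and filtering can be sketched with Python's standard csv module. This is a minimal illustration, not a definitive recipe: the column names ("Address", "Status Code", "Title 1", "Title 1 Length", "Word Count") are assumed to match your export, the sample rows are made up, and the 300-word threshold is only an example.

```python
import csv
import io

# Made-up sample standing in for an "Internal: All" export.
# Column names are assumptions; check them against your own file.
SAMPLE_CSV = """Address,Status Code,Title 1,Title 1 Length,Word Count
https://example.com/,200,Home,4,850
https://example.com/old-page,404,,0,0
https://example.com/blog/thin,200,Thin post,9,120
https://example.com/blog/long-title,200,A very long title that keeps going well past what fits in a search result,73,900
"""


def load_rows(csv_text):
    """Parse CSV text into a list of dicts keyed by column header."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def to_int(value):
    """Convert a cell to int, treating blanks or junk as 0."""
    try:
        return int(value)
    except (TypeError, ValueError):
        return 0


rows = load_rows(SAMPLE_CSV)

# Filter: all URLs that returned a 404.
not_found = [r["Address"] for r in rows if r["Status Code"] == "404"]

# Filter: live pages with very little text (threshold is illustrative).
thin_pages = [
    r["Address"]
    for r in rows
    if r["Status Code"] == "200" and to_int(r["Word Count"]) < 300
]

# Sort: longest titles first, to spot ones that may get truncated.
by_title_length = sorted(
    rows, key=lambda r: to_int(r["Title 1 Length"]), reverse=True
)

print("404s:", not_found)
print("Thin pages:", thin_pages)
print("Longest title:", by_title_length[0]["Address"])
```

To use it on a real export, replace SAMPLE_CSV with the contents of your own file (for example, read with open(path, newline="", encoding="utf-8")).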

Tip: Use clear filenames when exporting (e.g. site-crawl-2025-07-13.csv) so you can track audit history. Save a copy of the raw crawl before you delete or clean any columns.

Because all data is in spreadsheet form, Screaming Frog’s exports are a perfect match for ChatGPT’s data analysis capabilities (which we’ll explain next). Before bringing data into ChatGPT, however, it’s best to prune any unneeded columns or rows so the file isn’t too large. As one SEO guide warns, a raw export can easily exceed ChatGPT’s limits (6MB). For example, remove columns you won’t use (e.g. if you don’t need Google Analytics data, hide the GA columns) and filter out irrelevant URLs. This “tidying up” makes the data easier for ChatGPT to process.
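The tidying step can also be scripted. The sketch below keeps a short list of columns and drops non-HTML rows, then compares the size before and after. The column names (including "Content Type") and the sample data are assumptions for illustration, so adjust them to whatever your export actually contains.

```python
import csv
import io

# Columns to keep; names are assumptions based on a typical export.
KEEP_COLUMNS = [
    "Address", "Status Code", "Title 1", "Meta Description 1",
    "H1-1", "Word Count", "Inlinks",
]


def trim_crawl(csv_text, keep_columns=KEEP_COLUMNS):
    """Return CSV text with only the wanted columns and only HTML rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=keep_columns, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        # Skip images, scripts, PDFs and other non-HTML resources.
        if not (row.get("Content Type") or "").startswith("text/html"):
            continue
        writer.writerow({col: row.get(col) or "" for col in keep_columns})
    return out.getvalue()


# Made-up two-row export with a few columns we do not need.
raw = (
    "Address,Content Type,Status Code,Title 1,Meta Description 1,"
    "H1-1,Word Count,Inlinks,Crawl Depth,Response Time\n"
    "https://example.com/,text/html; charset=UTF-8,200,Home,Welcome,"
    "Home,850,12,0,0.21\n"
    "https://example.com/logo.png,image/png,200,,,,0,30,1,0.05\n"
)
trimmed = trim_crawl(raw)
print(trimmed)
print(len(raw.encode("utf-8")), "bytes ->", len(trimmed.encode("utf-8")), "bytes")
```

Keep the untouched raw export alongside the trimmed copy so you can always go back to the full data.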

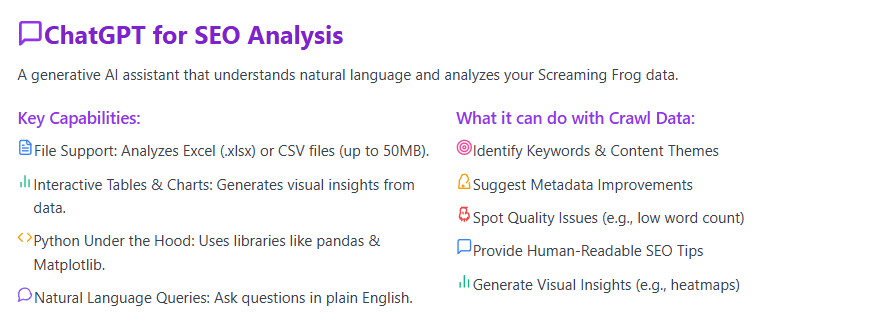

Introducing ChatGPT for SEO Analysis

ChatGPT (powered by OpenAI’s GPT-4) is a generative AI assistant that understands natural language and can analyze data. Think of it like a very smart helper who can read your spreadsheet or crawl report and answer questions about it. With GPT-4’s Advanced Data Analysis (formerly Code Interpreter) feature, you can upload a CSV or Excel file directly and ask ChatGPT to examine it. ChatGPT will parse the data (using Python/pandas behind the scenes) and even create charts or summary tables.

Some key points about ChatGPT’s data analysis capabilities:

- File Support: ChatGPT can analyze Excel (.xlsx) or CSV files you upload. It automatically shows you a data table and can apply filters or computations.

- Interactive Tables and Charts: After analyzing, ChatGPT can show interactive tables and generate charts (bar, pie, scatter, etc.) based on your data. It can even suggest the ideal chart type.

- Python Under the Hood: Behind the scenes, GPT-4 uses Python libraries like pandas and Matplotlib to crunch the numbers and draw graphics. You can view the code it generates for transparency.

- Volume of Data: You can upload CSV or spreadsheet files of up to roughly 50MB (the general per-file cap is 512MB, but spreadsheets have a lower limit), which is handy for site audit data. Crawls from smaller sites fit easily. Large enterprise crawls may need splitting.

Using ChatGPT for SEO means you can describe in natural language what you want to learn from the data. For example, you can ask, “Which pages have missing meta descriptions or duplicate content?” or “Prioritize these URLs by SEO urgency.” ChatGPT will read the spreadsheet columns and give you answers, lists, or even prose summaries.

According to the Advanced Data Analysis docs, you should prepare your data with descriptive column headers (no cryptic acronyms) so the AI can understand it. For instance, rename “MetaDesc” to “Meta Description” and “WC” to “Word Count.” This plain-language naming helps ChatGPT interpret each column correctly.
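Renaming headers by hand is fine for one file, but a small script keeps it consistent across audits. Here is a minimal sketch using the two renames mentioned above; the mapping is only an example, so extend it with whatever abbreviations appear in your own sheet.

```python
import csv
import io

# Example mapping from terse headers to plain-language ones.
HEADER_MAP = {
    "MetaDesc": "Meta Description",
    "WC": "Word Count",
}


def rename_headers(csv_text, header_map=HEADER_MAP):
    """Rewrite only the header row, leaving the data rows untouched."""
    rows = list(csv.reader(io.StringIO(csv_text)))
    if not rows:
        return csv_text
    rows[0] = [header_map.get(name.strip(), name.strip()) for name in rows[0]]
    out = io.StringIO()
    csv.writer(out, lineterminator="\n").writerows(rows)
    return out.getvalue()


renamed = rename_headers(
    "URL,MetaDesc,WC\nhttps://example.com/,Welcome to our site,850\n"
)
print(renamed)
```

Headers that are not in the mapping pass through unchanged, so the function is safe to run on files that are already clean.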

What ChatGPT Can Do with Crawl Data

ChatGPT can essentially automate the “analysis” part of an audit by reading Screaming Frog’s data. Here are some things ChatGPT can help with:

- Identify Keywords and Content Themes: ChatGPT can scan page titles, headings, and content columns to extract the primary and secondary keywords for each page, or suggest NLP/LSI keywords. (For example, it could take an “H1, Title, Meta” concatenation and list possible target keywords.)

- Improve Metadata: It can suggest improvements to titles and meta descriptions. For instance, you could prompt it: “Make these meta descriptions more compelling and include target keywords.” It can rewrite or augment them in bulk.

- Spot Quality Issues: Ask it to flag pages with very low word counts, shallow content, or missing headings. ChatGPT can generate a shortlist like “Pages with <300 words: /page1, /page2…” and explain why that’s a problem.

- Provide SEO Tips: It can review on-page data and give human-readable advice: e.g. “Page X has no H2 tags – consider adding subheadings to improve structure” or “Page Y’s title is identical to Page Z’s – revise one to avoid duplication.”

In short, ChatGPT turns your crawl spreadsheet into a conversational QA session. You don’t have to sift rows yourself; instead, you ask the AI and it tells you what it finds, often summarizing the most important issues. A user sharing an example found that ChatGPT can even turn a Screaming Frog report into Python-coded pivot tables and charts – e.g. creating an internal-linking heatmap from the data. In the example below, ChatGPT generated a visual “internal linking heatmap” showing which pages link to which (warmer colors mean more links):

Example chart generated by ChatGPT’s Advanced Data Analysis from crawl data – an “internal linking heatmap” showing source vs. destination pages.

This shows how ChatGPT can go beyond tables into visual insights. For most small-site audits you won’t need complex charts, but it’s powerful to know you can ask for them if needed (e.g. link distribution, crawl frequency over time, traffic vs. content size, etc.).

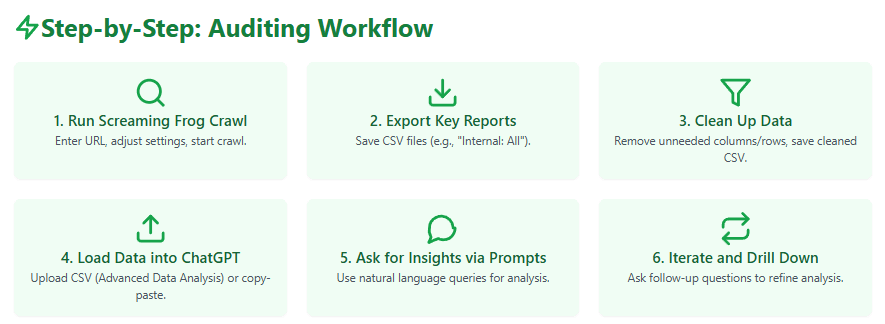

Step-by-Step: Auditing Workflow with Screaming Frog and ChatGPT

To combine Screaming Frog and ChatGPT in a practical audit, follow these steps:

- Run a Screaming Frog Crawl. Open SEO Spider and enter your website’s URL. Adjust settings if needed (e.g. limit crawl depth, ignore certain subdomains). Then start the crawl. Screaming Frog will spider your site and populate its tables.

- Export Key Reports. After the crawl finishes, use Bulk Export or the main window to save CSV files. Common exports include “Internal: All” (all page URLs with SEO data) and specific reports like “Redirects”, “Duplicate Pages”, or “Images” if relevant. Name them clearly (e.g. site_crawl_SEP2025.csv).

- Clean Up the Data. Open the CSV in Excel or a text editor. Remove any columns you won’t use (keep, for example: URL, Title, Meta Description, H1-1, Word Count, Status Code, Inlinks). Also filter out irrelevant URLs (e.g. non-HTML files, admin pages, etc.). The goal is to have a focused dataset that fits ChatGPT’s input limits. Save the cleaned CSV.

- Load Data into ChatGPT. If you have GPT-4 with Advanced Data Analysis, upload the CSV with the file attachment button (enable the “Advanced Data Analysis” mode first if your interface asks for it); ChatGPT will show a table preview. If you only have free ChatGPT, you can copy-paste small sections of the CSV, or even paste the entire content if it’s brief. For large sheets, ask ChatGPT to analyze specific columns or subsets.

- Ask for Insights via Prompts. Now you can talk to ChatGPT about the data. For example:

**Prompt:** “The attached spreadsheet has our site crawl data (URL, Page Title, Meta Description, H1, Word Count). Please identify all pages that have missing or duplicate meta titles, and suggest improvements.”

ChatGPT might reply with a list of URLs and notes like “Page A and Page B have the same title – consider making them unique with relevant keywords.” Or:

**Prompt:** “Analyze this data and list the top 5 pages with word count under 250 words. For each, suggest a few keyword topics or content ideas to expand.”

It could output a table or bullet list of pages plus keyword suggestions.

- Iterate and Drill Down. You can ask follow-up questions. For instance, “For Page A, what keywords does it currently target, and what other keywords could be relevant?” Or “Summarize the main content issues found in one paragraph.” Each prompt refines the analysis.
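Because ChatGPT can occasionally misread a sheet, it helps to have an independent check for simple findings like missing or duplicate titles. The sketch below does that in plain Python. The column names ("Address", "Title 1") and the sample rows are assumptions for illustration.

```python
from collections import defaultdict


def audit_titles(rows):
    """Return (urls_missing_title, {title: [urls]}) for repeated titles."""
    missing = []
    by_title = defaultdict(list)
    for row in rows:
        title = (row.get("Title 1") or "").strip()
        if not title:
            missing.append(row["Address"])
        else:
            # Compare case-insensitively so "Home" and "home" count as one.
            by_title[title.lower()].append(row["Address"])
    duplicates = {t: urls for t, urls in by_title.items() if len(urls) > 1}
    return missing, duplicates


# Made-up rows shaped like csv.DictReader output.
rows = [
    {"Address": "https://example.com/a", "Title 1": "Blue Widgets"},
    {"Address": "https://example.com/b", "Title 1": "blue widgets"},
    {"Address": "https://example.com/c", "Title 1": ""},
    {"Address": "https://example.com/d", "Title 1": "Red Widgets"},
]
missing, duplicates = audit_titles(rows)
print("Missing titles:", missing)
print("Duplicate titles:", duplicates)
```

If the lists here disagree with what ChatGPT reported, trust the script and ask ChatGPT to re-check its answer against the specific URLs.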

Here are some example ChatGPT prompts you might use (tailor them to your data):

- Analyze Content and Traffic:

“Here is a Screaming Frog crawl export with URL, Title, Meta Description, and Word Count. Identify the 10 pages with the highest traffic (we’ve marked them in a ‘traffic’ column) that have under 300 words. For each, suggest a few keywords or topics to target in expanding the content.”

- Find Broken or Missing Elements:

“From this data, list all URLs with status code 404 or redirect chains longer than 2. Also, flag any pages with missing <h1> tags or empty meta descriptions.”

- Duplicate Content:

“Which pages have duplicate meta descriptions? Provide a table of those URLs and suggest unique meta descriptions (30-160 characters) for each.”

- Prioritize SEO Issues:

“Looking at this crawl data, create a prioritized checklist of SEO fixes. Rank issues by impact (e.g., site-critical: broken links, missing titles; moderate: duplicate content; low: missing alt tags).”

- Content Planning:

“Generate a content plan from these keywords. We have these target keywords (attached). For each keyword group, suggest a blog post title and a short outline of subtopics.”
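To see what a prioritized checklist looks like before you ask ChatGPT for one, here is a rough rule-based sketch. The tiers loosely follow the example in the prompt above (broken pages and missing titles first, duplicates next); treating a missing meta description as low priority is an assumption made for this sketch, as are the column names and sample rows.

```python
from collections import Counter


def clean(value):
    """Normalize a cell for comparison: no None, trimmed, lowercase."""
    return (value or "").strip().lower()


def build_checklist(rows):
    """Return (priority, issue, url) tuples, most urgent first."""
    meta_counts = Counter(
        meta
        for meta in (clean(r.get("Meta Description 1")) for r in rows)
        if meta
    )
    findings = []
    for r in rows:
        url = r["Address"]
        if str(r.get("Status Code", "")).startswith("4"):
            findings.append((1, "broken page (4xx)", url))
            continue  # other checks mean little for a page that is gone
        if not clean(r.get("Title 1")):
            findings.append((1, "missing title", url))
        meta = clean(r.get("Meta Description 1"))
        if meta and meta_counts[meta] > 1:
            findings.append((2, "duplicate meta description", url))
        elif not meta:
            findings.append((3, "missing meta description", url))
    return sorted(findings)


# Made-up rows shaped like csv.DictReader output.
rows = [
    {"Address": "https://example.com/a", "Status Code": "404", "Title 1": "", "Meta Description 1": ""},
    {"Address": "https://example.com/b", "Status Code": "200", "Title 1": "", "Meta Description 1": "Buy widgets"},
    {"Address": "https://example.com/c", "Status Code": "200", "Title 1": "Widgets", "Meta Description 1": "Buy widgets"},
    {"Address": "https://example.com/d", "Status Code": "200", "Title 1": "About", "Meta Description 1": ""},
]
checklist = build_checklist(rows)
for priority, issue, url in checklist:
    print(f"P{priority}: {issue}: {url}")
```

A fixed rule set like this will not weigh traffic or business context the way a good prompt can, but it gives you a baseline to compare ChatGPT's ranking against.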

In practice, you can paste these prompts into ChatGPT’s interface (or in the ChatGPT code assistant chat) and let the model respond. These prompts show how natural language queries turn your Screaming Frog data into actionable insights. The AI basically becomes a smart assistant pointing out “priority 1: fix these broken links” or “consider adding content about X on these pages.”

Tip: Copying data into ChatGPT can be tricky if the file is large. For very large crawls, use ChatGPT-4’s file upload (Advanced Data Analysis) so you don’t hit the input limit. If stuck on an error, trim the data more, or break it into smaller chunks (e.g. analyze one section of the site at a time).
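One simple way to break a crawl into smaller chunks is to group URLs by their first folder. The sketch below does this with the standard library; grouping by first path segment and the "(root)" label for top-level pages are choices made for this example, not a Screaming Frog feature.

```python
from collections import defaultdict
from urllib.parse import urlparse


def split_by_section(urls):
    """Group URLs by first path segment; top-level pages go to "(root)"."""
    sections = defaultdict(list)
    for url in urls:
        segments = [s for s in urlparse(url).path.split("/") if s]
        # /blog/post-1 belongs to "blog"; / and /about share "(root)".
        key = segments[0] if len(segments) > 1 else "(root)"
        sections[key].append(url)
    return dict(sections)


urls = [
    "https://example.com/",
    "https://example.com/about",
    "https://example.com/blog/post-1",
    "https://example.com/blog/post-2",
    "https://example.com/shop/widgets/blue",
]
sections = split_by_section(urls)
for name, members in sections.items():
    print(name, len(members))
```

Each group can then be saved as its own CSV and analyzed in a separate ChatGPT conversation.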

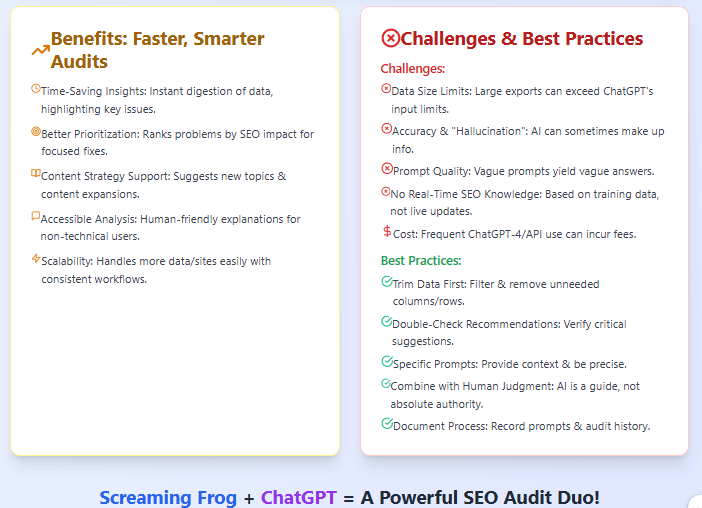

Benefits: Faster, Smarter Audits for Small Teams

Combining Screaming Frog with ChatGPT offers big advantages, especially for small businesses or solo content creators:

- Time-Saving Insights: Instead of manually sifting through hundreds of rows, ChatGPT instantly digests the data and highlights key issues. This cuts down the “grunt work” in an audit. You get instant answers like “these 20 pages need title tags” rather than hunting them yourself.

- Better Prioritization: ChatGPT can rank problems by SEO impact. For example, it can tell you which issues are site-wide (high priority) versus page-specific. This helps small teams focus on fixes that move the needle on traffic quickly.

- Content Strategy Support: By analyzing word counts and keywords, ChatGPT can suggest new topics or content expansions. If your audit finds a page with very thin content, ask ChatGPT to generate a list of relevant subtopics or keywords – essentially helping you plan blog posts or landing page improvements.

- Accessible Analysis: Non-technical users can simply ask ChatGPT for human-friendly explanations. Even if you don’t know SQL or Excel well, you can say “explain this data” or “what does this crawl show” and get a plain-English summary.

- Scalability: Once you have a workflow, it can handle more data or more sites easily. Screaming Frog can crawl multiple sites (or sections) via command-line scheduling, and ChatGPT can analyze new data with similar prompts. A single person can run many audits because the AI does the heavy lifting.

For example, if you find dozens of orphan pages (unlinked pages), you might ask ChatGPT: “Which of these unlinked pages could be consolidated or have links added? Give me an action plan.” It might reply with logical groupings of content and suggest where to add internal links or if some pages should be merged. Without GPT, doing that grouping manually would be laborious.

Automation Options

- Screaming Frog Scheduling: The SEO Spider supports a command-line mode and Windows Task Scheduler (or cron on Mac/Linux) to run crawls automatically. You can set up nightly or weekly crawls and have the reports saved to a folder. This way, you get updated CSVs without clicking anything.

- ChatGPT Integration: Screaming Frog’s latest versions allow direct integration with the OpenAI API. With this, you can actually configure prompts inside Screaming Frog and have ChatGPT run during the crawl (for example, to auto-generate alt text for images). This is advanced, but it means the audit can be partly automated without leaving the app.

- OpenAI API: For technically inclined users, you can use OpenAI’s API to automate analysis. A Python script (similar to the code ChatGPT runs behind the scenes) could take the CSV, send it to the model, and parse the answers. This requires coding and an OpenAI API key, but it scales.

- Plugins and Tools: There are custom GPTs (in the GPT Store, which replaced ChatGPT plugins) for data analysis or for SEO tasks, and third-party tools (like some AIPRM prompts) that combine SEO tools with GPT. These can streamline the process.
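For the scheduling option, a scheduled job usually boils down to one command line. The sketch below only builds and prints that command. The executable name and flags (--crawl, --headless, --output-folder, --timestamped-output, --export-tabs) reflect Screaming Frog's command-line options as best I understand them, so confirm them with the tool's --help output for your version; the URL and folder are placeholders.

```python
import shlex


def build_crawl_command(url, output_folder, executable="screamingfrogseospider"):
    """Build the argument list for a headless crawl exporting Internal:All."""
    return [
        executable,
        "--crawl", url,
        "--headless",
        "--output-folder", output_folder,
        "--timestamped-output",
        "--export-tabs", "Internal:All",
    ]


cmd = build_crawl_command("https://example.com", "/tmp/crawls")

# Print a shell-ready line to paste into cron or a Task Scheduler action.
print(shlex.join(cmd))

# To run it from Python instead (needs Screaming Frog installed, and
# command-line use may require a licence):
#   import subprocess
#   subprocess.run(cmd, check=True)
```

On Windows the executable has a different name and path, so pass it in through the executable argument.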

Overall, the workflow can go from an exported Screaming Frog file to ChatGPT in just a few clicks (or a scheduled job), which is much faster than doing each step manually.
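For the OpenAI API route, most of the work is packaging the CSV and the question into a request. Here is a hedged sketch: build_messages is a hypothetical helper, the 20,000-character cap is an arbitrary safety limit, and the model name is left as a parameter because available models change. The ask_model function uses the official openai Python package and is defined but not called here.

```python
def build_messages(csv_text, question, max_chars=20000):
    """Build a chat-style message list, truncating oversized CSV text."""
    snippet = csv_text[:max_chars]
    note = " (truncated)" if len(csv_text) > max_chars else ""
    return [
        {
            "role": "system",
            "content": "You are an SEO analyst. Answer only from the CSV provided.",
        },
        {
            "role": "user",
            "content": f"{question}\n\nCrawl data{note}:\n{snippet}",
        },
    ]


def ask_model(messages, model):
    """Send the messages via the openai package (not executed in this sketch)."""
    from openai import OpenAI  # pip install openai; reads OPENAI_API_KEY

    client = OpenAI()
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content


messages = build_messages(
    "Address,Status Code\nhttps://example.com/old,404\n",
    "List all URLs with status code 404.",
)
print(messages[1]["content"])
```

Truncating by character count can cut a row in half, so for real use it is safer to trim whole rows or send one site section at a time.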

Challenges and Best Practices

No solution is perfect. Here are some limitations to watch and tips to overcome them:

- Data Size Limits: ChatGPT (especially free GPT-3.5/4) has input limits. Large SEO Spider exports can easily exceed those limits (e.g. >6MB will fail). Workaround: Filter and trim the data first. Remove unneeded columns, apply Screaming Frog’s filters during export (e.g. export only HTML pages). For massive sites, split the site into sections and crawl separately. Using ChatGPT-4 with Advanced Data Analysis (which allows larger uploads) is also a good solution if you can access it.

- Accuracy and “Hallucination”: ChatGPT tries to produce plausible text, but it can sometimes “hallucinate” (i.e. make up) information. Always double-check critical recommendations. For example, if it suggests a meta description, verify it’s relevant. Use ChatGPT’s answers as a guide, not an absolute authority.

- Prompt Quality: The quality of output depends on your prompts. Vague prompts yield vague answers. Provide context in your prompt, and be specific about what you want. For example, explicitly mention column names and formats. The OpenAI guide advises using descriptive column headers and plain language to improve analysis. For instance, rename “Content1” to “Main Content Body”.

- No Real-Time SEO Knowledge: ChatGPT doesn’t know your site’s goals or brand style. It will base answers only on the data you give it and general SEO best practices. Combine its suggestions with your judgment. Also, GPT’s training knowledge cuts off (for GPT-4 around 2023), so it might not reflect the absolute latest SEO algorithm changes. Use Google Search Central guidelines or SEO news for the latest practices.

- Cost: Frequent use of ChatGPT-4 or its API can incur costs. For occasional audits it’s fine, but if you plan to automate fully, factor in API usage fees. Consider batching questions or using GPT-3.5 for simpler tasks to save cost.

- Consistency and Documentation: To ensure audit consistency, keep a record of your process. Use the same prompt templates each time. Document which ChatGPT model you used (GPT-3.5, GPT-4, etc.) since answers can vary. Also save ChatGPT’s response logs along with the crawl data for future reference.

Best Practice: After ChatGPT gives recommendations, always export a CSV or report from Screaming Frog for key issues (like broken links or orphan pages). Then run another crawl after fixes to confirm the issues are resolved. This closes the loop. Also, cross-reference with Google Search Console: if ChatGPT says a page had indexing issues, check GSC’s Page indexing report (formerly Coverage) to see if it matches.
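Confirming that fixes worked is easy to script by comparing the broken URLs in the two crawls. This is a minimal sketch with made-up rows; the column names are assumptions, and "fixed" here simply means the URL no longer shows a 4xx or 5xx status in the newer crawl (including URLs that no longer appear at all).

```python
def broken_urls(rows):
    """Return the set of URLs whose status code is 4xx or 5xx."""
    return {
        r["Address"]
        for r in rows
        if str(r.get("Status Code", "")).startswith(("4", "5"))
    }


def compare_crawls(before_rows, after_rows):
    """Classify broken URLs as fixed, still broken, or newly broken."""
    before = broken_urls(before_rows)
    after = broken_urls(after_rows)
    return {
        "fixed": sorted(before - after),
        "still_broken": sorted(before & after),
        "new": sorted(after - before),
    }


# Made-up rows shaped like csv.DictReader output.
before_rows = [
    {"Address": "https://example.com/a", "Status Code": "404"},
    {"Address": "https://example.com/b", "Status Code": "404"},
    {"Address": "https://example.com/c", "Status Code": "200"},
]
after_rows = [
    {"Address": "https://example.com/a", "Status Code": "301"},
    {"Address": "https://example.com/b", "Status Code": "404"},
    {"Address": "https://example.com/c", "Status Code": "500"},
]
report = compare_crawls(before_rows, after_rows)
print(report)
```

The "new" list is the one to watch: it catches problems introduced while fixing the old ones.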

Putting It All Together

By now, you should have a clear picture of how Screaming Frog and ChatGPT complement each other in an SEO audit:

- Screaming Frog gathers the technical and on-page SEO data from your site crawl, highlighting problems in bulk (broken links, missing tags, duplicate content, etc.). It’s excellent at collecting exhaustive crawl data.

- ChatGPT takes that structured data and adds intelligence. You can ask it to interpret the data in plain English, prioritize fixes, generate content ideas, and create summaries or tables. For example, it can tell you which fixes have the highest SEO impact and even draft new metadata or content snippets to fix issues.

This combination lets non-technical users perform “smart audits.” You don’t need to read every line of the crawl; you just ask the AI. It’s like having an SEO analyst on your team who works 24/7 and can handle spreadsheets instantly.

Strategic Tips:

- Internal Linking for Audits: After fixing issues, use the Screaming Frog crawl to spot new internal linking opportunities. ChatGPT can help here too: for example, prompt it to suggest pages that should link to each other based on content. Internal linking boosts SEO and CTA conversions.

- Content Planning: Ask ChatGPT to find “content gaps” by comparing your keywords to crawl data. It might suggest blog topics based on missing keywords that your competitors use (if you feed it competitor URLs too).

- Monitoring Over Time: Run these combined audits periodically (monthly or quarterly). Compare the audit reports over time: ChatGPT can also help generate a “progress report” from older and newer crawl data.

Outbound Resources: For more on SEO audits and best practices, see Google’s Search Console help (e.g. understanding indexing reports) and Moz’s SEO guides for technical audits. Screaming Frog’s own site is invaluable – they have documentation on configuration and examples of ChatGPT integration (see screamingfrog.co.uk and their blog). OpenAI’s documentation explains how to use Advanced Data Analysis for spreadsheets. Leveraging these authoritative resources alongside the workflow above will help ensure accuracy.

Internal Resources: Check out our own MarketingSEO.in technical SEO page for similar case studies (we regularly use Screaming Frog to find broken links, duplicate content, and redirects). Also, Dr. Anubhav Gupta’s Elgorythm blog has an Ultimate Guide to Screaming Frog SEO Spider that covers many advanced features (worth a read to master the tool).

In summary, Screaming Frog + ChatGPT = a powerful SEO audit duo. Screaming Frog exhaustively scans and reports on your site’s technical health, and ChatGPT intelligently digests that report into clear, prioritized recommendations. Together they save time, improve decision-making, and help small teams punch above their weight on SEO. By following best practices—clean data, good prompts, and manual verification—you can confidently use this combo to keep your site optimized and your content strategy data-driven.

Frequently Asked Questions:

How can Screaming Frog and ChatGPT work together for SEO audits?

Screaming Frog collects detailed crawl data about your website, such as broken links, duplicate content, and missing metadata. ChatGPT can then read that data, answer natural language questions, and suggest SEO improvements—making audits smarter and faster for small teams.

Is ChatGPT accurate for analyzing SEO crawl reports?

ChatGPT is highly effective for interpreting structured data like Screaming Frog exports. While it provides insightful suggestions, it’s best used alongside human review and other SEO tools to ensure accuracy and context-specific decisions.

Can beginners use Screaming Frog and ChatGPT for SEO audits?

Yes, this combination is perfect for beginners. Screaming Frog captures the technical data, and ChatGPT explains the issues in plain language, helping even non-technical users identify and fix SEO problems confidently.

What types of SEO issues can ChatGPT help identify from Screaming Frog data?

ChatGPT can help find and explain issues such as missing title tags, low word count, duplicate meta descriptions, poor internal linking, and orphan pages. It can also suggest content improvements and prioritize tasks based on SEO impact.