Introduction

A data-driven approach can be a game-changer for websites, and for SEO that means measuring how your changes actually affect search traffic. If you’re a business owner or marketer wondering how to A/B test SEO changes, you’re in the right place. In this friendly guide, you’ll learn what SEO A/B testing and SEO split testing are, why they matter, and exactly how to A/B test SEO changes step by step. You’ll see both terms used throughout the guide: SEO split testing is essentially another name for an SEO experiment run across multiple pages.

“Continuous SEO testing is the lifeblood of digital marketing success,” says Rand Fishkin.

We’ll also look at a sample testing table and key metrics and tools to track your results. Let’s get started by defining SEO A/B testing.

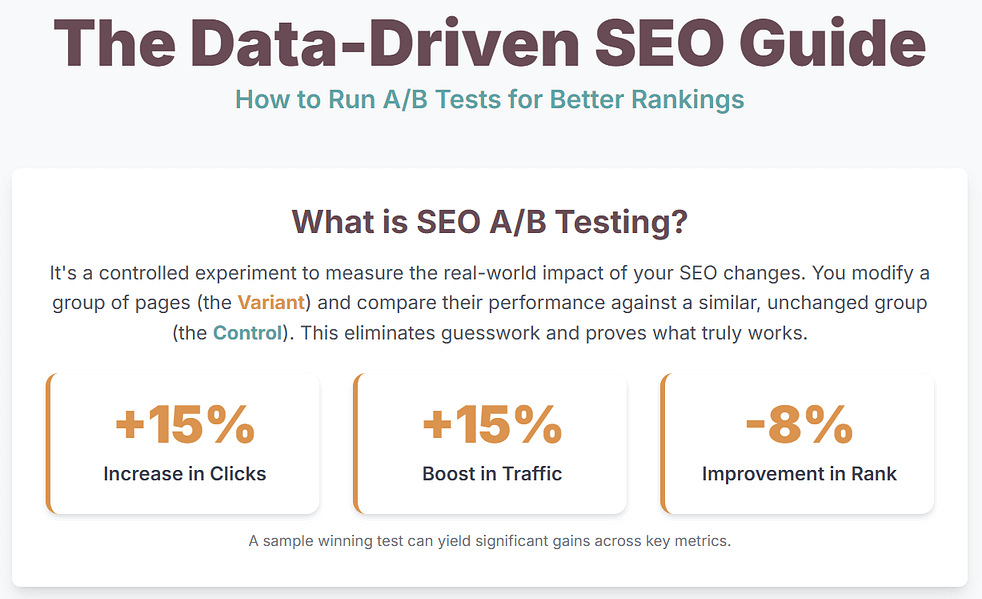

What Is SEO A/B Testing?

SEO testing (sometimes called SEO A/B testing or SEO split testing) involves making controlled changes to a group of pages and comparing them to a similar control group of unchanged pages. For example, you might update the title tag or content on 25 product pages (the variant) and compare their organic traffic and rankings to 25 similar pages left unchanged (the control). Because the change is the only systematic difference between the groups, the comparison shows how it affects traffic, clicks, and rankings instead of leaving it to guesswork. In practice, SEOs draw both groups from pages that share a template or content type, so the groups are comparable and bias is kept down. The method suits changes that can be rolled out across many similar pages, such as template-level edits. The two terms are used interchangeably in the industry, and this guide treats them as the same basic idea. The next sections explain how to A/B test SEO changes effectively on your site.

SEO Split Testing vs Traditional A/B Testing

These concepts sound alike but differ in approach. Traditional A/B testing (used by CRO specialists) means creating two versions of the same page and splitting user traffic between them. SEO split testing, by contrast, splits pages rather than visitors: Google indexes a single version of each URL, so you can’t show it half of each version the way you can with users. Instead, you group multiple pages into “control” and “variant” buckets, change the SEO elements on all variant pages, and then see how those pages perform vs. control. For instance, you might take 40 blog posts, keep 20 unchanged (control) and tweak titles on the other 20 (variant). Google then crawls each URL separately, and you measure the aggregate impact of the change.

Put simply: if you change one button colour or piece of copy and test it on one URL, with visitors split between versions, that’s a traditional A/B test. If you update the title or H1 on dozens of similar pages and compare their traffic against unchanged pages, that’s SEO split testing. Importantly, follow Google’s testing guidelines. Its advice to use 302 redirects or rel=canonical applies when a test serves alternate URLs for the same page, as traditional A/B tests often do; in an SEO split test, the change lives on the variant pages’ own URLs. Google also notes that small changes, such as a button’s size, colour, or placement, “often have little or no impact” on a page’s search snippet or ranking. In fact, experts note “A/B testing, when done correctly, doesn’t have to hurt your SEO”. The key is not to cloak (show Googlebot different content from what users see) or otherwise trick Google: just test and measure changes safely.

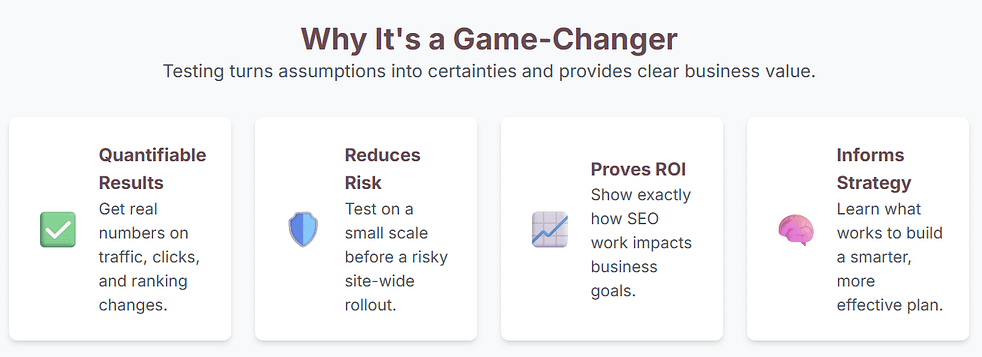

Why the Impact of SEO A/B Testing Matters

Measuring the impact of SEO A/B testing turns guesses into evidence: without tests, you can’t tell which changes actually helped. Even a small tweak (like rewriting a title or adding a keyword) can produce a surprisingly large lift in clicks or traffic. For example, a better title might raise click-through rate (CTR) by double digits, bringing many more visitors from the same rankings.

Consider the numbers: if your site converts 2–4% of visitors, a 10–20% increase in organic traffic (the kind of gain a winning SEO test can deliver) means 20 to 80 extra leads or sales a month on a site with 10,000 organic visits a month. In our sample test below, the variant pages saw 15% more sessions than the control pages. This is the impact of SEO A/B testing in practice: quantifiable gains rather than guesswork.
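The arithmetic behind that range is easy to check yourself. This sketch assumes a hypothetical site with 10,000 organic sessions a month and a conversion rate that stays the same after the traffic lift; `extra_conversions` is an illustrative helper, so substitute your own numbers.

```python
def extra_conversions(monthly_sessions: float, conversion_rate: float,
                      traffic_uplift: float) -> float:
    """Extra conversions per month from a relative lift in organic traffic.

    Assumes the conversion rate is unchanged by the lift (a simplification).
    """
    extra_sessions = monthly_sessions * traffic_uplift
    return extra_sessions * conversion_rate


# Hypothetical site: 10,000 organic sessions per month.
for rate in (0.02, 0.04):
    for uplift in (0.10, 0.20):
        print(f"{rate:.0%} conversion, +{uplift:.0%} traffic -> "
              f"{extra_conversions(10_000, rate, uplift):.0f} extra conversions")
```

The four combinations give between 20 and 80 extra conversions a month; on a smaller site the same percentage lift is worth proportionally less.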

“Without data, all SEO changes are just guesses,” says Neil Patel. Testing confirms what really works.

In short, knowing the impact of SEO A/B testing on your rankings, traffic, and conversions lets you invest effort in the strategies that truly move the needle.

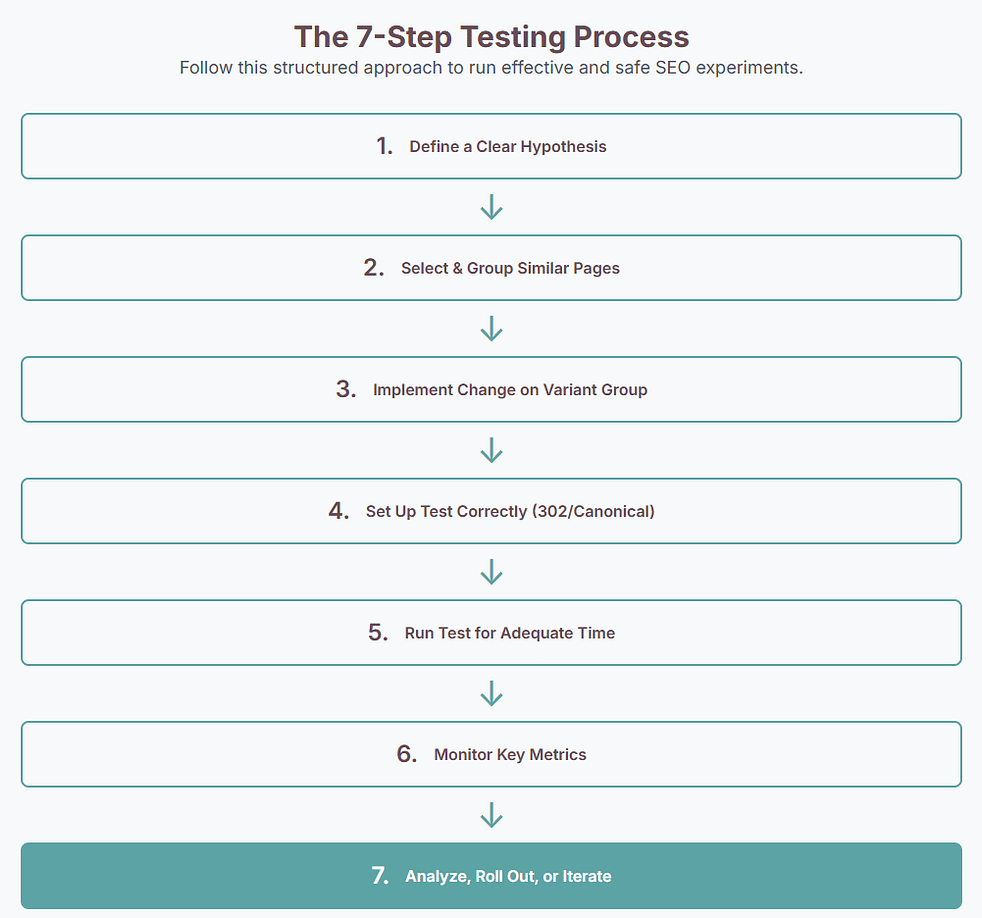

How to A/B Test SEO Changes – Step-by-Step

Let’s break down how to A/B test SEO changes in a clear, step-by-step process. Follow each step to set up your SEO split tests correctly:

- Define a Clear Hypothesis. Decide exactly what change you want to test and why. For example: “I believe adding our brand name to the title tag of these category pages will increase CTR.” A focused hypothesis gives your test direction and a metric to compare.

- Select Your Pages (Control vs. Variant). Choose a set of pages that are similar (same template or topic). Split them into two groups: control (no changes) and variant (where you apply the change). Ensure both groups have similar traffic patterns. Proper bucketing is essential: do not accidentally put all seasonal or promotional pages in one group. In one case, a site learned the hard way when its “cat” product pages all landed in one bucket during International Cat Day, producing a false uplift. To run SEO A/B testing correctly, assign pages to buckets at random (ideally within groups of similar pages) so that external spikes are likely to affect both buckets equally.

- Implement the Change on Variant Pages. Apply your SEO edit (new title tag, updated meta description, improved content, schema markup, etc.) only to the variant pages. Keep the control pages exactly as they were. The change should be the only difference between groups so you can attribute any lift to that change.

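- Set Up the SEO Test Properly. Make the change directly on the variant pages’ existing URLs and serve it to every visitor, Googlebot included, so search engines see exactly what users see. Don’t put the variant on a separate URL that canonicalizes or redirects back to the original: Google would keep indexing the original and never evaluate your change. (Google’s advice to use rel=canonical and 302 redirects applies to traditional A/B tests, where several URLs show versions of the same page.) Specialized SEO testing platforms, or a server-side change in your CMS, handle this; client-side tools such as Google Optimize, which Google discontinued in September 2023, were built for user-split tests. Google’s guidelines allow such experiments as long as they’re not cloaked.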

- Run the Test for an Adequate Time. Let your experiment run long enough to gather meaningful data. Depending on your traffic volume, this might be several weeks or months. Include full business cycles (holidays, weekly patterns) so you capture normal fluctuations. Ending too early is a common pitfall — always ensure statistical confidence before declaring a winner.

- Monitor Key Metrics. Focus on the metrics that reflect the change. The primary metric in SEO split testing is usually organic traffic (sessions) to the tested pages, because it captures the combined effect of ranking changes and CTR changes. Also track click-through rate (CTR) from search results, average position, and engagement and business metrics (bounce rate, conversions) for those pages. Use Google Analytics for sessions and conversions, and Google Search Console for impressions, clicks, CTR, and position. Remember: organic traffic is the “North Star” of SEO experiments.

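- Analyze the Results. After the test period, compare the performance of variant vs. control. Because the two buckets contain different pages, compare each bucket’s performance during the test against its own pre-test baseline rather than relying only on raw totals. A statistical significance calculator (e.g. Optimizely’s or Neil Patel’s) can give a rough check that differences aren’t due to chance, though these calculators are built for comparing conversion rates rather than traffic trends. If the variant group has significantly higher traffic or rankings than control, you’ve likely found a winning change. If not, no problem: it just means that particular tweak didn’t measurably move the needle.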

- Roll Out or Iterate. If you have a clear winner, apply the change more broadly (to all pages of that type). If the test was inconclusive or lost, analyze why and consider testing a different variation. Always document results so you remember what worked and what didn’t. Each test (even “failed” ones) increases your SEO knowledge.

By following these steps, you’ll know exactly how to A/B test SEO changes without breaking any rules. It’s a repeatable process: hypothesize, test, learn, and then test again.
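For step 2 (Select Your Pages), here is a minimal sketch of one way to bucket pages, assuming you have a dictionary of pre-test organic sessions per URL, for example from an analytics export. The function name and the data are hypothetical. It uses matched pairs: rank pages by traffic, pair adjacent pages, and flip a seeded coin within each pair, so both buckets end up with similar traffic while the assignment stays random.

```python
import random


def bucket_pages(page_sessions: dict[str, int],
                 seed: int = 42) -> tuple[list[str], list[str]]:
    """Split pages into control and variant buckets with similar traffic.

    page_sessions maps each URL to its organic sessions over a pre-test
    window. Pages are ranked by traffic, taken in adjacent pairs, and a
    seeded coin flip decides which page of each pair joins which bucket.
    With an odd number of pages, the lowest-traffic page is left out.
    """
    rng = random.Random(seed)  # seeded so the split is reproducible
    ranked = sorted(page_sessions, key=page_sessions.get, reverse=True)
    control, variant = [], []
    for i in range(0, len(ranked) - 1, 2):
        pair = [ranked[i], ranked[i + 1]]
        rng.shuffle(pair)  # random assignment within each matched pair
        control.append(pair[0])
        variant.append(pair[1])
    return control, variant


# Hypothetical category pages with pre-test session counts.
pages = {f"/category/{i}": 100 + 10 * i for i in range(40)}
control, variant = bucket_pages(pages)
print(len(control), len(variant))
print(sum(pages[u] for u in control), sum(pages[u] for u in variant))
```

To guard against the International Cat Day problem, you could run the same function separately within each subcategory and combine the results, so every page type is split across both buckets.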
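Steps 3 and 4 (implementing the change and setting up the test) might look like this on a server-rendered site. This is a hypothetical sketch: `VARIANT_URLS`, `BRAND`, the example.com domain, and `render_head` are placeholders, and the change being tested is the step 1 hypothesis (adding the brand name to category-page titles). The output depends only on the URL, never on the user agent, so Googlebot sees what users see, and every page keeps a self-referencing canonical.

```python
from html import escape

# Hypothetical variant bucket, e.g. the output of a bucketing step.
VARIANT_URLS = {"/category/cat-toys", "/category/dog-beds"}
BRAND = "ExampleShop"  # hypothetical brand name
SITE = "https://www.example.com"  # placeholder domain


def render_head(url: str, page_name: str) -> str:
    """Return the <title> and canonical tags for a category page.

    Variant pages get the brand appended to the title; control pages keep
    the original title. Nothing here inspects the user agent, so search
    engines and visitors receive identical HTML (no cloaking).
    """
    if url in VARIANT_URLS:
        title = f"{page_name} | {BRAND}"
    else:
        title = page_name
    canonical = SITE + url  # self-referencing: the page is its own canonical
    return (f"<title>{escape(title)}</title>\n"
            f'<link rel="canonical" href="{escape(canonical, quote=True)}">')


print(render_head("/category/cat-toys", "Cat Toys"))      # variant page
print(render_head("/category/bird-cages", "Bird Cages"))  # control page
```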
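For step 7 (Analyze the Results), one simple way to compare each bucket against its own baseline is to look at the daily ratio of variant to control sessions before and during the test: the control bucket absorbs site-wide swings, so a shift in the ratio points to your change. The sketch below assumes daily session totals per bucket and uses a permutation test for a rough p-value. The function names and the synthetic data are hypothetical, and treating days as interchangeable ignores day-to-day correlation, so the p-value is likely optimistic; dedicated testing platforms generally use more sophisticated forecasting models.

```python
import math
import random
from statistics import mean


def log_ratios(variant, control):
    """Daily log(variant sessions / control sessions)."""
    return [math.log(v / c) for v, c in zip(variant, control)]


def split_test_effect(pre_variant, pre_control, test_variant, test_control,
                      n_permutations=2000, seed=0):
    """Estimate the uplift of an SEO split test and a rough p-value.

    Compares the mean log variant/control ratio during the test with the
    same ratio before it. The p-value comes from a permutation test that
    reshuffles which days count as "before" and "during".
    """
    pre = log_ratios(pre_variant, pre_control)
    post = log_ratios(test_variant, test_control)
    observed = mean(post) - mean(pre)

    rng = random.Random(seed)
    pooled = pre + post
    extreme = 0
    for _ in range(n_permutations):
        rng.shuffle(pooled)
        fake_pre, fake_post = pooled[:len(pre)], pooled[len(pre):]
        if abs(mean(fake_post) - mean(fake_pre)) >= abs(observed):
            extreme += 1
    p_value = (extreme + 1) / (n_permutations + 1)
    uplift = math.exp(observed) - 1  # back from log scale to a relative change
    return uplift, p_value


# Synthetic example: 4 weeks before and 4 weeks during the test.
noise = [0.98, 1.02, 1.00, 0.99, 1.01, 1.00, 1.00] * 4
pre_control = [1000 + 50 * (d % 7) for d in range(28)]
pre_variant = [c * n for c, n in zip(pre_control, noise)]
test_control = [c * 1.05 for c in pre_control]  # site-wide 5% rise
test_variant = [c * 1.15 * n for c, n in zip(test_control, noise)]  # true +15%

uplift, p = split_test_effect(pre_variant, pre_control, test_variant, test_control)
print(f"estimated uplift {uplift:.1%}, permutation p = {p:.4f}")
```

Note that the site-wide 5% rise in the synthetic data cancels out in the ratio, so the estimate recovers the 15% lift that was built into the variant.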

Key Metrics and Tools

When running SEO experiments, some metrics and tools stand out:

- Organic Sessions: This is usually your primary metric. It captures total visitors gained from search.

- Click-Through Rate (CTR): A higher CTR on search results often translates to more clicks.

- Average Position: An upward shift (lower number) can explain traffic gains.

- Bounce Rate & Conversions: Track engagement and business outcomes to ensure changes help overall goals.

- Statistical Tools: Use an online significance calculator to know when results are valid.

- Analytics Platforms: Google Analytics or similar to measure sessions and goals.

- SEO Split Testing Tools: Specialized tools (SearchPilot, SEOTesting.com, seoClarity’s Split Tester) automate bucketing and tagging for you.

- A/B Test Platforms: Tools like VWO or Optimizely can run split-URL tests if configured for SEO.

- Educational Resources: If you ever wonder “how to A/B test SEO changes”, plenty of tutorials and case studies are available online. Use them to refine your approach.

Using the right metrics and tools ensures you can quantify the impact of SEO A/B testing and manage experiments at scale. Always focus on real traffic changes rather than vanity metrics.
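When you roll page-level Search Console data up to bucket level, two details are easy to get wrong: bucket CTR should be total clicks divided by total impressions (not the average of page CTRs), and average position is best weighted by impressions. Here is a minimal sketch, assuming a hypothetical export with one row per page; `PageStats`, `bucket_summary`, and `pct_change` are illustrative names, and impression-weighting is an approximation of how Search Console itself computes position.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    """One page's Search Console totals over the test period (hypothetical export)."""
    url: str
    clicks: int
    impressions: int
    position: float  # the page's average position over the period


def bucket_summary(rows: list[PageStats]) -> dict[str, float]:
    """Aggregate page rows into bucket-level clicks, impressions, CTR, and position."""
    clicks = sum(r.clicks for r in rows)
    impressions = sum(r.impressions for r in rows)
    if impressions == 0:
        return {"clicks": clicks, "impressions": 0,
                "ctr": 0.0, "avg_position": float("nan")}
    return {
        "clicks": clicks,
        "impressions": impressions,
        # Bucket CTR is total clicks / total impressions, not a mean of page CTRs.
        "ctr": clicks / impressions,
        # Weight each page's position by its impressions (an approximation).
        "avg_position": sum(r.position * r.impressions for r in rows) / impressions,
    }


def pct_change(control: float, variant: float) -> float:
    """Relative change of the variant versus the control."""
    return (variant - control) / control


variant_rows = [PageStats("/category/a", 100, 2000, 8.0),
                PageStats("/category/b", 20, 1000, 14.0)]
summary = bucket_summary(variant_rows)
print(summary)
print(f"{pct_change(1000, 1150):+.0%}")
```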

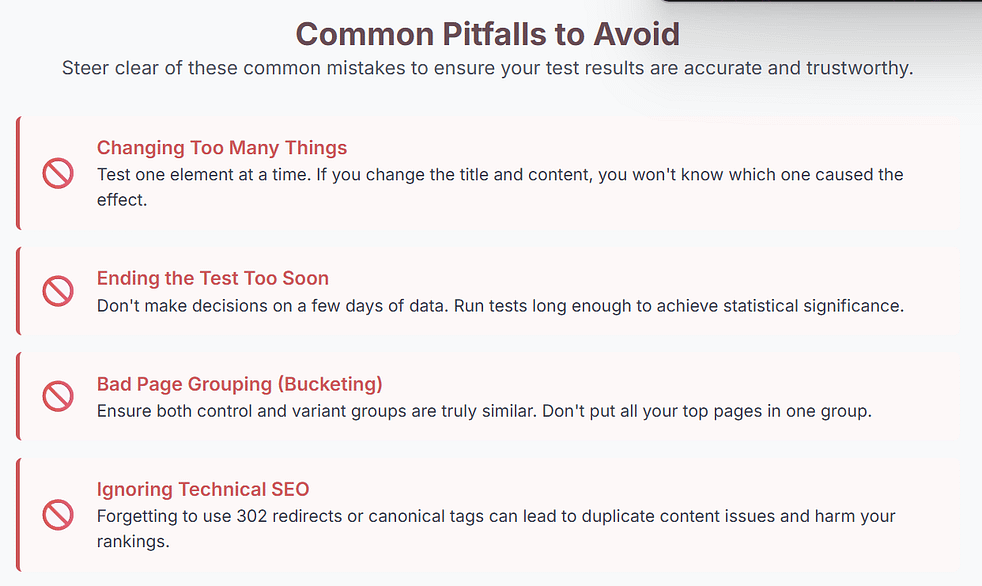

Common Pitfalls to Avoid

Even experienced SEOs can stumble when starting SEO split tests. Watch out for these traps:

- Skipping the Test: Don’t just guess at changes. Learn how to A/B test SEO changes first. Random tweaks without measurement are a waste of effort.

- Insufficient Traffic or Time: Ending a test too soon or with too little data can lead to false conclusions. Ensure each bucket gets enough traffic and run long enough for statistical confidence.

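- Bad Bucketing: Don’t let one type of page cluster in a single bucket. Spread page types (for example, product subcategories or seasonal lines) evenly between control and variant. Otherwise, if an external event (a holiday, a news story) spikes one type of page, it will skew one group and distort the results.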

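- Cloaking/Duplicate Content: Never show Googlebot different content from what users see. If a test serves alternate URLs for the same page, use 302 redirects or rel=canonical as Google recommends; in an SEO split test, the variant pages keep their own URLs and their normal canonical tags. If done incorrectly, Google might treat the test as cloaking or duplicate content.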

- Changing Too Many Things: Test one element at a time (only titles, or only content). If you edit multiple elements at once, you won’t know which change actually caused any effect.

- Ignoring External Factors: Run tests outside of big algorithm update windows or major marketing campaigns. Ensure seasonality (daily, weekly patterns) affects both groups equally.

- Neglecting the “Impact”: Always quantify the impact of SEO A/B testing on your results. After a test, compare variant and control metrics side by side; otherwise you’ll never see the value of what you did.

Avoiding these pitfalls will make your SEO split testing smooth and trustworthy. Remember: every experiment tells you something valuable, even if the change doesn’t win.

Sample Testing Table

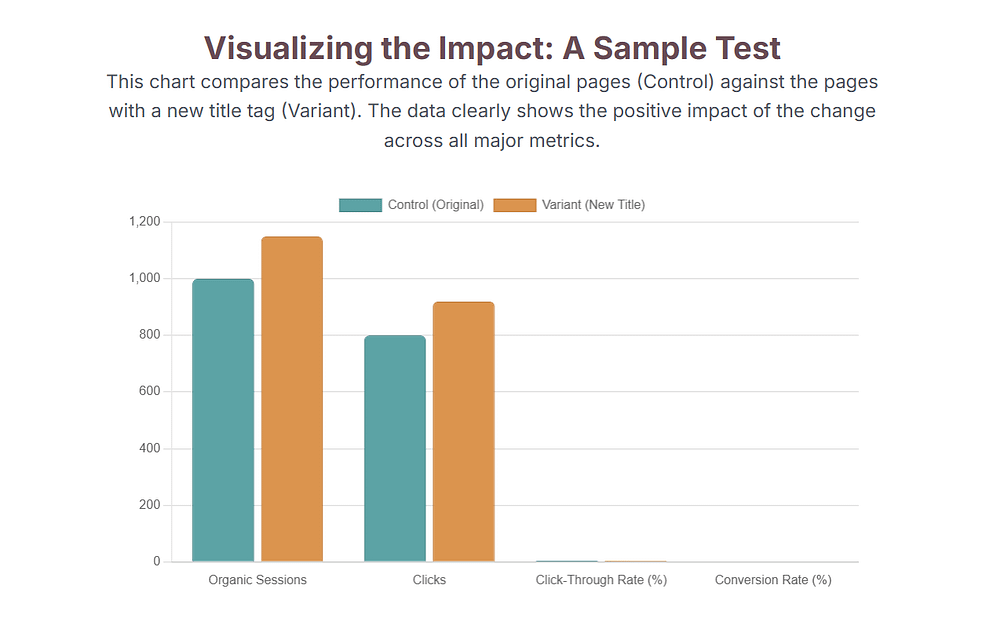

As a concrete example, suppose we tested new title tags on some category pages, with the control and variant buckets drawing similar traffic before the test. After a month, the data might look like this:

| Metric | Control (Original) | Variant (New Title) | Change |
|---|---|---|---|
| Organic Sessions | 1,000 | 1,150 | +15% |
| Impressions | 20,000 | 21,000 | +5% |
| Clicks | 800 | 920 | +15% |
| Click-Through Rate | 4.0% | 4.4% | +10% |
| Avg. Position | 10.0 | 9.2 | –8% |
| Bounce Rate | 65% | 60% | –8% |
| Conversion Rate | 2.0% | 2.3% | +15% |

In this fictional test, the variant pages (with the new title) saw about 15% more sessions and clicks than the control pages, along with a higher CTR and a slightly better average position. Figures like these quantify the impact of SEO A/B testing on the site’s traffic, that is, how much the SEO change moved the needle, although in a real test you would confirm the difference is statistically significant before rolling the change out.
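The significance calculators mentioned earlier typically run a two-proportion z-test. Here is a minimal sketch of that test applied to the fictional table’s clicks and impressions; `two_proportion_z_test` is an illustrative helper, not any particular calculator’s code. Treat it as a rough check: it assumes every impression is an independent trial, which search data doesn’t strictly satisfy, and it tests CTR alone, not the whole experiment.

```python
import math


def two_proportion_z_test(clicks_a: int, impressions_a: int,
                          clicks_b: int, impressions_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two CTRs (a = control, b = variant)."""
    p_a = clicks_a / impressions_a
    p_b = clicks_b / impressions_b
    # Pooled CTR under the null hypothesis that both buckets share one rate.
    pooled = (clicks_a + clicks_b) / (impressions_a + impressions_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / impressions_a + 1 / impressions_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


z, p = two_proportion_z_test(800, 20_000, 920, 21_000)
print(f"z = {z:.2f}, two-sided p = {p:.3f}")
```

With these numbers, z comes out at about 1.92 and the two-sided p-value at roughly 0.054, just above the conventional 0.05 threshold. In other words, a CTR lift that looks healthy in a table may still need more data before you can call it a win.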

Final Thoughts

SEO is part art, part science, and SEO A/B testing is where the science comes in. By systematically testing title tags, content updates, or other SEO elements, you remove guesswork from your strategy. We’ve covered the process of how to A/B test SEO changes from start to finish: forming a hypothesis, running the test, and analyzing real data. We also highlighted the key metrics, tools, and common traps.

The key takeaway: Always test your ideas. Start with small changes, measure their impact, then build on what you learn. Over time, these data-driven wins add up. Remember, even a small lift on many pages can significantly improve your bottom line. The impact of SEO A/B testing can be substantial: one successful experiment can noticeably lift traffic for certain queries, while failures tell you what not to spend time on.

Now it’s your turn: pick an SEO change to test, set up your control and variant groups, and let the data speak. Follow the steps above, and you’ll find out what works for your site. Happy testing!

FAQs

Q: Can SEO A/B testing hurt my rankings?

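A: When done correctly, SEO split tests should not harm rankings. Never cloak content: Google should see the same pages users see. If a test serves alternate URLs for the same page, use 302 redirects or rel=canonical tags as Google recommends. Google also notes that small changes, such as a button’s size, colour, or placement, “often have little or no impact” on a page’s search snippet or ranking. By following its guidelines, you protect your site while measuring results.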

Q: How long should I run an SEO split test?

A: Run it long enough to gather reliable data. This typically means several weeks or a few months, depending on traffic volume. Make sure you capture enough organic visits to each bucket (and a full business cycle) so your results are statistically significant.

Q: What tools can help with SEO A/B testing?

A: Google Analytics and Search Console are must-haves for tracking results. For setup, SEO-specific platforms like SearchPilot or SEOTesting.com automate the process. General A/B testing tools (VWO, Optimizely) can also run split-URL tests when configured properly; Google Optimize, once a popular option, was discontinued in September 2023.

Q: Is it OK to test multiple SEO elements at once?

A: It’s best to change only one element per test (e.g. only the title tag or only the meta description). If you change too many things at once, you won’t know which one drove any improvement. Keep tests focused so you learn exactly what impacts the metrics.